Testing Enterprise SSO: A Practical Developer Guide

TL;DR

- Most SSO failures come from subtle configuration gaps, such as missing attributes, incorrect assertion URLs, or certificate drift (IdP signing certificates change while the application still trusts an older certificate), rather than from application code issues.

- Use a default test organization and IdP simulator to reproduce login flows without depending on live enterprise IdPs or production environments.

- Inspect authentication events, timelines, and payload summaries to understand how assertions, attributes, and signatures are processed step by step.

- Rely on structured error codes and validation checkpoints instead of generic UI messages to pinpoint the exact failure layer.

- Repeat the same authentication path after each change to confirm fixes and prevent configuration drift before enabling SSO for customers.

An enterprise customer attempted to log in using Single Sign-On after weeks of internal testing. The login screen redirected correctly, the metadata appeared valid, and the certificates were already configured. Yet authentication failed with a generic “Authentication Error.” The only detail the UI offered was “default role not configured,” and the logs appeared incomplete. The IdP team confirmed their setup was correct, leaving the product team comparing payloads instead of shipping features.

SSO failures rarely break loudly. They usually fail on partially correct configurations: redirects work, XML parses, and tokens arrive, but one small mismatch in attributes, assertion URLs, or roles silently invalidates the flow. Because SSO spans two systems owned by different teams, reproducing the issue becomes slow and unpredictable.

This guide walks through a practical SSO testing workflow instead of theory. We will follow a real debugging journey using controlled testing flows, simulators, and protocol-level logs rather than generic setup instructions.

Common SSO Testing Failures That Look Correct but Break Authentication

SSO failures often hide behind “almost correct” configurations. In the earlier scenario, the UI reported “default role not configured,” but that message did not reveal the underlying validation issue; UI errors rarely tell the full story. Redirects may succeed, tokens may arrive, and XML may parse correctly, yet authentication still fails because one protocol expectation is slightly misaligned. These mismatches usually sit in metadata, attributes, or signing details rather than in application code.

Most SSO testing issues fall into a few repeatable categories. Recognizing these patterns early helps teams debug more quickly rather than blindly inspecting payloads. Many of these failures produce identical UI errors, which is why structured testing and log visibility become essential rather than optional.

Frequent Failure Categories During SSO Testing

Protocol-level configuration issues

- Redirect URI mismatches between the application and the IdP configuration

- Assertion Consumer Service (ACS) URL errors

- Signature validation failures due to certificate rotation or incorrect keys

- Audience or issuer mismatches in SAML assertions

- Expired or cached metadata causing stale configuration reads

Time-related issues

- Clock skew between the Identity Provider and Service Provider servers

User-state and authorization issues

- Missing mandatory attributes such as email, role, or NameID

- User state problems, such as inactive accounts or missing default roles
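To make the clock-skew category concrete, here is a minimal Python sketch of the time-window check a Service Provider applies to the SAML `NotBefore` and `NotOnOrAfter` conditions. The three-minute tolerance and the function name are assumptions for illustration, not values taken from any particular library.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed tolerance for illustration; SP libraries typically make this configurable.
ALLOWED_SKEW = timedelta(minutes=3)


def check_validity_window(
    not_before: datetime,
    not_on_or_after: datetime,
    now: datetime,
    skew: timedelta = ALLOWED_SKEW,
) -> Optional[str]:
    """Return None if the assertion is within its validity window, else a reason.

    The window is widened by `skew` on both sides so that small clock
    differences between IdP and SP do not reject a freshly issued assertion.
    """
    if now < not_before - skew:
        return "assertion not yet valid (IdP clock may be ahead of the SP)"
    if now >= not_on_or_after + skew:
        return "assertion expired (SP clock may be ahead, or the response arrived late)"
    return None


if __name__ == "__main__":
    issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    # SP clock running 10 minutes behind the IdP: rejected despite a valid assertion.
    print(check_validity_window(issued, issued + timedelta(minutes=5),
                                now=issued - timedelta(minutes=10)))
```

If this check rejects an assertion that is otherwise well-formed, checking time synchronization on both servers is usually faster than touching certificates or metadata.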

Why These Failures Are Hard to Detect

These issues rarely break the entire flow. They allow partial success: the request reaches the IdP and a response comes back, but it fails during validation or attribute processing. That partial success is what makes SSO bugs time-consuming, because the system appears functional until the final verification step rejects the assertion.
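The partial-success pattern can be sketched as an ordered pipeline in which each stage runs only if the previous one passed. The stage names and dict fields below are hypothetical stand-ins for a parsed SAML response, not any SSO library's data model.

```python
from typing import Callable, Optional

# Each stage inspects a parsed response (modeled as a plain dict) and returns
# an error message, or None if the stage passes. All field names are hypothetical.
Stage = Callable[[dict], Optional[str]]

STAGES: list[tuple[str, Stage]] = [
    ("redirect", lambda r: None if r.get("reached_acs") else "response never reached ACS"),
    ("parse", lambda r: None if r.get("xml_well_formed") else "malformed XML"),
    ("signature", lambda r: None if r.get("signature_valid") else "signature verification failed"),
    ("attributes", lambda r: None if r.get("attributes", {}).get("email") else "missing required attribute: email"),
    ("authorization", lambda r: None if r.get("default_role") else "default role not configured"),
]


def run_stages(
    response: dict, stages: list[tuple[str, Stage]]
) -> tuple[list[str], Optional[tuple[str, str]]]:
    """Return (stages that passed, (failing stage, error)) or (all stages, None)."""
    passed: list[str] = []
    for name, check in stages:
        error = check(response)
        if error is not None:
            return passed, (name, error)  # stop at the first rejection
        passed.append(name)
    return passed, None
```

Returning the stages that passed alongside the first failure shows which layers do not need to be touched, which is exactly the information a generic UI error hides.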

Reproducing SSO Failures Using a Controlled Testing Flow Instead of Live Customers

Uncontrolled testing creates inconsistent results. After identifying common failure categories, the next challenge is reproducing them reliably. Testing SSO against live enterprise IdPs often introduces delays, dependency on external teams, and configuration drift. Small changes in metadata, certificates, or redirect URLs can alter behavior between attempts, making debugging unpredictable and slow.

A controlled testing flow isolates variables and keeps the code path stable. Instead of rewiring configuration files or creating multiple environment branches, a dedicated testing organization with an IdP simulator lets developers run the same authentication logic repeatedly, changing only the test context. This makes failures repeatable rather than accidental and allows teams to validate login, attribute mapping, and error handling before customer onboarding.

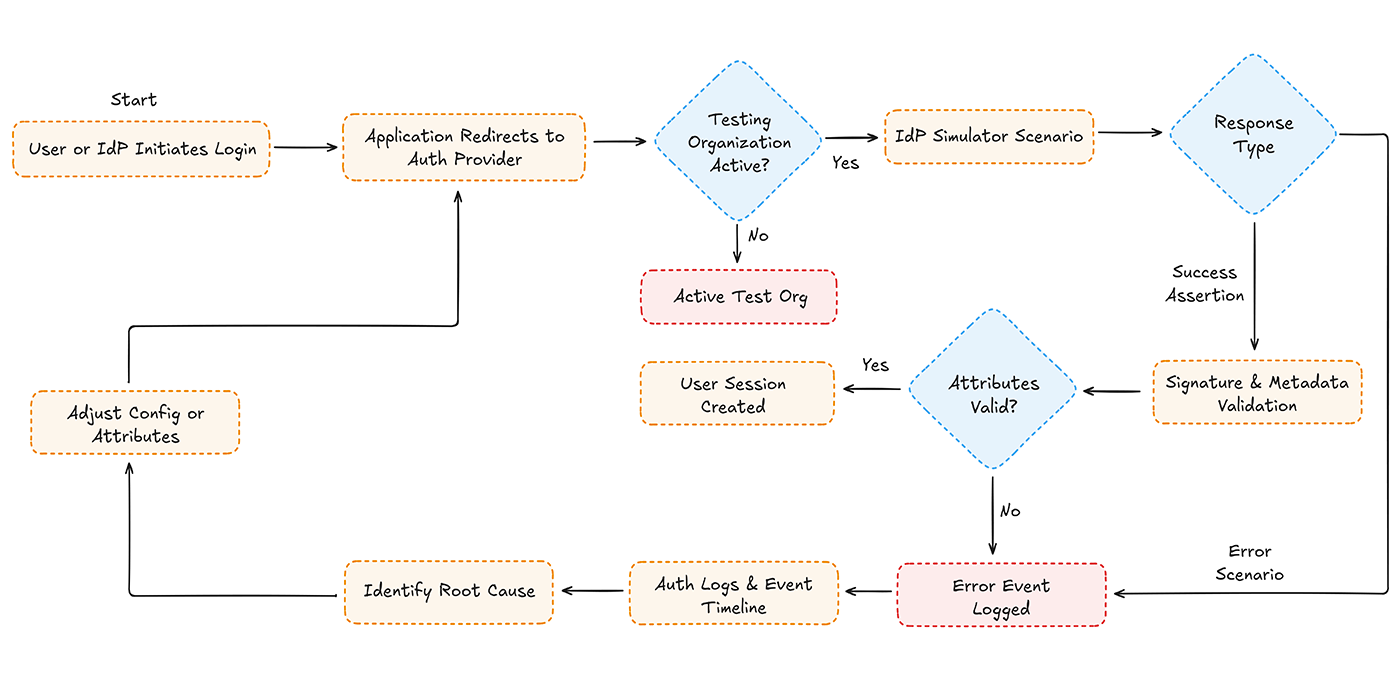

Structured Testing Flow

In our scenario, reproducing the failed login required a validation loop, log inspection, and configuration fixes rather than a single retry.

What This Structured Flow Enables During Testing

- Running user-initiated SSO without depending on external IdP teams

- Triggering IdP-initiated flows in a controlled environment

- Reproducing specific error states instead of waiting for them to occur randomly

- Keeping redirect URIs, certificates, and metadata constant across attempts

- Inspecting signature, attribute, and assertion validation results in each run

- Observing the full request → response → validation → log → retry cycle

This turns a one-time authentication failure into a repeatable validation process rather than a trial-and-error fix.

Inspecting Authentication Logs and Event Timelines to Identify Root Causes

Authentication logs reveal what the UI hides. In the earlier scenario, the login screen only displayed “default role not configured,” but the real failure occurred earlier in the validation chain. UI messages usually summarize the final rejection, while logs show the entire sequence: redirect, assertion receipt, attribute validation, and authorization decisions. Without log inspection, developers often adjust the wrong configuration layer.

Event timelines replace guesswork with a traceable sequence. Rather than comparing raw XML or scattered console output, structured authentication logs present each step of the exchange in order. This makes it clear whether the break occurred during signature verification, attribute mapping, or user authorization, instead of assuming the cause is whatever the last visible error message mentions.

Key Surfaces to Inspect During Log Analysis

- Request and Response Payloads: confirm assertion structure and parameters

- Attribute Mapping Results: verify email, role, and identifiers exist

- Signature and Certificate Validation Steps: detect key mismatches or expiry

- Event Sequence Order: ensure redirects and callbacks occur correctly

- User State Information: active status, role assignment, and permissions

- Timestamps and Clock Drift: detect token validity or expiry mismatches
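Because log entries are not always emitted or displayed in order, a useful first step is to sort an attempt's steps by timestamp and take the earliest failure. The `TimelineStep` shape below is hypothetical; it mirrors the surfaces listed above rather than any real log format.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class TimelineStep:
    # Hypothetical shape of one step within a single authentication attempt.
    at: datetime
    stage: str  # e.g. "redirect", "assertion_received", "attributes", "authorization"
    ok: bool
    detail: str = ""


def first_failure(steps: list[TimelineStep]) -> Optional[TimelineStep]:
    """Return the earliest failing step after ordering by timestamp.

    The earliest failure is usually the root cause; later failures, including
    the one surfaced in the UI, are often consequences of it.
    """
    for step in sorted(steps, key=lambda s: s.at):
        if not step.ok:
            return step
    return None
```

In the opening scenario, this would point at a missing role attribute during mapping rather than at the “default role not configured” message recorded afterward.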

Why Log-Driven Debugging Works Better Than UI Messages

Logs convert ambiguous UI feedback into actionable protocol details. Instead of repeatedly retrying the login, developers can observe validation steps, adjust only the failing layer, and rerun the same structured testing flow. This keeps debugging focused on evidence rather than assumptions.

Interpreting SSO Error Codes Instead of Guessing From UI Messages

After opening authentication logs, developers usually see structured error identifiers rather than friendly UI text. While the login screen may show generic messages such as “Authentication Failed” or “User Not Authorized,” the system records protocol-level error codes that pinpoint the exact layer that broke: request formation, assertion validation, attribute mapping, or authorization logic. Reading these codes correctly prevents unnecessary configuration changes.

Mapping error codes to validation layers shortens debugging cycles. Instead of diffing entire payloads or blindly rotating certificates, teams can adjust only the configuration associated with the failing checkpoint. This shifts troubleshooting from exploratory trial-and-error to targeted validation and verification.
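As a minimal sketch of that mapping, the lookup below routes an error code to its validation layer and to the configuration area worth inspecting first. The error code strings are hypothetical placeholders, not codes documented by Scalekit or any IdP; substitute the codes your provider actually emits.

```python
from enum import Enum


class Layer(Enum):
    REQUEST = "request formation"
    ASSERTION = "assertion validation"
    ATTRIBUTES = "attribute mapping"
    AUTHORIZATION = "authorization"


# Hypothetical error codes for illustration only.
ERROR_LAYER = {
    "redirect_uri_mismatch": Layer.REQUEST,
    "acs_url_mismatch": Layer.REQUEST,
    "invalid_signature": Layer.ASSERTION,
    "audience_mismatch": Layer.ASSERTION,
    "assertion_expired": Layer.ASSERTION,
    "missing_attribute_email": Layer.ATTRIBUTES,
    "default_role_not_configured": Layer.AUTHORIZATION,
}

# Where to look first for each layer, following the failure categories earlier in this guide.
WHERE_TO_LOOK = {
    Layer.REQUEST: "redirect URI and ACS URL in both SP and IdP configuration",
    Layer.ASSERTION: "signing certificate, audience/issuer, metadata freshness, clock skew",
    Layer.ATTRIBUTES: "IdP attribute release and SP attribute mapping",
    Layer.AUTHORIZATION: "user state and default role assignment",
}


def triage(code: str) -> str:
    """Translate an error code into the single layer to adjust."""
    layer = ERROR_LAYER.get(code)
    if layer is None:
        return f"unknown code {code!r}: check the event timeline instead"
    return f"{layer.value}: check {WHERE_TO_LOOK[layer]}"
```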

Frequently Observed SSO Error Codes During Testing

How to Use Error Codes During Testing

- Treat the code as a validation checkpoint indicator, not just a label

- Cross-reference the code with the event timeline stage

- Adjust only the failing configuration layer instead of multiple settings

- Re-run the same structured testing flow to confirm the resolution

Error codes transform ambiguous login failures into specific validation signals. Instead of asking “why did this fail?” developers can quickly identify which step rejected the assertion and verify the fix using the same repeatable test cycle.

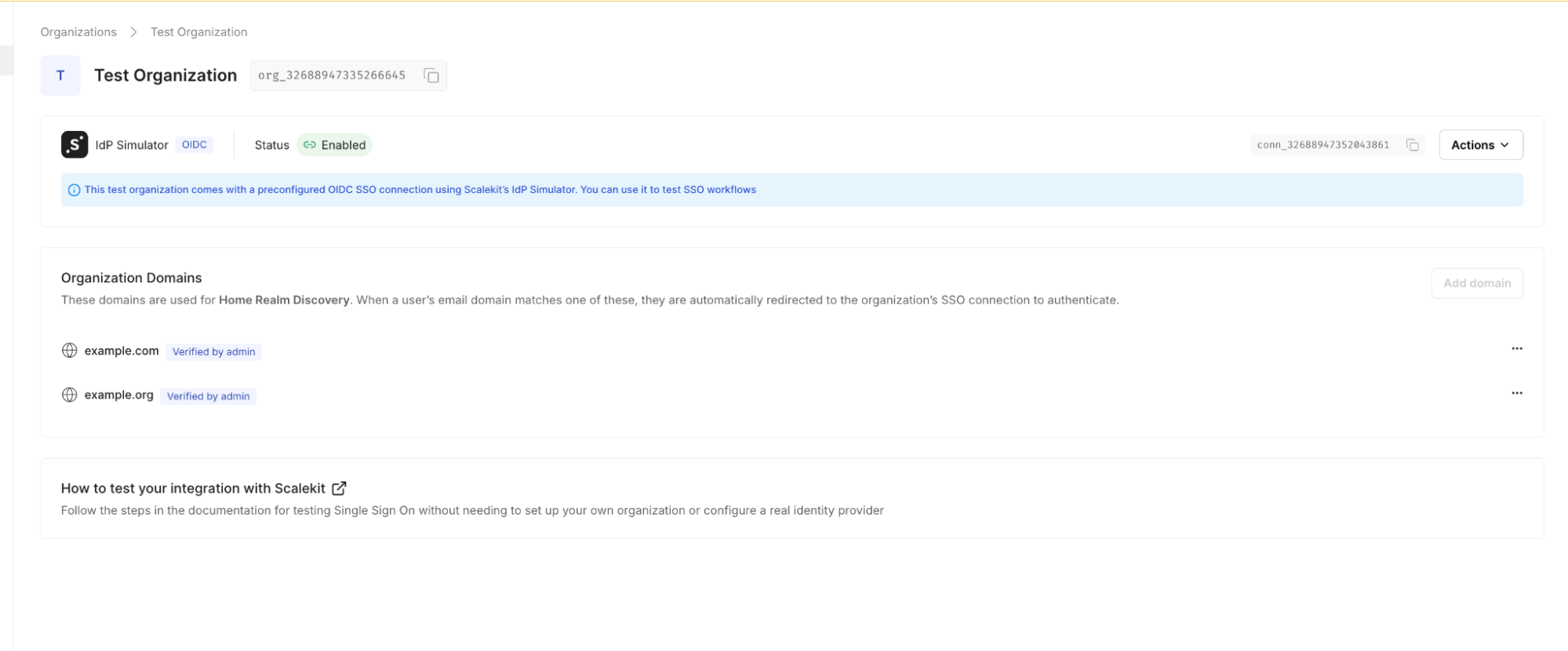

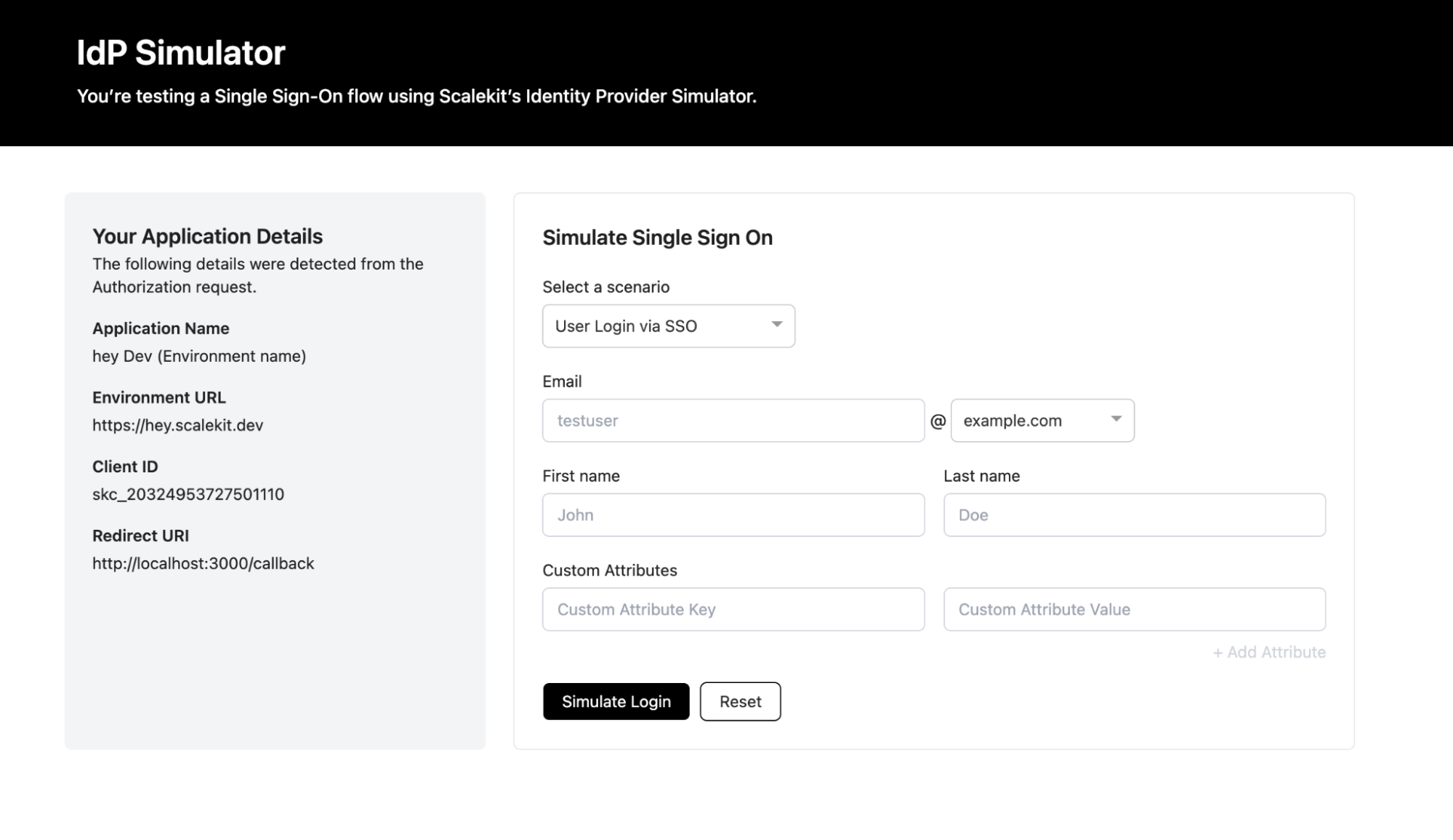

Using the Default Test Organization to Run SSO Flows Without Rewiring Code

Scalekit provides a default test organization that routes authentication through an IdP simulator. Instead of creating additional environments or duplicating configuration files, replace the production organization ID with the test organization ID provided. Once the test ID is active, authentication attempts are routed through the simulator without changing redirect URLs, certificates, or metadata files. The authentication path in the application remains the same, and only the organization context changes.
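A minimal Python sketch of this idea, assuming the application keeps its SSO settings in one immutable config object: the test organization ID replaces only the `organization_id` field, so the redirect URI and client ID stay identical. The `SSOConfig` class, the placeholder values, and the `SSO_TEST_ORG_ID` environment variable are hypothetical names.

```python
import os
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SSOConfig:
    # Hypothetical application-side SSO settings.
    organization_id: str
    redirect_uri: str
    client_id: str


PROD = SSOConfig(
    organization_id="org_prod_placeholder",
    redirect_uri="https://app.example.com/auth/callback",
    client_id="client_placeholder",
)


def active_config(base: SSOConfig) -> SSOConfig:
    """Swap in the test organization ID when one is set; nothing else changes."""
    test_org = os.environ.get("SSO_TEST_ORG_ID")  # hypothetical variable name
    return replace(base, organization_id=test_org) if test_org else base
```

Keeping the config frozen means the only way to change context is an explicit `replace`, which makes it hard to alter redirect or callback settings by accident while testing.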

What You Can Test After Switching the Organization ID

- User-initiated SSO from the application login screen

- IdP-initiated SSO without external enterprise coordination

- Attribute validation cases, such as missing roles or emails

- Protocol error states, such as assertion URL mismatches or signature failures

- Repeated authentication attempts using the same configuration

Test Organization in Dashboard

The organization ID shown on the dashboard is used to route authentication through the simulator instead of a live enterprise IdP.

Once the test organization ID is available, it can be supplied when generating the authorization URL. This routes the authentication request through the IdP simulator while keeping the application’s redirect and callback logic unchanged.

The following example illustrates this using a Scalekit SDK-based authorization flow. The exact method may vary depending on the SDK or integration approach being used.
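As a stand-in that does not depend on any SDK, this sketch builds an OAuth 2.0 / OIDC-style authorization URL with the Python standard library and checks `state` on the callback. The `/oauth/authorize` path and the `organization_id` query parameter are assumptions for illustration; in practice, use your SDK's authorization URL helper.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorization_url(base_url: str, client_id: str, redirect_uri: str,
                            organization_id: str) -> tuple[str, str]:
    """Return (url, state). Only organization_id differs between test and prod."""
    state = secrets.token_urlsafe(16)  # persist server-side, compare on callback
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid profile email",
        "state": state,
        "organization_id": organization_id,  # assumed parameter name
    }
    # "/oauth/authorize" is an assumed path for illustration.
    return f"{base_url}/oauth/authorize?{urlencode(params)}", state


def state_matches(callback_url: str, expected_state: str) -> bool:
    """On the callback, reject responses whose state does not match."""
    values = parse_qs(urlparse(callback_url).query).get("state", [])
    return len(values) == 1 and secrets.compare_digest(values[0], expected_state)
```

Because only `organization_id` varies, switching back to production is a one-value change, and the callback's `state` check is exercised in both contexts.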

This approach allows the same login entry point in the application to initiate simulated SSO scenarios, including both successful authentications and intentionally triggered error cases, without changing production redirects or callbacks.

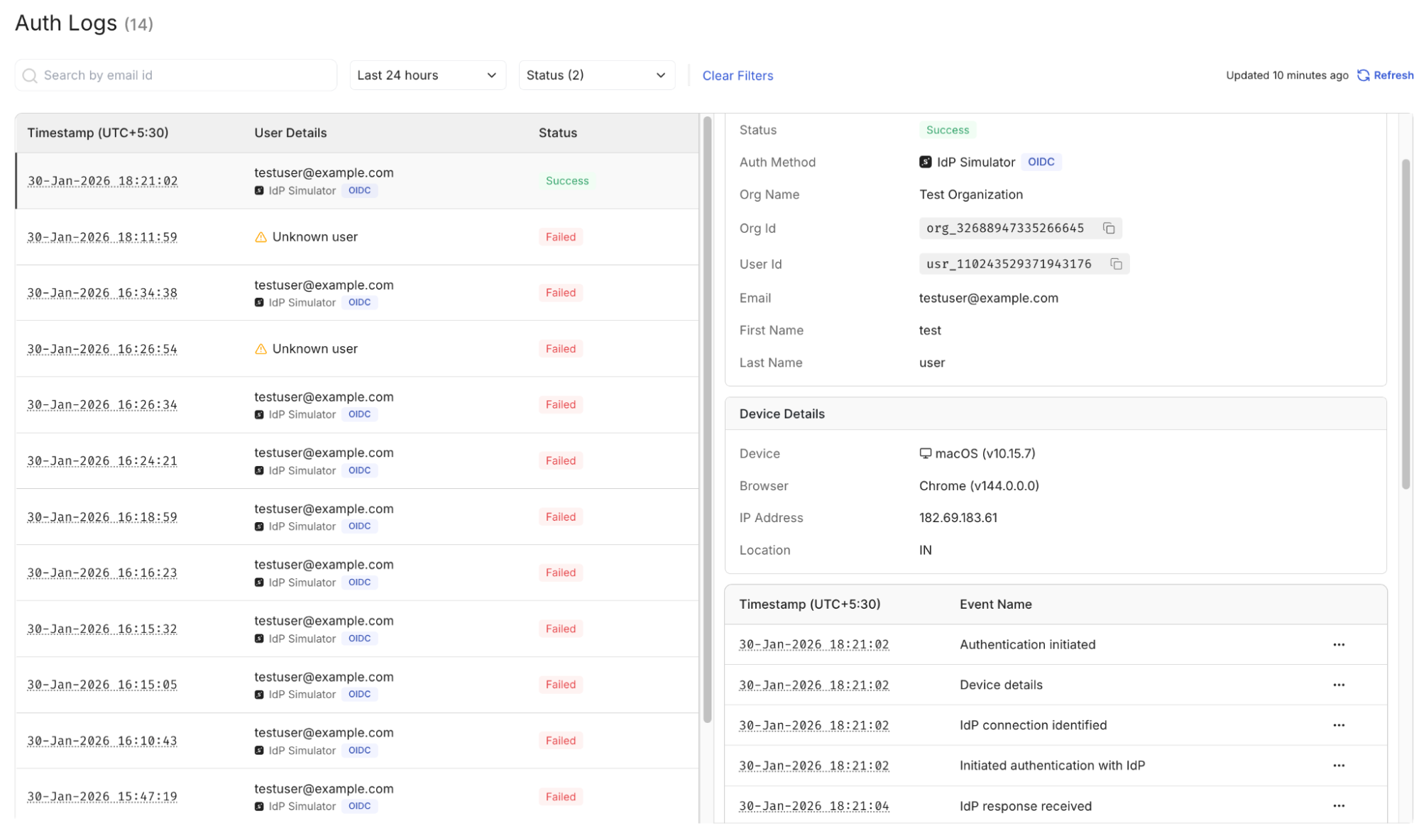

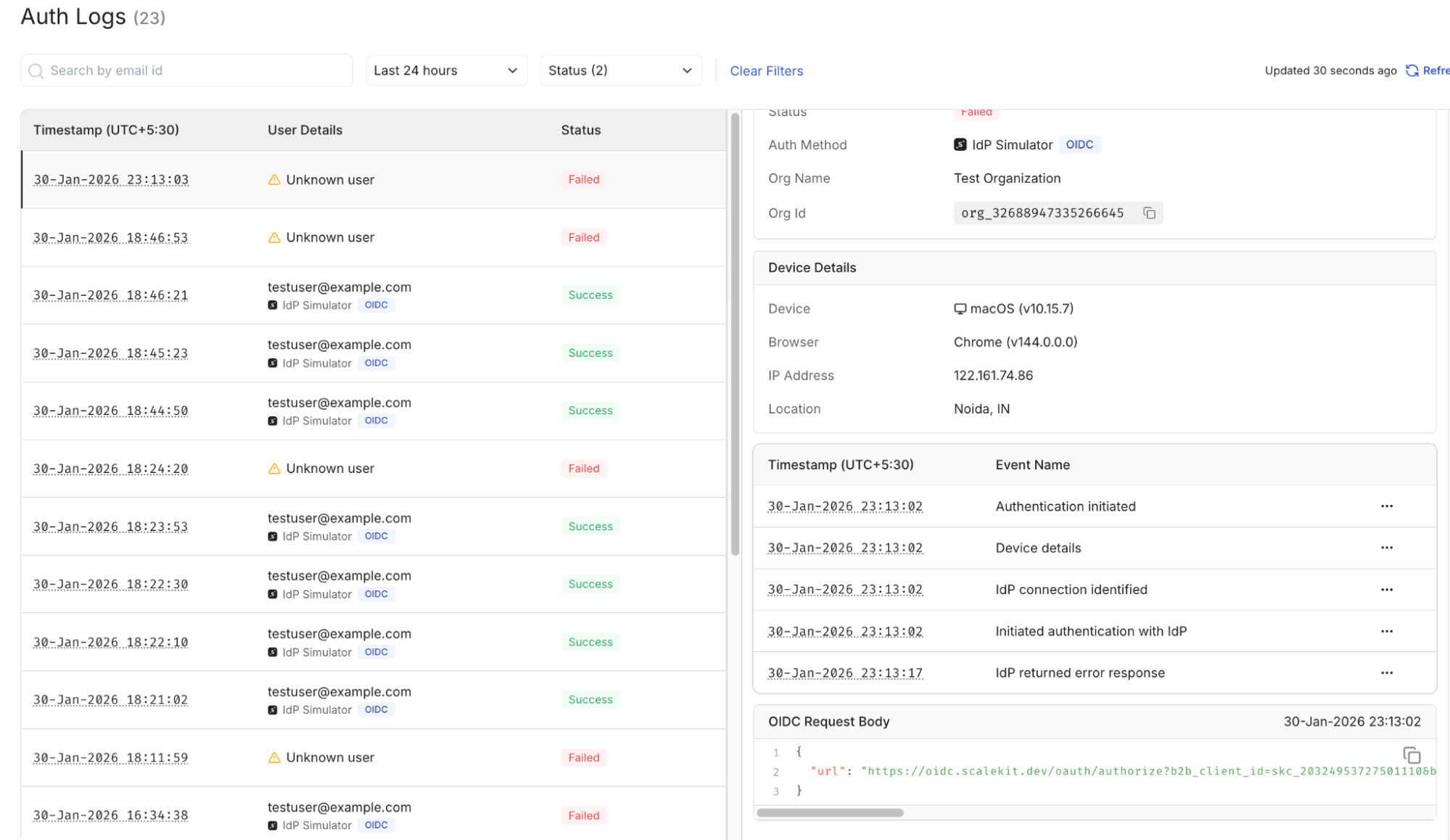

Inspecting Authentication Attempts and Error States in the Dashboard

After running an SSO attempt using the test organization, the next step is to review how the authentication exchange was processed. The dashboard surfaces each login attempt as an individual event, allowing you to inspect whether the request succeeded, failed during validation, or was interrupted mid-flow. This removes the need to rely only on browser errors or console logs.

Authentication attempts are typically grouped with timestamps, request identifiers, and status labels such as success, failure, or not completed. Selecting an event reveals the sequence of actions that occurred during the exchange, including redirects, assertion handling, and attribute validation results. Reviewing this view immediately after a test run helps confirm whether the observed behavior matches the expected outcome.

Common Fields Visible in an Authentication Event

- Status: success, failure, or pending

- Request ID / Event ID: unique identifier for correlation

- Timestamp: initiation and completion time

- User Identifier: email or mapped NameID

- Authentication Method: SAML or OIDC

- Organization Context: test or production organization
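If you export or script against these events, a small per-organization summary helps confirm that failures belong to the test context and identifies the most recent one to open. The `AuthEvent` class below is a hypothetical shape that mirrors the fields listed above, not a real dashboard export format.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class AuthEvent:
    # Hypothetical record mirroring the dashboard fields listed above.
    event_id: str
    status: str          # "success" | "failure" | "pending"
    timestamp: datetime
    user: str            # email or mapped NameID
    method: str          # "SAML" | "OIDC"
    organization: str    # test or production organization


def summarize(events: list[AuthEvent], organization: str) -> tuple[Counter, Optional[AuthEvent]]:
    """Count statuses for one organization and return its most recent failure."""
    scoped = [e for e in events if e.organization == organization]
    counts = Counter(e.status for e in scoped)
    failures = [e for e in scoped if e.status == "failure"]
    latest_failure = max(failures, key=lambda e: e.timestamp, default=None)
    return counts, latest_failure
```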

Opening a specific event exposes deeper protocol details such as request parameters, response payload summaries, attribute mappings, and validation checkpoints. This view is useful when a UI error message is too generic or when multiple login attempts produce different outcomes.

Reviewing these event views after each simulated login attempt lets you confirm that attribute values, assertion URLs, and signatures are processed as expected before moving to the production SSO configuration.

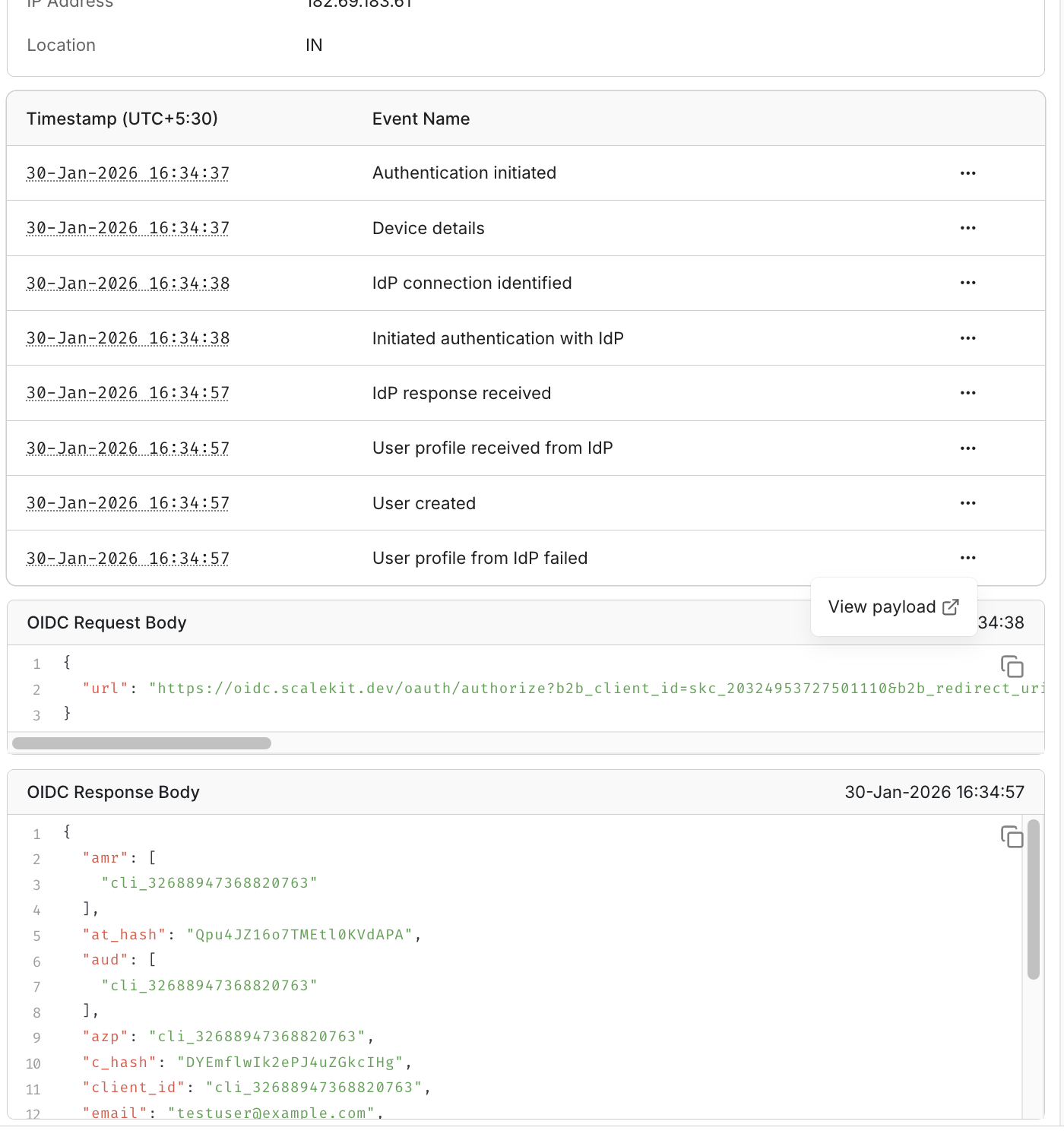

Reviewing Request, Response, and Attribute Payload Details

After opening an individual authentication event, the next step is inspecting the request and response payload summaries. This view shows the structured data exchanged during the SSO flow, not just the status label. Instead of raw XML or token strings, the dashboard surfaces key values such as identifiers, attributes, and validation outcomes in a readable format.

Payload inspection becomes necessary when authentication reaches the assertion stage but fails during attribute mapping or authorization. The objective is to confirm that expected fields, such as email, NameID, or default role, are present and correctly formatted before modifying certificates, metadata, or redirect URLs.

Key Areas to Check in Payload Views

- Request Parameters – ACS URL, issuer, relay state

- Response Attributes – email, NameID, role, group values

- Assertion Details – audience, issuer, validity timestamps

- Signature Status – whether the assertion passed verification

- Attribute Mapping Output – how returned values map to user fields
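Attribute-mapping gaps often come from naming rather than missing data: one IdP sends `email`, another `mail`, another a claim URI. The sketch below assumes a hypothetical `ATTRIBUTE_MAP` and required-field list, and reports missing and malformed values separately, so you can tell whether the IdP omitted an attribute or the mapping failed to find it.

```python
import re
from typing import Optional

# Hypothetical mapping from IdP attribute names to application user fields.
ATTRIBUTE_MAP = {
    "email": ["email", "mail",
              "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress"],
    "role": ["role", "groups"],
}
REQUIRED = ("email", "role")
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose sanity check


def map_attributes(raw: dict[str, list[str]]) -> tuple[dict[str, str], list[str]]:
    """Return (mapped user fields, problems) for one assertion's attributes."""
    mapped: dict[str, str] = {}
    problems: list[str] = []
    for field, candidates in ATTRIBUTE_MAP.items():
        # Take the first value of the first candidate name the IdP actually sent.
        value: Optional[str] = next((raw[n][0] for n in candidates if raw.get(n)), None)
        if value is not None:
            mapped[field] = value
    for field in REQUIRED:
        if field not in mapped:
            problems.append(f"missing required attribute: {field}")
    if "email" in mapped and not EMAIL_RE.match(mapped["email"]):
        problems.append(f"malformed email: {mapped['email']!r}")
    return mapped, problems
```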

Inspecting payload summaries immediately after each test attempt confirms whether the Identity Provider is sending the expected attributes and whether the application is interpreting them correctly.

Testing IdP-Initiated SSO and Intentional Error Scenarios

After validating user-initiated login and reviewing payload details, the next step is to test flows that start from the Identity Provider and intentionally trigger failures. This ensures the application handles authentication correctly, even when login does not begin from the product’s own login screen and when validation rules are not met.

IdP-initiated testing confirms that the Assertion Consumer Service endpoint, audience values, and attribute mappings are accepted when the redirect originates externally. Intentional error scenarios help verify how the system responds to missing attributes, incorrect assertion URLs, or signature mismatches without waiting for these conditions to occur during real customer onboarding.

What to Validate During IdP-Initiated Testing

- Assertion Consumer Service (ACS) URL handling

- Audience and issuer validation outcomes

- Attribute mapping consistency

- Default role or permission assignment

- Authentication event status visibility in the dashboard
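In IdP-initiated SSO there is no prior authentication request, so the SAML response carries no `InResponseTo` value and the SP cannot tie it to a request it issued. The destination, audience, and issuer checks therefore carry more weight. This sketch assumes a hypothetical, already-parsed `SamlResponseSummary` whose signature was verified separately.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SamlResponseSummary:
    # Hypothetical parsed view of a SAML response; signature assumed verified elsewhere.
    destination: str
    audience: str
    issuer: str
    in_response_to: Optional[str]  # None for IdP-initiated (unsolicited) responses


def check_idp_initiated(resp: SamlResponseSummary, *, acs_url: str, sp_entity_id: str,
                        idp_entity_id: str, allow_unsolicited: bool) -> list[str]:
    """Return every problem found, so one run shows all misaligned values."""
    errors = []
    if resp.in_response_to is None and not allow_unsolicited:
        errors.append("unsolicited response rejected: IdP-initiated SSO is disabled")
    if resp.destination != acs_url:
        errors.append(f"destination {resp.destination!r} does not match ACS URL")
    if resp.audience != sp_entity_id:
        errors.append(f"audience {resp.audience!r} does not match SP entity ID")
    if resp.issuer != idp_entity_id:
        errors.append(f"issuer {resp.issuer!r} does not match configured IdP")
    return errors
```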

Intentional Error Scenarios to Trigger

When simulating failures, change only one validation layer at a time so the resulting error can be traced to a single cause. Avoid combining multiple changes during a single test run.

- Remove a required attribute, such as email or role

- Modify the assertion URL to simulate callback mismatches

- Use outdated or incorrect metadata to test validation behavior

- Interrupt the redirect flow to observe incomplete authentication handling
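The one-change-at-a-time rule can be encoded directly: copy a known-good baseline, apply a single mutation per scenario, and confirm that each scenario produces exactly one error. The baseline fields, error names, and the certificate comparison standing in for signature verification are all hypothetical simplifications.

```python
import copy
from typing import Callable

# Hypothetical known-good assertion, e.g. captured from a successful simulator run.
BASELINE = {
    "attributes": {"email": "jane@example.com", "role": "member"},
    "destination": "https://app.example.com/sso/acs",
    "signing_cert": "current-cert",
}
EXPECTED_ACS = "https://app.example.com/sso/acs"
TRUSTED_CERT = "current-cert"  # stand-in for the certificate loaded from metadata


def validate(assertion: dict) -> list[str]:
    """Simplified checks; each maps to one validation layer."""
    errors = []
    for field in ("email", "role"):
        if field not in assertion["attributes"]:
            errors.append(f"missing_{field}")
    if assertion["destination"] != EXPECTED_ACS:
        errors.append("acs_mismatch")
    if assertion["signing_cert"] != TRUSTED_CERT:
        errors.append("signature_invalid")
    return errors


# Each scenario changes exactly one thing, mirroring the list above.
SCENARIOS: dict[str, Callable[[dict], object]] = {
    "remove_email": lambda a: a["attributes"].pop("email"),
    "wrong_acs": lambda a: a.update(destination="https://app.example.com/wrong"),
    "stale_metadata": lambda a: a.update(signing_cert="old-cert"),
}


def run_scenarios() -> dict[str, list[str]]:
    if validate(BASELINE):
        raise ValueError("baseline must pass before injecting errors")
    results = {}
    for name, mutate in SCENARIOS.items():
        assertion = copy.deepcopy(BASELINE)  # never mutate the shared baseline
        mutate(assertion)
        results[name] = validate(assertion)
    return results
```

If any scenario reports more than one error, the mutation touched more than one layer and should be split.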

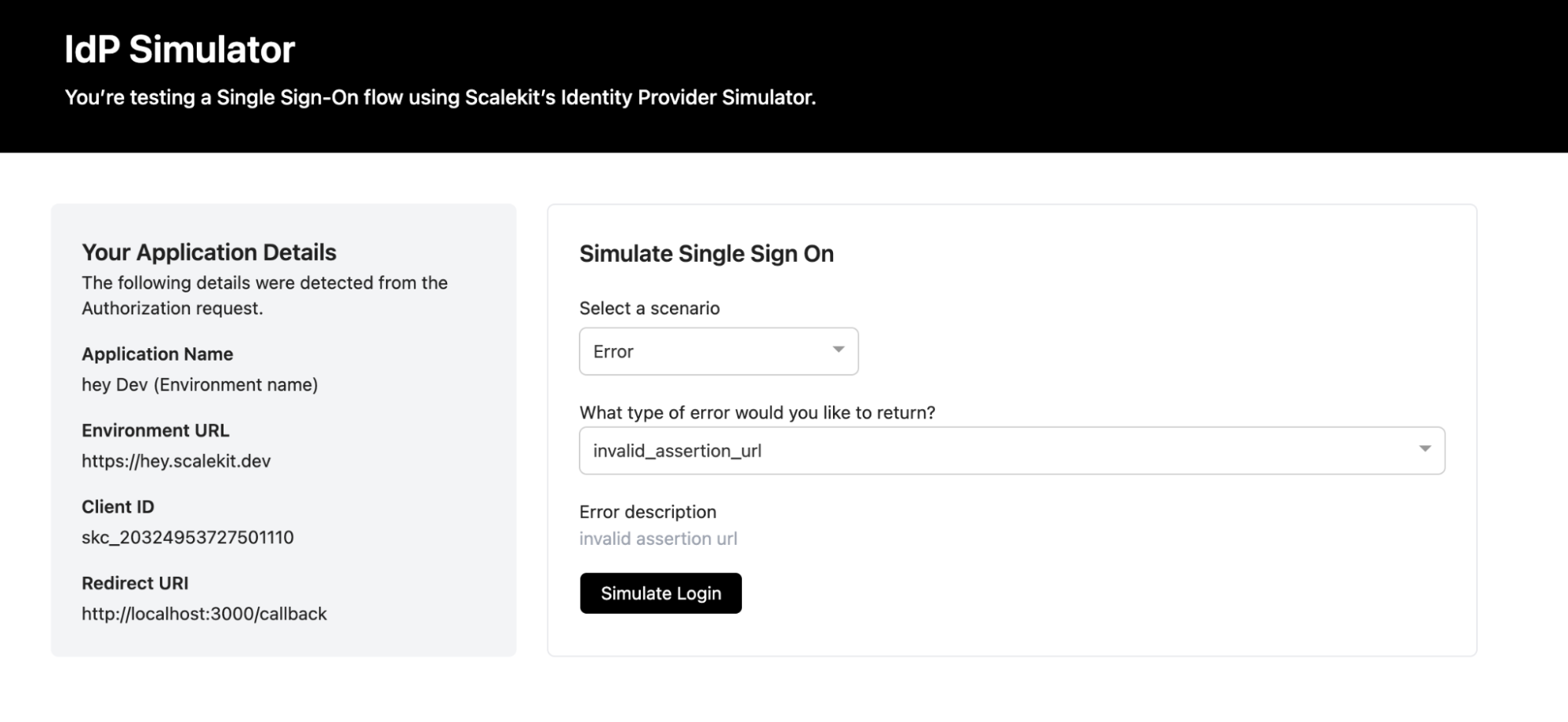

This view initiates authentication directly with the Identity Provider instead of the application's login screen, enabling external redirect validation.

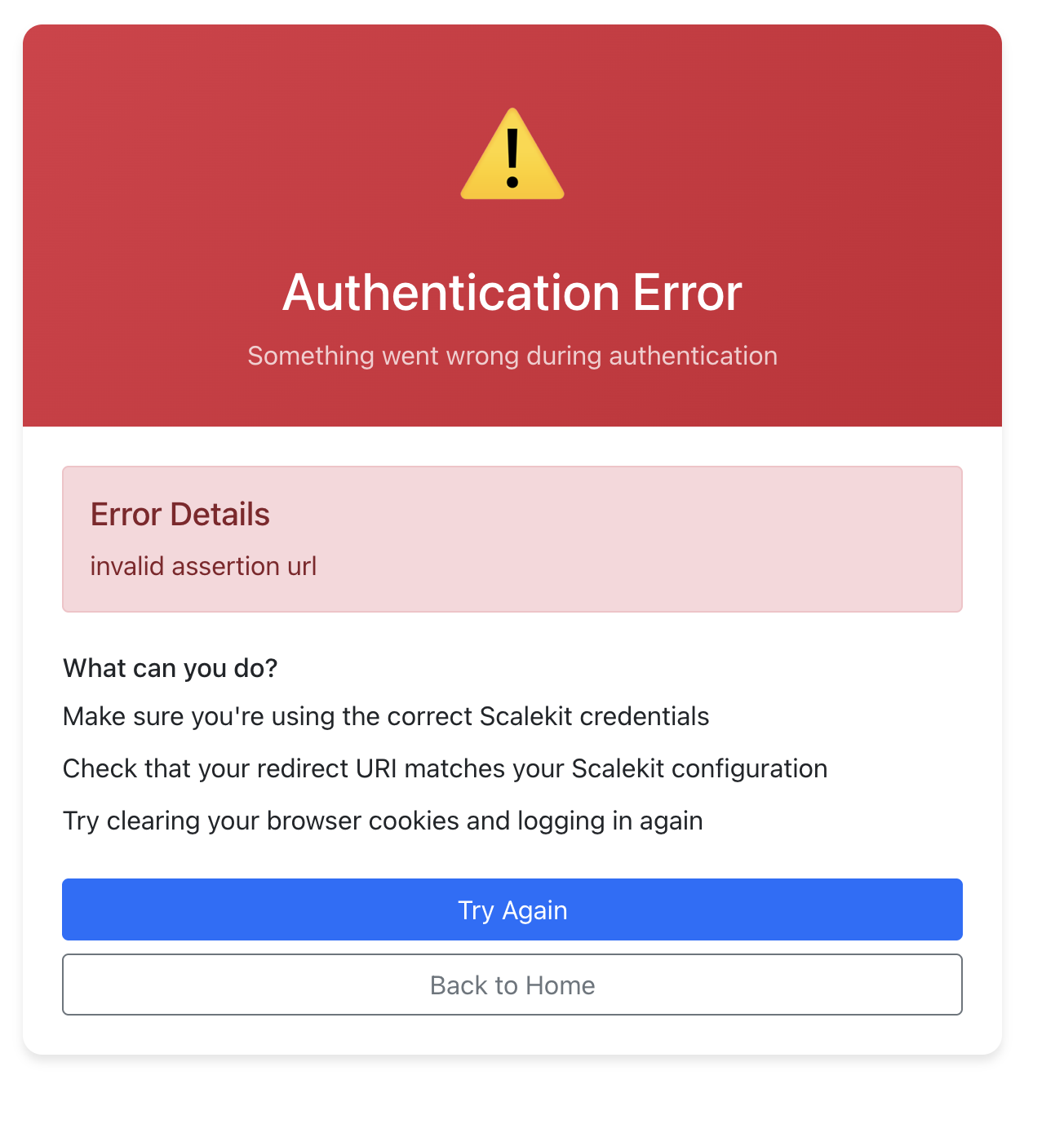

This screen shows a rejected authentication attempt with visible error codes and validation checkpoints.

This error is intentionally triggered using the IdP simulator to replicate a validation failure during testing and observe how it appears in the authentication logs.

Inspecting the log entry reveals which validation layer rejected the assertion: attribute mapping, signature verification, or authorization.

Since this failure was intentionally created using the simulator, the next step is to remove or correct the simulated change rather than modify real production settings. Re-enable the removed attribute, revert the temporary change to the assertion URL, or switch back to the valid metadata used before the test.

Only the specific validation layer that was altered for simulation should be updated. Avoid changing multiple parameters at once, as the purpose of this step is to confirm that the same authentication path succeeds once the intentional error condition is cleared.

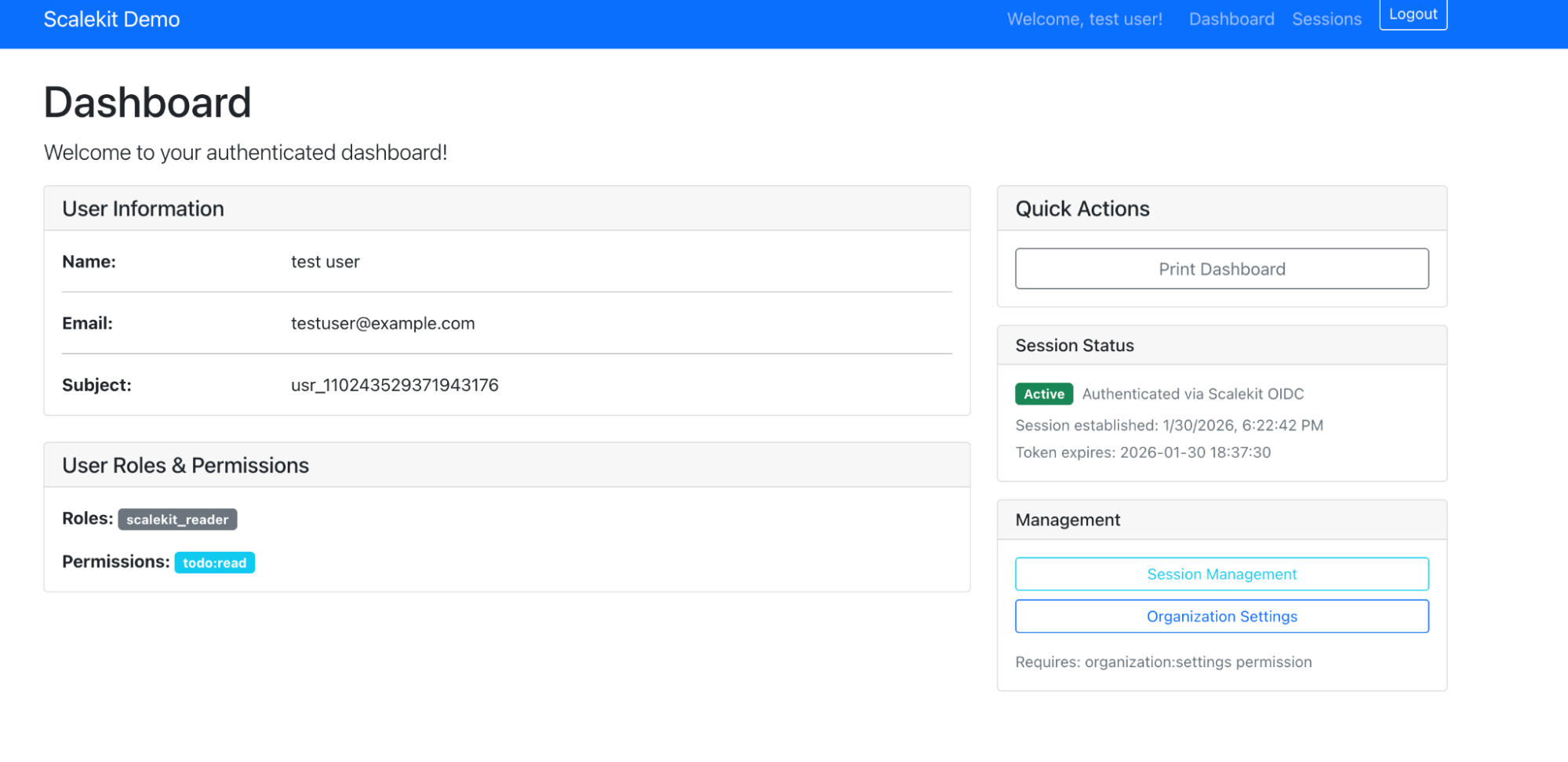

Re-running the same authentication flow after removing the simulated error condition should now result in a successful login. This confirms that the earlier failure was tied to the intentionally introduced validation change rather than a deeper configuration issue.

With both failure and success states observed under the same configuration path, the SSO testing loop is considered validated.

Final Verification Checklist Before Moving to Production

After testing user-initiated and IdP-initiated flows, as well as intentional error scenarios, the final step is confirming that the corrected configuration behaves consistently. This stage is verification, not exploration. The same test organization and authentication path that previously produced failures should now complete without attribute gaps, signature errors, or redirect mismatches.

The focus is on consistency across repeated attempts, not a single successful login. If one attempt succeeds while another fails under identical conditions, the configuration layer remains unstable. Verification ensures that assertions are accepted, attributes map correctly, and authorization rules apply without manual overrides.

Final Validation Checklist

- Redirect and callback URLs resolve without loops

- Signature verification passes with the current certificate

- Required attributes such as email and role are present

- Default role or permission assignment works as expected

- Authentication events show a complete success path

- Previously observed error codes no longer appear

- Both user-initiated and IdP-initiated logins succeed repeatedly
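Because the checklist is about consistency rather than a single pass, it helps to run each flow several times and report any item that failed on any run. In this sketch, each flow is a hypothetical callable returning checklist results as `{item: passed}`; wiring it to real login attempts is left to your test setup.

```python
from typing import Callable

Checklist = dict[str, bool]  # e.g. {"no_redirect_loop": True, "signature_ok": True}


def verify_stable(flows: dict[str, Callable[[], Checklist]], runs: int = 5) -> dict[str, list[str]]:
    """Run every flow `runs` times and list the checklist items that ever failed.

    A flow counts as stable only if its list is empty: a pass followed by a
    failure under identical settings is still reported.
    """
    report: dict[str, list[str]] = {}
    for flow_name, run_once in flows.items():
        failed: set[str] = set()
        for _ in range(runs):
            results = run_once()
            failed.update(item for item, passed in results.items() if not passed)
        report[flow_name] = sorted(failed)
    return report
```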

Running this checklist under the same organization context and configuration path confirms that the tested setup behaves predictably before switching back to the production organization.

Conclusion

SSO integrations rarely fail because of missing application logic. They usually fail due to minor configuration mismatches that only surface during real authentication attempts, such as missing attributes, incorrect assertion URLs, certificate drift, or role-mapping gaps. These issues can be confusing at first because redirects work and responses appear valid, yet a single validation layer silently rejects the assertion.

A structured testing approach changes how these failures are handled. Running authentication through a dedicated test organization, reviewing authentication events, inspecting payload summaries, and interpreting structured error codes provides visibility into each step of the exchange. Instead of repeatedly retrying the login or modifying multiple settings at once, developers can isolate the exact checkpoint that failed and adjust only the relevant configuration layer.

A stable SSO setup is defined by consistency rather than a one-time success. When the same authentication path succeeds repeatedly under controlled testing conditions, enabling SSO for production organizations becomes predictable instead of uncertain. The objective is not only to ensure login succeeds, but also to understand why it succeeds and to ensure the same validation path continues to behave correctly as configurations evolve.

Practical Takeaways

- Use a test organization to isolate authentication experiments

- Review authentication events and timelines after each attempt

- Inspect request and response payload summaries before rotating certificates

- Interpret error codes to locate the failing validation layer

- Re-run the same authentication path to confirm fixes

- Validate both user-initiated and IdP-initiated logins

A working SSO setup is defined by consistent results across repeated authentication attempts using the same configuration path that production users will follow, rather than a single successful login screen.

FAQs

1. What is a Test Organization used for in SSO testing?

A test organization provides an isolated environment to run authentication attempts without affecting production users. In platforms like Scalekit, login flows are routed through a simulator so developers can safely validate redirects, attributes, and error scenarios.

2. Do I need a real enterprise IdP to test SSO?

Not necessarily. Many SSO platforms, including Scalekit, provide an IdP simulator or test-connection feature that supports both SP-initiated and IdP-initiated flows without coordinating with an external identity provider.

3. Where can authentication failures be inspected during testing?

Authentication attempts usually appear in a dashboard as individual events with timestamps, request IDs, and status labels. In Scalekit, these events also include payload summaries and validation checkpoints for deeper inspection.

4. Why does the login fail even when the XML or token looks valid?

SSO validation includes multiple layers, such as signature checks, attribute mapping, audience matching, and timestamp verification. A well-formed response can still fail if one validation layer rejects it.

5. How can missing attributes like email or role be diagnosed?

Inspecting request and response payload summaries inside authentication events helps confirm whether required attributes were sent and correctly mapped before modifying certificates or metadata.

6. Can IdP-initiated SSO flows be tested without a customer IdP?

Yes. Simulators and test triggers allow developers to validate Assertion Consumer Service URLs, audience checks, and attribute handling without depending on a customer’s identity provider configuration.

7. When should SSO testing be repeated?

Testing should be rerun whenever certificates rotate, metadata changes, domains are added, or default roles are updated. Configuration drift can reintroduce previously resolved validation issues.
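One lightweight way to notice such drift is to fingerprint the settings that affect validation and rerun the test flow whenever the fingerprint changes. The field names below are hypothetical; the technique is a SHA-256 hash over canonical JSON.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Stable hash of the SSO settings that affect validation."""
    # sort_keys and fixed separators make the JSON canonical, so key order is irrelevant.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_retest(current: dict, last_verified_fingerprint: str) -> bool:
    return config_fingerprint(current) != last_verified_fingerprint
```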

8. How do I confirm that an SSO configuration is stable?

A configuration is considered stable when repeated authentication attempts with the same flow and settings consistently succeed without triggering validation errors or missing-attribute warnings.