Should you build MCPs before APIs?

In 2025, AI agent compatibility is table stakes, irrespective of what you're building. And the tech giants are toeing the MCP line to support AI agents. What was once an experimental idea is quickly becoming the default for enterprise platforms.

Major dev tool and SaaS companies are on board: for example, Atlassian launched a remote MCP server for Jira and Confluence that lets Claude summarize and create issues securely (Cloudflare blog).

Stripe built an "agent toolkit" and hinted that MCP could become the default way services are accessed in the near future.

Cloudflare's platform alone has seen over 10 leading companies deploy MCP servers to bring AI capabilities to their users.

That brings us to a practical question every modern engineering team faces today: Should you stick with traditional APIs, or dive straight into using the Model Context Protocol (MCP)?

Let's unpack this together.

Short primer on MCP

Think of MCP as a universal language designed specifically for AI agents (if you want a done-to-death comparison, it's the "USB-C" of the AI agent era). It helps AI agents connect easily to different tools and data sources. MCP emerged because traditional APIs (like REST) weren't built with AI agents in mind. They were made for humans who read documentation and write code to connect applications manually.

MCP flips this around. It's built for AI-first interactions, allowing agents to dynamically discover available tools, maintain conversations over multiple steps, and handle complex tasks efficiently.

Why consider MCP-first?

Built for AI from day one

Building MCP-first bakes in AI-readiness. Your service exposes its capabilities (functions, data, workflows) through MCP so that AI assistants can use them autonomously. This future-proofs your product in an era where users increasingly rely on AI agents to interact with software. MCP ensures your product is "visible" to AI by providing a standard interface for real-time inventory, content, or services.

Standardization and consistency

MCP provides a universal, self-describing interface that any compliant AI agent can understand. With MCP, an AI client can query any MCP-compliant server at runtime to discover the available tools, along with their descriptions and input schemas (via protocol methods such as tools/list). Traditional APIs, on the other hand, each have custom endpoints, auth schemes, and docs that developers (or fine-tuned models) must handle separately.
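As a rough illustration of runtime discovery, the sketch below builds a tools/list request and parses a response shaped the way the MCP specification describes it: a JSON-RPC 2.0 message whose result holds a tools array, with each entry carrying a name, a description, and a JSON Schema inputSchema. The search_products tool and its schema are hypothetical, and the response is hard-coded rather than fetched from a real server, so check field names against the spec revision you target.

```python
import json

# A tools/list request as an MCP client would send it (JSON-RPC 2.0).
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {}}

# A hypothetical server response. Each tool advertises a name, a
# human-readable description, and a JSON Schema describing its inputs.
response_text = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "search_products",
                "description": "Search the product catalog by keyword.",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "query": {"type": "string"},
                        "max_price": {"type": "number"},
                    },
                    "required": ["query"],
                },
            }
        ]
    },
})


def discover_tools(raw: str) -> dict[str, dict]:
    """Parse a tools/list response into {tool name: input schema}."""
    message = json.loads(raw)
    return {tool["name"]: tool["inputSchema"] for tool in message["result"]["tools"]}


tools = discover_tools(response_text)
print(sorted(tools))                         # ['search_products']
print(tools["search_products"]["required"])  # ['query']
```

An agent can feed these schemas straight to the model, which is what removes the need for a hand-written wrapper per API.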

Faster integration with more tools

Instead of writing custom API wrappers or function-calling definitions for each model integration, you expose a single MCP interface that any compliant AI client (Anthropic's Claude, OpenAI-based agents, open-source agents, etc.) can tap into. Rather than juggling multiple APIs with varying formats, MCP gives you one standardized format, which speeds up development and avoids the quirks of each specific API.

The payoff is quicker integration, not just with one AI, but an entire ecosystem of agent tools and AI clients that speak MCP. This way, your team spends less time grinding out API connectors and more time on core product features.

Thriving MCP community

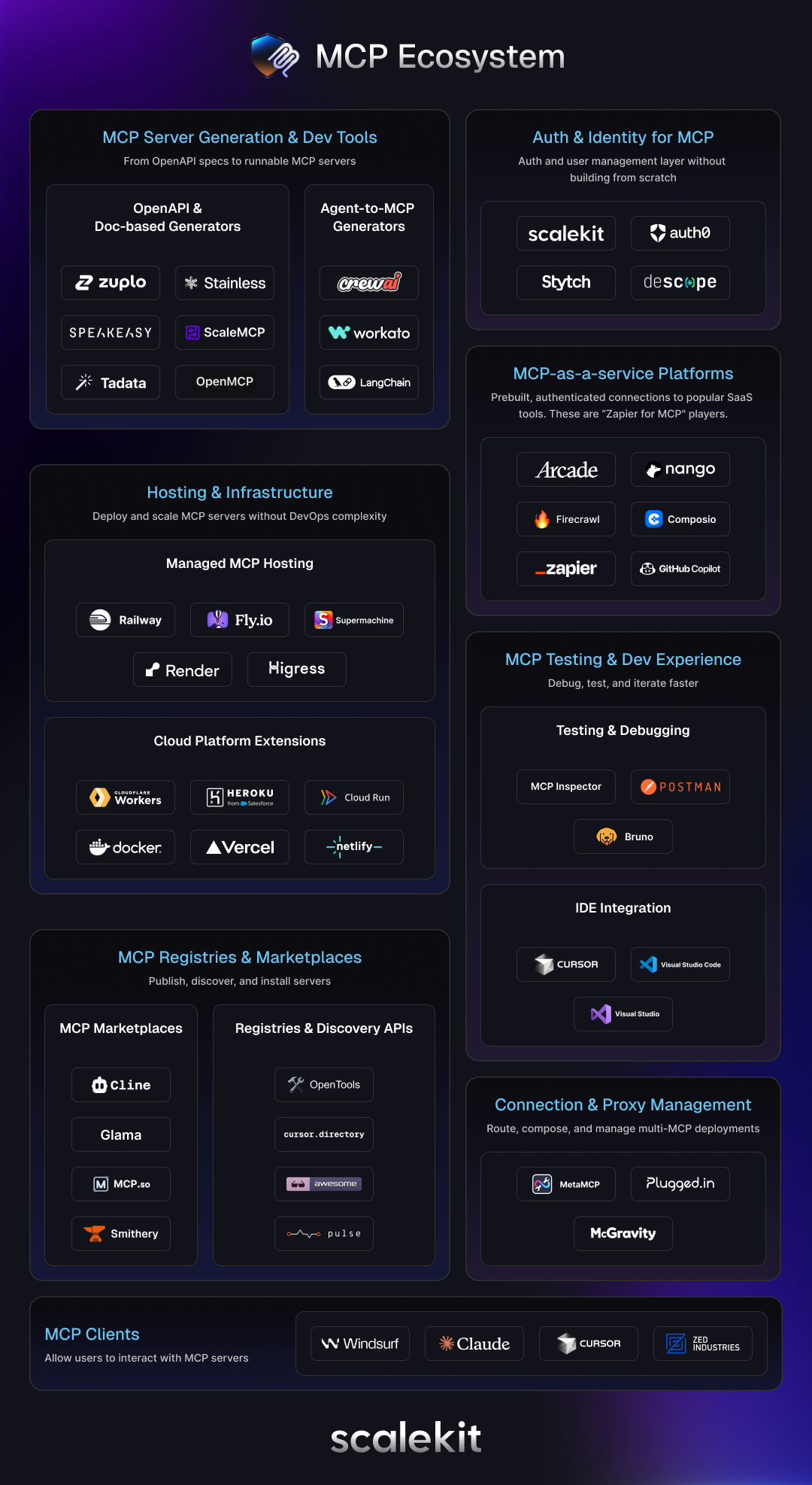

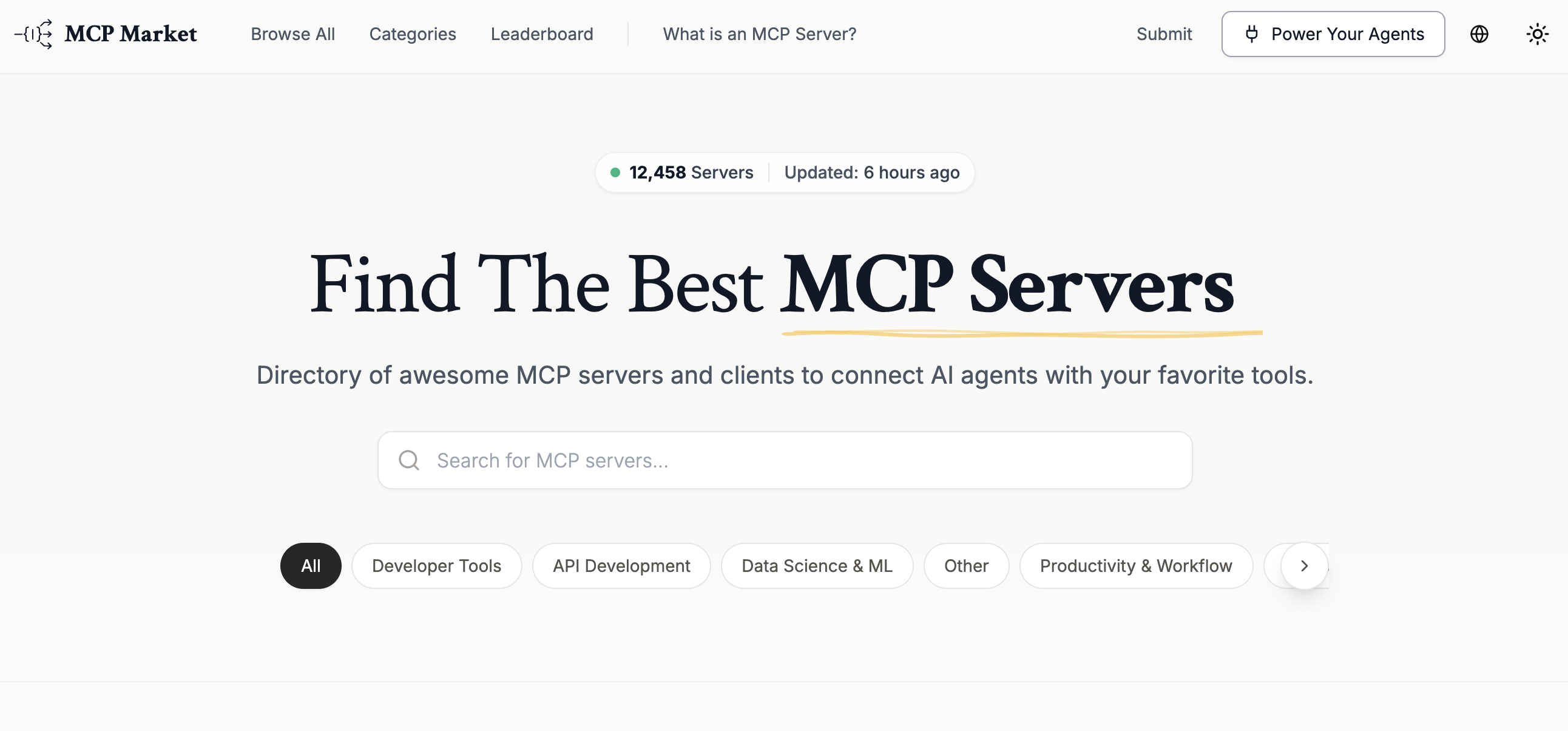

Going MCP-first aligns you with a surging industry momentum and community. Since Anthropic open-sourced the Model Context Protocol in late 2024, adoption has skyrocketed. (On a side note, check out our MCP market map here)

The open source community has been booming: MCP Market lists over 12,000 MCP servers; MCP.so has indexed over 16,000 MCP servers. In short, choosing MCP now means plugging into a rich and growing ecosystem of third-party libraries, forums, and partnerships. Developer communities on platforms like Discord and Reddit are actively buzzing with thousands of users.

Use cases for MCP-first

AI-native applications: If your product is inherently built around AI or agents performing tasks, MCP-first is a natural fit. These are tools designed from the ground up for AI-agent interaction rather than human-driven UIs.

Workflow automation and toolchains: Platforms whose value lies in chaining tasks across multiple tools or services should strongly consider MCP. MCP servers let an AI agent coordinate multiple tools in one continuous conversation, deciding dynamically which tool to use next.
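A minimal sketch of that chaining, assuming two hypothetical tools (find_ticket and create_branch) served by local stub functions instead of a real MCP server. Each step is wrapped in a JSON-RPC tools/call request, and the tool's result comes back as a text content item. In a real deployment the model would choose the next tool; here the order is fixed so the example stays deterministic.

```python
import itertools
import json


# Hypothetical tools exposed by a workflow server (stubs for illustration).
def find_ticket(args):
    return {"ticket_id": "T-42", "title": args["query"]}


def create_branch(args):
    return {"branch": f"fix/{args['ticket_id'].lower()}"}


TOOLS = {"find_ticket": find_ticket, "create_branch": create_branch}
_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def call_tool(name, arguments):
    """Build a tools/call request and dispatch it to a local stub."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    payload = json.loads(json.dumps(request))  # round-trip as if sent over the wire
    result = TOOLS[payload["params"]["name"]](payload["params"]["arguments"])
    # Tool results are returned as a list of content items; text is the common case.
    return {
        "jsonrpc": "2.0",
        "id": payload["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False},
    }


def text_result(response):
    return json.loads(response["result"]["content"][0]["text"])


# Step 1 feeds step 2: the ticket found by the first call names the branch.
ticket = text_result(call_tool("find_ticket", {"query": "login timeout"}))
branch = text_result(call_tool("create_branch", {"ticket_id": ticket["ticket_id"]}))
print(branch)  # {'branch': 'fix/t-42'}
```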

Enterprise integration tools: Enterprise software that connects internal systems and exposes them for AI consumption has a strong strategic case for MCP-first. Think of data integration hubs, API gateways, or internal developer platforms that large companies use. An enterprise integration server could use MCP to let an AI agent list available company data sources, fetch records, or trigger workflows, all while enforcing security centrally.

Devtools: Any developer-facing product, such as an API or service, should weigh MCP-first, especially if its users are building AI applications. By offering an MCP server, you give developers a plug-and-play way to incorporate your service into their AI/LLM workflows.

Why consider building APIs first?

Despite MCP's advantages, traditional APIs still make sense in several scenarios.

Mature and proven: APIs like REST have decades of development behind them, providing a highly stable, predictable environment. They are well-documented and have extensive support networks and communities.

Extensive tooling: The mature ecosystem around APIs offers sophisticated tools for security, monitoring, debugging, and scaling. Companies looking for reliable, battle-tested solutions may prefer traditional APIs.

Simpler integration needs: If your application primarily involves straightforward, predictable interactions, APIs offer quicker setup, easier maintenance, and lower upfront complexity.

API-first use cases

High-volume microservices: A financial services company relies on APIs to manage millions of daily transactions, benefiting from the straightforward scalability and robust performance of traditional REST APIs.

Compliance-heavy industries: Electronic health record (EHR) platforms like Epic and Cerner expose APIs based on the HL7 FHIR standard, with access controls designed to meet HIPAA and other data security requirements.

Multi-platform e-commerce: Shopify's API-first approach enables merchants to sell across web, mobile, social media, and in-store channels from a single backend, with third-party developers building thousands of integrations that extend platform capabilities.

Global content delivery: Netflix uses APIs to orchestrate content streaming across more than 190 countries, managing user preferences, content recommendations, and adaptive bitrate streaming while supporting multiple device types and regional content libraries.

Should you still build an API after building MCP?

In many cases, building APIs alongside MCP can be beneficial:

- Compatibility: APIs can ensure backward compatibility for existing customers and integrations.

- Redundancy: APIs provide a reliable fallback and support simpler interactions where MCP's advanced features aren't required.

- Flexibility: Having both MCP and API interfaces gives you more flexibility in how you expose functionality.

Case study 1: E‑commerce platform with an AI shopping assistant (MCP-first)

Challenge: ShopNow is a fictional mid-sized e-commerce platform that wants to offer shoppers an AI assistant for product recommendations and support. In early 2025, they noticed more consumers using AI chatbots to find products. The challenge was enabling an AI shopping assistant to query product catalogs, check inventory, answer policy questions, and even place orders, all without customers leaving the chat interface.

MCP-first decision: The team chose an MCP-first approach over a traditional API expansion. They already had REST APIs for their mobile app, but they realized an MCP server would make AI integration plug-and-play. By building an MCP layer, an AI assistant could dynamically discover ShopNow's "tools": e.g., search_products, check_inventory, get_shipping_options, place_order.

Implementation: The architecture they settled on placed the MCP server as a thin layer on top of microservices. It essentially acted as an orchestrator that mapped AI tool calls to internal API calls. For example, when the AI calls search_products with a query, the MCP server invokes the existing search API and returns results in the JSON format the AI expects. Importantly, they designed some multi-step tools for a better user experience. One tool, find_and_discount, would take a product query and user ID, then internally: search for the product, check the user's loyalty status via API, apply a discount if applicable, and return a personalized offer.
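The thin-layer pattern can be sketched roughly as follows. search_api and loyalty_api are hypothetical stand-ins for ShopNow's existing internal APIs, the catalog data is invented, and the 10% discount for "gold" loyalty members is an assumed business rule. The point is only to show a tool router that maps named tool calls onto internal calls, including one composite tool.

```python
# Hypothetical stand-ins for existing internal REST services.
CATALOG = [
    {"sku": "JKT-1", "name": "Waterproof hiking jacket", "price": 89.0},
    {"sku": "JKT-2", "name": "Insulated parka", "price": 149.0},
]
LOYALTY = {"user-7": "gold"}


def search_api(query: str) -> list[dict]:
    """Return catalog items whose name contains every query word."""
    words = query.lower().split()
    return [p for p in CATALOG if all(w in p["name"].lower() for w in words)]


def loyalty_api(user_id: str) -> str:
    return LOYALTY.get(user_id, "none")


# MCP-facing tools: each maps one AI tool call onto internal API calls.
def search_products(query: str) -> list[dict]:
    return search_api(query)


def find_and_discount(query: str, user_id: str) -> dict:
    """Composite tool: search, check loyalty, apply an assumed 10% gold discount."""
    matches = search_api(query)
    if not matches:
        return {"offer": None}
    product = min(matches, key=lambda p: p["price"])  # cheapest match
    rate = 0.10 if loyalty_api(user_id) == "gold" else 0.0
    return {"offer": {"sku": product["sku"], "price": round(product["price"] * (1 - rate), 2)}}


TOOLS = {"search_products": search_products, "find_and_discount": find_and_discount}


def handle_tool_call(name: str, arguments: dict):
    """Route a tool call by name to the matching handler."""
    return TOOLS[name](**arguments)


print(handle_tool_call("find_and_discount", {"query": "hiking jacket", "user_id": "user-7"}))
```

Keeping find_and_discount on the server means the agent makes one tool call instead of three, and the discount logic stays in code the team controls rather than in the model's reasoning.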

Results: Once deployed, ShopNow's MCP-first AI assistant was a hit. Users could ask things like "Find me a waterproof hiking jacket under $100 and available to ship today," and the agent would deliver precise recommendations, something that used to require manually filtering on the website.

Case study 2: Data analytics SaaS with hybrid interface (MCP development followed by API)

Challenge: DataInsights is building a SaaS analytics platform where users can run queries on their business data and create visualizations. They want to add an AI agent that can answer questions in natural language and generate charts, essentially a conversational BI assistant.

Hybrid approach: They chose a hybrid interface strategy: MCP for the AI assistant features, and traditional APIs for the rest of the platform. Rather than going all-in on MCP for every function, they identified which capabilities made sense to expose to an AI. They built an MCP server for the AI-facing tools. For the main web UI and third-party integrations, they built a REST API (which is more efficient for well-structured queries and bulk data).

Implementation: Under the hood, both the REST API and the MCP server funnel into the same business logic. For example, there's a core function to execute a SQL query and return results. The REST API exposes it as GET /query?sql=... for developers, while the MCP exposes it as a run_query tool that takes a query and dataset name for AI agents.
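A minimal sketch of two interfaces sharing one core function, using an in-memory SQLite database as a stand-in for the customer's data. execute_query, rest_get_query, and run_query_tool are hypothetical names, and the dataset query parameter on the REST side is an assumption added so both adapters can address the same data.

```python
import sqlite3
from urllib.parse import parse_qs, quote, urlparse

# Toy in-memory dataset standing in for a customer's business data.
DATASETS = {"sales": sqlite3.connect(":memory:")}
DATASETS["sales"].executescript(
    "CREATE TABLE orders (quarter TEXT, amount REAL);"
    "INSERT INTO orders VALUES ('2024Q1', 100.0), ('2025Q1', 130.0);"
)


def execute_query(dataset: str, sql: str) -> list[tuple]:
    """Core business logic shared by both interfaces.

    A real deployment would restrict which SQL callers may run.
    """
    return DATASETS[dataset].execute(sql).fetchall()


def rest_get_query(url: str) -> list[tuple]:
    """REST adapter: GET /query?sql=...&dataset=... (dataset param assumed)."""
    params = parse_qs(urlparse(url).query)
    return execute_query(params.get("dataset", ["sales"])[0], params["sql"][0])


def run_query_tool(arguments: dict) -> dict:
    """MCP adapter: the run_query tool takes a query and a dataset name."""
    rows = execute_query(arguments["dataset"], arguments["query"])
    return {"content": [{"type": "text", "text": repr(rows)}]}


sql = "SELECT SUM(amount) FROM orders"
print(rest_get_query("/query?dataset=sales&sql=" + quote(sql)))  # [(230.0,)]
print(run_query_tool({"dataset": "sales", "query": sql}))
```

Because both adapters are thin, a fix or optimization in execute_query reaches REST users and AI agents at the same time.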

The MCP server was stateful in that it maintained the user's context (like which dataset they had currently selected, to allow the AI to omit that in follow-up questions). The web UI didn't need that because state is in the frontend, but for the AI agent, they used an MCP session to carry context between questions.
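One way to carry that context, sketched under assumptions: the server keeps a small state object per session ID, and run_query falls back to the session's selected dataset when the agent omits it. SessionStore, select_dataset, and the session ID strings are hypothetical; in practice the MCP transport and SDK you use determine how sessions are identified.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionState:
    """Per-session context the server remembers between tool calls."""
    selected_dataset: Optional[str] = None
    history: list[str] = field(default_factory=list)


class SessionStore:
    def __init__(self) -> None:
        self._sessions: dict[str, SessionState] = {}

    def get(self, session_id: str) -> SessionState:
        # Create state lazily the first time a session is seen.
        return self._sessions.setdefault(session_id, SessionState())


store = SessionStore()


def select_dataset(session_id: str, dataset: str) -> str:
    store.get(session_id).selected_dataset = dataset
    return f"Using dataset {dataset}"


def run_query(session_id: str, query: str, dataset: Optional[str] = None) -> str:
    """Fall back to the session's selected dataset when the agent omits it."""
    state = store.get(session_id)
    target = dataset or state.selected_dataset
    if target is None:
        raise ValueError("No dataset given and none selected in this session")
    state.history.append(query)
    return f"ran {query!r} on {target}"


select_dataset("s1", "sales")
print(run_query("s1", "SELECT SUM(amount) FROM orders"))  # follow-up omits the dataset
```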

Performance impact: On raw speed, the AI path was slower and consumed more compute. However, for more complex questions like "Compare this quarter's sales with the same quarter last year and explain the difference," the AI agent shone.

It automatically ran two queries, retrieved the data, and produced a narrative and chart: work that would take a human much longer and possibly several separate tools. The team found that for single, simple queries, direct API calls were more efficient (and they continue to support those). But for multi-step analytical tasks, the MCP+AI combination drastically improved user productivity.

Decision matrix: When to build MCP vs API

Use the criteria from the MCP-first and API-first sections above as a decision matrix to guide your choice between building MCPs or APIs:

- If your criteria align strongly with MCP-first, prioritize MCP.

- If they align with API-first, traditional APIs may be your best option.

- If your criteria span both sets, a hybrid approach could be ideal.
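To make that rule of thumb explicit, the criteria from the MCP-first and API-first sections can be encoded as a simple matching function. The criterion labels and the "any overlap means hybrid" rule are assumptions for illustration, not a formal methodology.

```python
# Criteria drawn from the MCP-first and API-first sections of this article.
MCP_FIRST = {"ai_native", "workflow_automation", "enterprise_integration", "devtool_for_ai_builders"}
API_FIRST = {"high_volume_transactions", "compliance_heavy", "simple_predictable_calls", "needs_mature_tooling"}


def recommend(traits: set[str]) -> str:
    """Return 'MCP-first', 'API-first', or 'hybrid' based on which criteria match."""
    unknown = traits - MCP_FIRST - API_FIRST
    if unknown:
        raise ValueError(f"Unknown criteria: {sorted(unknown)}")
    mcp_hits = len(traits & MCP_FIRST)
    api_hits = len(traits & API_FIRST)
    if mcp_hits and api_hits:
        return "hybrid"  # criteria span both sets
    if mcp_hits:
        return "MCP-first"
    if api_hits:
        return "API-first"
    return "insufficient information"


print(recommend({"ai_native", "workflow_automation"}))                  # MCP-first
print(recommend({"enterprise_integration", "high_volume_transactions"}))  # hybrid
```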

Final word

For a devtool startup wanting to attract developers and AI enthusiasts, MCP-first could be a key strategic differentiator (plus you might use your own product as an MCP use case). For an enterprise SaaS with many existing integrations, a cautious hybrid might make more sense initially, so you don't disrupt customers.

MCP-first development offers a compelling vision of software that's ready for the AI-centric world with dynamic discovery, standardized interactions, and agent-ready features built in.

FAQs

What is the Model Context Protocol for AI agents?

The Model Context Protocol (MCP) is an open standard that helps AI agents discover and interact with tools and data sources autonomously. Unlike traditional REST APIs built for human developers, MCP focuses on machine readability and dynamic tool discovery. It acts like a universal language for the AI agent era, allowing disparate services to communicate without custom wrappers for every model. By implementing MCP, engineering teams can future-proof their products for an increasingly agentic ecosystem, where users rely on autonomous assistants to perform complex tasks across multiple enterprise platforms and data silos.

Should teams build MCP servers instead of traditional APIs?

The choice depends on your primary integration target and strategic goals. If you are building AI-native applications or workflow automation tools where agents are the primary users, starting with MCP is a strong advantage for discoverability. However, traditional REST APIs remain the better fit for high-volume microservices, compliance-heavy industries, and scenarios that require mature monitoring or debugging tools. For many enterprise SaaS platforms, a hybrid approach is ideal: expose the core business logic through both a REST API for conventional integrations and an MCP server for AI agent interactions.

How does security and authentication work for MCP servers?

Security in the MCP ecosystem requires a robust authentication layer so that agents can access only authorized tools and data. Because MCP servers often act as orchestrators for internal microservices, implementing machine-to-machine or agent-to-machine authentication is critical. Architects should consider standardized protocols like OAuth 2.0 and OpenID Connect to manage identities. Solutions like Scalekit help manage complex B2B authentication requirements, ensuring that when an AI agent calls a tool like check_inventory, it does so within the security context of the specific enterprise user and the organization's policies.
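The tenant scoping described above can be sketched as a check that runs before every tool call. This assumes the access token has already been validated (signature, expiry, audience) by your OAuth/OIDC library or auth provider, and that its claims include an organization ID and a space-separated scope string. The org_id claim and the tools:check_inventory scope naming are hypothetical conventions, not part of MCP or any particular vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenClaims:
    """Claims from an access token that was already validated upstream."""
    subject: str
    org_id: str
    scope: str  # space-separated, as in standard OAuth scope strings


class Forbidden(Exception):
    pass


# Hypothetical per-tenant inventory data.
INVENTORY = {"acme": {"JKT-1": 12}, "globex": {"JKT-1": 3}}


def check_inventory(org_id: str, sku: str) -> int:
    # Data access is always keyed by the caller's tenant.
    return INVENTORY[org_id].get(sku, 0)


def authorize_tool_call(claims: TokenClaims, tool: str, arguments: dict) -> None:
    """Reject calls lacking the tool's scope or targeting another tenant."""
    if f"tools:{tool}" not in claims.scope.split():
        raise Forbidden(f"missing scope tools:{tool}")
    if arguments.get("org_id", claims.org_id) != claims.org_id:
        raise Forbidden("cross-tenant access denied")


def call_check_inventory(claims: TokenClaims, arguments: dict) -> int:
    authorize_tool_call(claims, "check_inventory", arguments)
    return check_inventory(claims.org_id, arguments["sku"])


claims = TokenClaims(subject="agent-1", org_id="acme", scope="openid tools:check_inventory")
print(call_check_inventory(claims, {"sku": "JKT-1"}))  # 12
try:
    call_check_inventory(claims, {"sku": "JKT-1", "org_id": "globex"})
except Forbidden as exc:
    print(exc)  # cross-tenant access denied
```

The tenant used for data access always comes from the token, never from the tool arguments, so a model cannot reach another customer's data simply by passing a different org_id.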

What makes MCP better for dynamic tool discovery?

MCP allows AI agents to query a server at runtime to identify available tools, their descriptions, and their required inputs through standardized methods like tools/list. Traditional APIs require humans to read documentation and write specific integration code for every endpoint. With MCP, the interface is self-describing, so AI models can work out how to use a service without prior fine-tuning or manual configuration. This standardized format sharply reduces integration friction for new agents entering an ecosystem and lets them chain multiple tools together to handle complex, multi-step user requests.

Is the Model Context Protocol ready for enterprise deployment?

Adoption of the Model Context Protocol is growing rapidly with major players like Atlassian and Stripe already deploying MCP servers. While the protocol is newer than REST, its ability to centralize security and enforce compliance through a single AI interface is appealing to CISOs. Enterprise teams can build thin MCP layers over existing, compliant microservices to expose capabilities securely. This allows organizations to leverage AI agents while maintaining the strict data residency and governance standards required in regulated industries. Using an MCP server as an enterprise integration hub provides a controlled gateway for agentic workflows.

How can teams manage agent to agent authentication?

Managing agent-to-agent authentication involves secure identity delegation and machine-to-machine credentials. As AI agents begin to interact with multiple MCP servers, architects must ensure that each request carries a verifiable identity token. This often involves using the OAuth 2.0 Client Credentials grant or Dynamic Client Registration to manage thousands of unique agent identities across different enterprise environments. Solutions that specialize in B2B authentication provide the infrastructure to handle these complex trust relationships, ensuring that every tool execution is logged, audited, and strictly limited to the permissions granted by the original human user or organization.
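For reference, a Client Credentials token request (RFC 6749, section 4.4) is a form-encoded POST to the authorization server's token endpoint, with the client authenticating itself, commonly via HTTP Basic. The sketch below only builds the request with the Python standard library and does not send it; the token URL, client ID, secret, and scope value are hypothetical.

```python
import base64
from urllib.parse import quote_plus, urlencode
from urllib.request import Request

# Hypothetical values; a real agent would load these from a secret store.
TOKEN_URL = "https://auth.example.com/oauth/token"
CLIENT_ID = "agent-billing-bot"
CLIENT_SECRET = "s3cr3t"


def build_client_credentials_request(scope: str) -> Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    body = urlencode({"grant_type": "client_credentials", "scope": scope}).encode()
    # HTTP Basic client authentication (RFC 6749, section 2.3.1): the ID and
    # secret are form-urlencoded, joined with ":", then base64-encoded.
    basic = base64.b64encode(
        f"{quote_plus(CLIENT_ID)}:{quote_plus(CLIENT_SECRET)}".encode()
    ).decode()
    return Request(
        TOKEN_URL,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


req = build_client_credentials_request("tools:check_inventory")
print(req.get_method(), req.full_url)
print(req.data.decode())  # grant_type=client_credentials&scope=tools%3Acheck_inventory
```

Sending it (for example with urllib.request.urlopen) would return a JSON body containing an access_token that the agent then presents to each MCP server it calls.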

Does an MCP server impact application performance significantly?

Implementing an MCP server as an orchestration layer can add some latency because of the extra translation between the agent and internal services. For simple, single-step queries, a direct REST API call is typically faster and more cost-effective. For complex analytical tasks that require multiple steps or data synthesis, however, the time saved for the user can be substantial: the AI agent coordinates several internal calls autonomously and delivers a complete answer, instead of requiring a human to aggregate data manually. A hybrid strategy lets you use REST for speed and MCP for capability.

Why is MCP considered a differentiator for devtool startups?

For devtool startups, being MCP-first signals that your product is ready for the modern AI stack. Developers building AI applications can integrate your service as a plug-and-play tool without writing custom API connectors. This shortens time-to-value and places your service in the growing directories of thousands of MCP servers used by the open-source community. By providing an agent-ready interface, you lower the barrier to adoption in AI workflows, making your service a natural choice for engineers who prioritize agentic automation over manual integrations.

Why is B2B authentication important for AI agent tools?

B2B authentication is the cornerstone of trust when exposing enterprise data to AI agents via MCP. Agents often operate on behalf of specific organizations, so the auth system must handle multi-tenancy, SAML, and single sign-on (SSO) seamlessly. Without robust B2B auth, agents could potentially access sensitive information across different customer environments. Scalekit provides tools to implement secure, enterprise-grade authentication for your MCP servers. This ensures that every agent interaction is authenticated against the correct tenant, adheres to organizational security policies, and gives CISOs the confidence to deploy AI-driven tools internally.
