Should you wrap MCP around your existing API?

Introduction to MCP Wrapping

Wrapping MCP around an API bridges your existing API with AI agents and Large Language Models (LLMs) like Claude. By layering an MCP server on top of your current API endpoints, you let AI agents interact with external systems through a standardized, machine-callable protocol. Without altering your underlying stack, LLMs can then do more than analyze or summarize data: they can take real action, such as creating, updating, or triaging support tickets, all through simple prompts.

The core idea is to expose your existing API as a set of MCP tools, each representing a specific function or workflow. By adding an MCP endpoint alongside your plain REST endpoints, you let LLMs plug directly into your business processes. This approach accelerates AI integration, making it possible to automate tasks, streamline operations, and connect with external systems, all while preserving the stability and reliability of your existing APIs.

Whether you’re looking to enhance customer support, automate IT workflows, or simply make your services more accessible to intelligent automation, MCP wrapping offers a practical, low-friction path to AI-powered capabilities.

Instead of rebuilding services, wrapping translates existing endpoints into structured tools with typed inputs, response templates, and security rules. In our earlier writeup on AI-native APIs, we explored when rebuilding makes sense, but for many teams, wrapping offers a faster, safer entry point.

Wrapping is a good fit when your API is stable, documented, and versioned. Tools like openapi-to-mcpserver let you convert OpenAPI specs into MCP configs quickly. It’s also helpful when timelines are tight, backend bandwidth is limited, or your AI integration is still in its early stages. Wrappers give you a way to expose specific functions without overhauling your stack.

For complex or legacy systems, wrappers act as a buffer. They isolate brittle internals while still making key functionality available to agents, with custom auth, error handling, and format normalization layered on top. In effect, MCP shifts APIs from being 'called by code' to being 'understood and orchestrated by AI agents.'

In the rest of this guide, you’ll learn how to choose the right MCP wrapper architecture, generate tooling with minimal effort, avoid common pitfalls, and evolve from a quick prototype into something production-grade.

Choosing the right architecture for wrapping MCP around your API

What an MCP wrapper actually does

An MCP wrapper translates an existing API into a machine-callable interface that AI agents can use like functions. It exposes your endpoints as MCP tools, with clearly defined inputs (e.g., parameters and types), outputs (e.g., structured response templates), and security behavior. Each tool includes an input schema specifying the required parameters and their data types, enabling dynamic discovery and proper execution by LLMs.

Instead of manually coding each interaction, the wrapper acts as a declarative layer: it maps REST endpoints to callable methods, and each tool request is routed to the corresponding API endpoint for execution. It can also add optional logic like retries or transformation, and it produces a schema that agents can discover and invoke. Crucially, it doesn’t change your backend; it just makes it understandable to structured AI clients.
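As a rough illustration of what a wrapper publishes for each tool, the sketch below describes one tool as a name, a description, and a JSON Schema for its inputs, then checks an agent's arguments against that schema before anything reaches the backend. The `get_user_profile` tool, its fields, and the `check_arguments` helper are hypothetical; a real wrapper would normally rely on its SDK or a full JSON Schema validator rather than this hand-rolled check.

```python
from typing import Any

# Hypothetical tool definition: name, description, and a JSON Schema for inputs.
GET_USER_PROFILE_TOOL: dict[str, Any] = {
    "name": "get_user_profile",
    "description": "Fetch a user's profile by ID.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "user_id": {"type": "string", "description": "ID of the user"},
            "include_teams": {"type": "boolean", "description": "Include team memberships"},
        },
        "required": ["user_id"],
    },
}

# Maps JSON Schema type names to the Python types accepted for them.
_JSON_TYPES = {"string": str, "boolean": bool, "integer": int, "number": (int, float)}


def check_arguments(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    schema = tool["inputSchema"]
    problems = []
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown argument: {name}")
        elif not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"{name} should be of type {spec['type']}")
    return problems
```

Rejecting a malformed call at this layer gives the agent a specific message to correct itself with, instead of an opaque upstream failure.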

For a step-by-step breakdown of how to convert your API into usable MCP tool definitions, see our detailed walkthrough on mapping APIs into MCP tools.

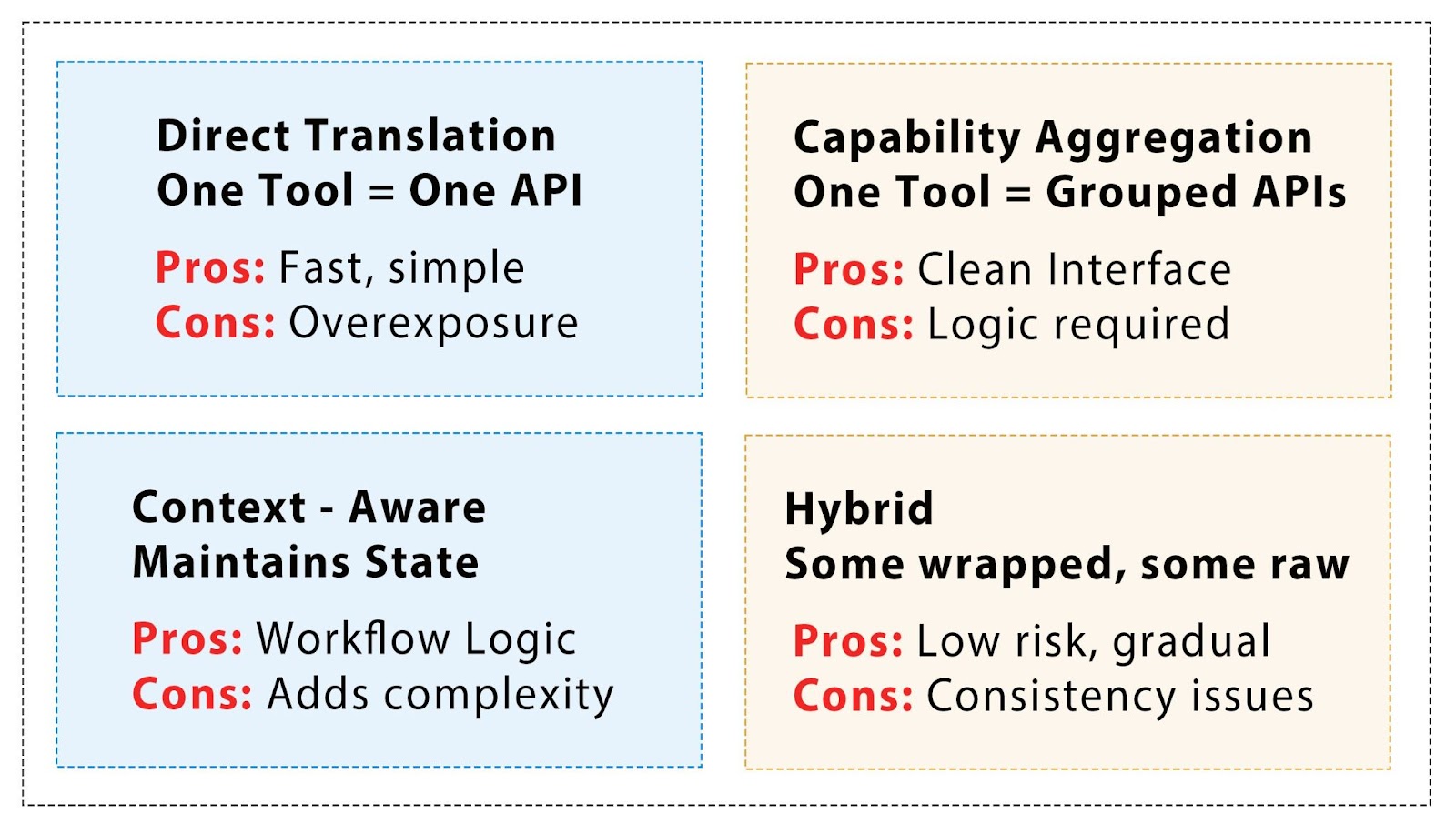

Once you’ve decided to wrap, how you wrap matters. The architecture you choose determines how cleanly AI agents interact with your backend services via the MCP layer and how much surface area you need to maintain over time. Tool calls are made by the AI agent to invoke specific functions exposed by the MCP wrapper. These four patterns cover most real-world MCP wrapper designs.

Direct translation: One API endpoint per tool

This is the fastest pattern to implement. Each REST endpoint becomes a separate MCP tool or function. It works best when your API is already well-structured around discrete actions, such as get_user_profile or cancel_invoice.

- Pros: Minimal wrapper logic, easy to automate via OpenAPI tools.

- Cons: Agents may be overwhelmed by too many low-level tools; inconsistent naming can leak through.

Use this if your API is already agent-friendly and the goal is to generate MCP tools quickly using OpenAPI-based automation, without building wrappers manually.
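A minimal sketch of direct translation, assuming a hand-written routing table rather than generated output: each tool name maps to exactly one method and path, and a tool call is turned into a request without any extra logic. The route table, `build_request`, and the example base URL are illustrative assumptions.

```python
from urllib.parse import quote, urlencode

# Hypothetical one-to-one mapping: each tool name points at exactly one endpoint.
TOOL_ROUTES = {
    "get_user_profile": ("GET", "/users/{user_id}"),
    "cancel_invoice": ("POST", "/invoices/{invoice_id}/cancel"),
}


def build_request(tool_name: str, arguments: dict, base_url: str) -> tuple[str, str]:
    """Translate a tool call into an HTTP method and URL without sending it."""
    method, path_template = TOOL_ROUTES[tool_name]
    remaining = dict(arguments)
    path = path_template
    # Fill path placeholders first; leftovers become query parameters here
    # (a fuller version would send them as a JSON body for POST requests).
    for name in list(remaining):
        placeholder = "{" + name + "}"
        if placeholder in path:
            path = path.replace(placeholder, quote(str(remaining.pop(name)), safe=""))
    query = f"?{urlencode(remaining)}" if remaining else ""
    return method, f"{base_url}{path}{query}"
```

Because the mapping is purely mechanical, it is also the pattern that generators can produce automatically from a spec.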

Capability aggregation: Group endpoints into semantic tools

Some APIs expose related functionality across multiple separate endpoints, like create_invoice, update_invoice, and delete_invoice. For agents, reasoning across these discrete tools can be noisy or unintuitive.

This pattern combines those related endpoints into a single MCP tool with multiple methods, for example, bundling them into an InvoiceManager tool.

- Pros: Cleaner agent interface, with room for internal orchestration and error handling.

- Cons: More design work; wrappers need careful logic and documentation.

Use the capability aggregation pattern when your API is fragmented and needs higher-level abstraction for agents to reason effectively.
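One way to picture aggregation is a single tool that takes an `action` argument and routes to the matching endpoint. The sketch below assumes hypothetical invoice paths and a hypothetical `route_invoice_call` helper; it only decides which request to make and does not send it.

```python
# Hypothetical routing table for a single aggregated "invoice_manager" tool.
INVOICE_ACTIONS = {
    "create": ("POST", "/invoices"),
    "update": ("PATCH", "/invoices/{invoice_id}"),
    "delete": ("DELETE", "/invoices/{invoice_id}"),
}


def route_invoice_call(arguments: dict) -> dict:
    """Turn one aggregated tool call into the single backend request it implies."""
    action = arguments.get("action")
    if action not in INVOICE_ACTIONS:
        raise ValueError(f"action must be one of {sorted(INVOICE_ACTIONS)}")
    method, path = INVOICE_ACTIONS[action]
    if "{invoice_id}" in path:
        if "invoice_id" not in arguments:
            raise ValueError(f"invoice_id is required for action '{action}'")
        path = path.replace("{invoice_id}", str(arguments["invoice_id"]))
    # Everything except the routing fields is forwarded as the request body.
    body = {k: v for k, v in arguments.items() if k not in ("action", "invoice_id")}
    return {"method": method, "path": path, "body": body or None}
```

The agent sees one tool with a small, documented set of actions, while the validation messages keep it from guessing which fields each action needs.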

Context-aware wrapping: Inject memory or workflow state

While capability aggregation simplifies APIs into higher-level tools, the context-aware wrapping pattern goes further. It adds lightweight memory or state tracking between steps, useful when agents perform multi-step flows like search → select → update. Stateless APIs can’t track session or user intent between these steps, so this pattern wraps them with session logic or intermediate storage. Additionally, incorporating node context helps define the environment or scope in which MCP tool functions operate, supporting more advanced workflows by integrating with server-side implementations or API endpoints.

- Pros: Enables more natural, conversational flows for agents.

- Cons: Adds complexity; needs logic for timeout, stale state, and reset behavior.

Use the context-aware wrapping pattern when your flows require chaining or short-term memory not natively supported in the API.
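The session logic this pattern needs can be sketched as a small in-memory store with expiry, so a later "select" step can refer back to an earlier "search". The `SessionStore` class, the `select_result` helper, and the five-minute default are assumptions for illustration; a production wrapper would likely use a shared store such as a cache service.

```python
import time
from typing import Callable, Optional


class SessionStore:
    """Short-lived, in-memory state shared across one agent's tool calls."""

    def __init__(self, ttl_seconds: float = 300.0, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._data: dict[str, tuple[float, dict]] = {}

    def put(self, session_id: str, state: dict) -> None:
        self._data[session_id] = (self._clock() + self._ttl, state)

    def get(self, session_id: str) -> Optional[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return None
        expires_at, state = entry
        if self._clock() >= expires_at:
            del self._data[session_id]  # stale state is dropped, not reused
            return None
        return state

    def reset(self, session_id: str) -> None:
        self._data.pop(session_id, None)


def select_result(store: SessionStore, session_id: str, index: int) -> dict:
    """Resolve 'the second result' against the search stored for this session."""
    state = store.get(session_id)
    if state is None:
        raise LookupError("no active search for this session; run search first")
    return state["results"][index]
```

The explicit expiry and `reset` cover the timeout, stale-state, and reset behavior listed as the cost of this pattern.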

Hybrid wrapping: Mix MCP tools with passthrough calls

Unlike context-aware wrapping, which enhances logic and flow across endpoints, the hybrid pattern focuses on selective exposure. Only certain API capabilities are wrapped as MCP tools, while others remain as-is: either called internally from within the wrapper or accessed directly by trusted services.

This is often the go-to pattern for teams working with fragile legacy systems or experimenting with MCP in limited areas.

- Pros: Flexible and low-risk; lets teams experiment without major rewrites.

- Cons: Harder to maintain consistency; requires clear documentation boundaries.

Use the hybrid pattern when you want to test MCP integrations incrementally without overhauling your backend.
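Selective exposure can be as simple as a flag on each backend operation. In this sketch the operation catalogue, the `expose` flag, and both helpers are hypothetical; the point is only that the MCP layer advertises and resolves a subset while the rest stays internal.

```python
# Hypothetical catalogue of backend operations; only some are agent-facing.
BACKEND_OPERATIONS = {
    "list_tools": {"method": "GET", "path": "/tools", "expose": True},
    "get_service_logs": {"method": "GET", "path": "/logs/{service}", "expose": True},
    "rotate_credentials": {"method": "POST", "path": "/admin/rotate", "expose": False},
}


def agent_visible_tools() -> list[str]:
    """Names the MCP layer advertises; everything else stays internal."""
    return sorted(name for name, op in BACKEND_OPERATIONS.items() if op["expose"])


def resolve_tool(name: str) -> dict:
    """Look up an operation for an agent call, refusing anything not exposed."""
    op = BACKEND_OPERATIONS.get(name)
    if op is None or not op["expose"]:
        # Same error for unknown and hidden tools, so hidden names are not confirmed.
        raise PermissionError(f"tool not available: {name}")
    return op
```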

These four wrapper architecture patterns (direct translation, capability aggregation, context-aware, and hybrid) solve different problems depending on your API’s shape and your integration goals. Most teams don’t stick to just one. Instead, they blend patterns as needed: starting with direct translation for early pilots, layering in context or aggregation for better agent experience, and falling back on hybrid when certain systems can’t be safely exposed.

Choosing a pattern is one thing; deciding whether to wrap at all is another. If you're still weighing whether to build a wrapper or start fresh, here’s a quick comparison to help you assess where your team stands.

Should you wrap or rebuild? A quick checklist

OpenAPI Generators: Fastest path to MCP compatibility using existing schemas

If your API is already defined using an OpenAPI v3 spec, you can skip hand-authoring wrapper logic. OpenAPI-based generators allow you to convert REST definitions into fully structured MCP interfaces automatically. These tools extract operation metadata, input types, request templates, and response formats from your existing spec and generate MCP-compatible configurations that agents can use directly. To further clarify the process, code snippets are used below to demonstrate how to convert a running API into an MCP-compatible interface using OpenAPI generators.

openapi-to-mcpserver: Turn OpenAPI specs into agent-usable MCP configs

The openapi-to-mcpserver tool takes an OpenAPI v3 spec and generates a complete MCP server configuration. This includes structured tool definitions, typed arguments, request templates, and optional markdown-style response formatting to help AI agents interpret responses cleanly. The MCP server exposes only the tools defined in the configuration, which gives you control over which endpoints are accessible to agents.

It’s designed for teams with stable REST APIs and good OpenAPI hygiene, offering a fast path to MCP compatibility with minimal effort.

How the tool works

Input:

You provide a standard OpenAPI 3.0 specification describing your REST API.

Translation process:

The tool parses your endpoints and produces:

- MCP server metadata

- Tool definitions based on operationIds

- Argument types and parameter positions (query, path, body)

- Response templates with formatted field descriptions

- Optional authentication and request headers

Output:

A deployable mcp-server.yaml file that defines each tool and how it maps to your underlying HTTP API.

Example: Developer tooling API

Let’s say your internal platform API exposes endpoints like:

- GET /tools → List available internal tools

- POST /services → Register a new backend service

- GET /logs/{service} → Retrieve recent error logs for a service

Your OpenAPI snippet might look like:

After running:

The tool generates an MCP config like:

This configuration can now be exposed via MCP to agent systems or connected through gateways like Higress.
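To make the translation concrete, here is a rough Python sketch of the same idea: it walks a small OpenAPI fragment for the three endpoints above and derives one tool per operation, recording where each argument is sent. The operationIds, the `limit` parameter, the request body fields, and the output keys (`args`, `position`, `requestTemplate`) are assumptions modeled on the description in this section; the real generator's output may differ in detail.

```python
# Hypothetical OpenAPI fragment for the three endpoints above, as a Python dict.
SPEC = {
    "paths": {
        "/tools": {"get": {"operationId": "listTools", "summary": "List available internal tools"}},
        "/services": {
            "post": {
                "operationId": "registerService",
                "summary": "Register a new backend service",
                "requestBody": {"content": {"application/json": {"schema": {
                    "type": "object",
                    "required": ["name"],
                    "properties": {"name": {"type": "string"}, "owner": {"type": "string"}},
                }}}},
            }
        },
        "/logs/{service}": {
            "get": {
                "operationId": "getServiceLogs",
                "summary": "Retrieve recent error logs for a service",
                "parameters": [
                    {"name": "service", "in": "path", "required": True, "schema": {"type": "string"}},
                    {"name": "limit", "in": "query", "schema": {"type": "integer"}},
                ],
            }
        },
    }
}


def spec_to_tools(spec: dict) -> list[dict]:
    """Derive one tool per operation, recording where each argument is sent."""
    tools = []
    for path, operations in spec["paths"].items():
        for method, op in operations.items():
            args = [
                {"name": p["name"], "type": p["schema"]["type"],
                 "required": p.get("required", False), "position": p["in"]}
                for p in op.get("parameters", [])
            ]
            body = op.get("requestBody", {}).get("content", {}).get("application/json", {})
            schema = body.get("schema", {})
            for name, prop in schema.get("properties", {}).items():
                args.append({"name": name, "type": prop["type"],
                             "required": name in schema.get("required", []), "position": "body"})
            tools.append({
                "name": op["operationId"],
                "description": op.get("summary", ""),
                "args": args,
                "requestTemplate": {"url": path, "method": method.upper()},
            })
    return tools
```

Calling `spec_to_tools(SPEC)` yields three tool entries named after the operationIds, which is why unique, meaningful operationIds matter so much for the generated result.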

Key features

- Positional argument inference: Automatically detects whether each parameter belongs in the path, query string, or body.

- Security conversion: Converts OpenAPI securitySchemes and operation.security blocks into MCP-compatible auth templates.

- Patch support: Use --template to inject auth headers, standard metadata, or override default behaviors.

- Response formatting: Optional markdown-style summaries make the output easier for agents to interpret.

- Output format control: Supports both YAML and JSON outputs.

When to use this tool

- Your API is already described using OpenAPI

- You need MCP access quickly, without writing wrapper code

- You want to experiment with agent integrations using real data

Other tools worth knowing for MCP wrappers

While we went deep on openapi-to-mcpserver, other tools follow different integration models that may be a better fit depending on your constraints.

Custom wrapper libraries: mcp-wrapper-sdk

This SDK-based approach lets you wrap backend logic in code, with full control over behavior.

- How it works: You define MCP tools using a wrapper library in your programming language (e.g., Node.js, Go), mapping functions to MCP-compliant inputs, outputs, and schema.

- Pros: Maximum flexibility. Supports custom error handling, business logic injection, caching, retries, and more.

- Cons: Slower to implement. Requires ongoing maintenance and familiarity with the SDK’s lifecycle.

- Use case: Ideal when you need logic across multiple endpoints, complex conditionals, or tight control over response shaping.

Universal API adapters: universal-mcp-adapter

A configuration-first alternative that doesn’t require code changes or generation.

- How it works: You write a YAML config that declares API endpoints, parameters, headers, response transformations, and security schemes. The adapter runs this config at runtime to expose your tools.

- Pros: No codegen step. Easy to update or modify tool behavior by editing YAML. Supports rate limiting, request metadata, and auth injection out of the box.

- Cons: Less dynamic than SDKs. Logic is declarative and limited to what the adapter supports.

- Use case: Great for teams that want to add structured MCP support quickly without maintaining wrapper logic in code.

These tools all rely on OpenAPI specs as a source of truth and offer tradeoffs in terms of output fidelity, extensibility, and runtime compatibility. If your priority is completeness and template customization, openapi-to-mcpserver remains one of the most robust options.

API endpoint configuration

Configuring your API endpoints for MCP wrapping is all about making your existing functionality discoverable and actionable by AI agents. This starts with defining tool declarations—such as a create_ticket tool for ticket creation functionality—and exposing them through a dedicated MCP endpoint within your existing API.

The MCP server object acts as the central registry for these tool declarations, specifying each available MCP tool, its parameters, and expected behaviors. By implementing an /mcp endpoint, your API provides a dynamic list of available tools and their input schemas, enabling MCP hosts and clients to discover and interact with your services programmatically.

Developers can leverage tools like MCP Inspector to visualize the available tools, inspect their input parameters, and ensure that the MCP endpoint is correctly configured. This setup not only streamlines the process of adding new capabilities but also ensures that your API remains flexible and extensible as new requirements emerge.

By thoughtfully configuring your MCP server, tool declarations, and dedicated endpoints, you make it easy for AI agents to access and utilize your API’s full range of features—unlocking new possibilities for automation and intelligent integration.
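The discovery and invocation flow behind an /mcp endpoint can be sketched as a handler for two JSON-RPC 2.0 methods, `tools/list` and `tools/call`. This is a simplified sketch: it leaves out the initialization handshake, transport, and authentication, and the `TOOLS` registry, the `create_ticket` handler, and `handle_rpc` are hypothetical names.

```python
from typing import Any

# Hypothetical registry: declaration plus the function that performs the call.
TOOLS: dict[str, dict[str, Any]] = {
    "create_ticket": {
        "description": "Create a support ticket.",
        "inputSchema": {
            "type": "object",
            "properties": {"subject": {"type": "string"}, "priority": {"type": "string"}},
            "required": ["subject"],
        },
        # Stand-in for a call to the existing ticket API.
        "handler": lambda args: f"Created ticket: {args['subject']}",
    }
}


def handle_rpc(request: dict[str, Any]) -> dict[str, Any]:
    """Answer the two tool-related JSON-RPC methods for the /mcp endpoint."""
    method, req_id = request.get("method"), request.get("id")
    if method == "tools/list":
        listing = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": listing}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = tool["handler"](params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}]}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}
```

In practice an MCP SDK would handle this plumbing; the sketch only shows why a single registry of tool declarations is enough for clients to discover and call tools.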

Implementation walkthrough: Wrapping an existing API with MCP

Once you’ve chosen a wrapping approach and tooling, such as openapi-to-mcpserver for fast schema conversion, the rest comes down to clean execution. As a running example, consider a support API: wrapped as an MCP tool, it lets a customer support team create tickets and handle new support requests directly through prompts and automated flows.

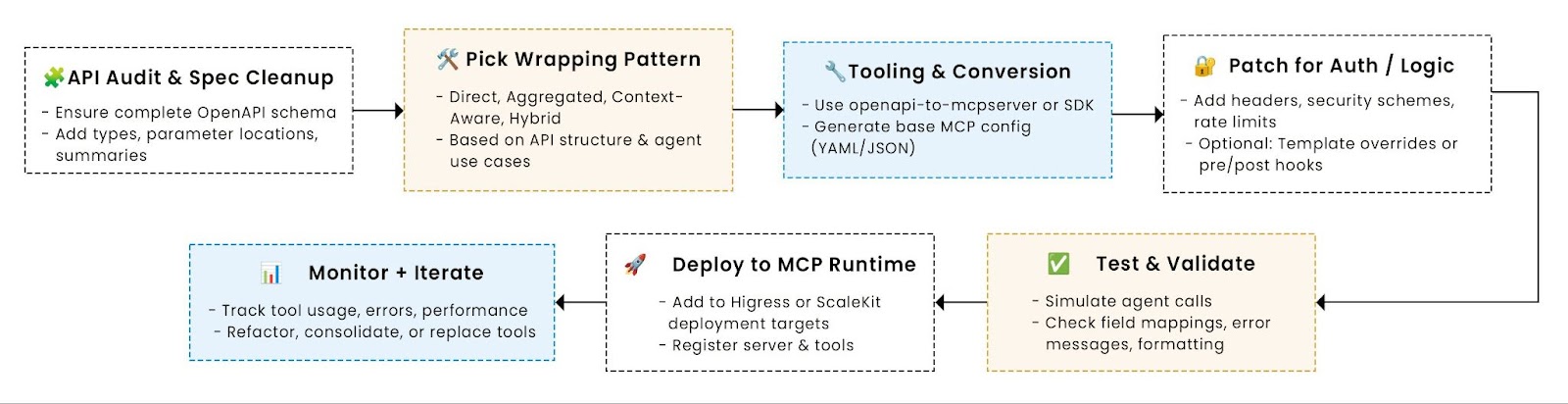

This section walks through the full lifecycle of wrapping an existing API for MCP compatibility, from assessment to deployment.

Step 1: Assess your API readiness

Start by evaluating your OpenAPI spec. Wrappers only work as well as the schema you feed into them.

- Check for missing descriptions, types, or parameter locations

- Verify all operationId fields are unique and meaningful

- Confirm response formats reflect actual API behavior

If your spec is incomplete, fix it first; tools like openapi-to-mcpserver rely heavily on accurate metadata to generate valid tool configurations.
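The readiness checks above can be automated with a small script. The sketch below is a hypothetical `lint_spec` helper that works on a spec already loaded into a dict; it does not resolve `$ref` references or check response schemas, so treat it as a starting point rather than a full validator.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def lint_spec(spec: dict) -> list[str]:
    """Report spec gaps that tend to produce weak or invalid tool definitions."""
    issues, seen_ids = [], set()
    for path, operations in spec.get("paths", {}).items():
        for method, op in operations.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            label = f"{method.upper()} {path}"
            op_id = op.get("operationId")
            if not op_id:
                issues.append(f"{label}: missing operationId")
            elif op_id in seen_ids:
                issues.append(f"{label}: duplicate operationId '{op_id}'")
            else:
                seen_ids.add(op_id)
            if not (op.get("summary") or op.get("description")):
                issues.append(f"{label}: missing summary/description")
            for param in op.get("parameters", []):
                name = param.get("name", "<unnamed>")
                if "in" not in param:
                    issues.append(f"{label}: parameter '{name}' has no location")
                if "type" not in param.get("schema", {}):
                    issues.append(f"{label}: parameter '{name}' has no type")
    return issues
```

Running a check like this in CI keeps spec quality from regressing between releases.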

Step 2: Choose your wrapping tool and format

If your priority is speed and you already use OpenAPI, tools like openapi-to-mcpserver are ideal. For deeper control (e.g., chaining multiple endpoints), SDKs or config-driven wrappers may work better. In this walkthrough, we assume you’re using a generator like openapi-to-mcpserver to create a YAML-based MCP server config.

Step 3: Convert schema into MCP-compatible configuration

Run your tool with the spec input and output location. The resulting file will include:

- Server metadata: Name, base URL, security schemes

- Tool definitions: One per endpoint, named via operationId

- Parameter handling: Automatic position mapping (path, query, body)

- Request/response templates: Optional formatting for agent readability

Make sure to review and edit the generated config. This is where you clarify tool descriptions, trim overly verbose examples, and normalize naming.

Step 4: Handle edge cases and auth

Use template overrides to insert logic the spec doesn’t cover, like:

- Headers for authentication (e.g., API keys, bearer tokens)

- Rate limits or retries

- Security requirements tied to individual tools

If your API uses multiple auth types, define them in components.securitySchemes and reference them via requestTemplate.security. The generator will convert these into usable blocks inside your MCP config.

For a complete walkthrough on how to secure your wrapped MCP server with OAuth, including metadata setup, JWT validation, and scope enforcement, see our complete OAuth implementation guide.

Step 5: Validate and test the output

Before exposing anything to agents:

- Use your MCP runtime’s schema validator (if available)

- Manually test a few tools to verify request/response mappings

- Sanity-check input handling, especially for body and path params

- Confirm the response templates make sense for LLM consumption

If you're deploying this to a Higress-based gateway, ensure the MCP server config is registered properly and routing works as expected.

Step 6: Monitor post-deployment

After go-live, track:

- Tool usage patterns (which endpoints agents actually use)

- Error rates (especially malformed inputs or auth failures)

- Latency or load issues introduced by the wrapper layer

It's also important to monitor tool behavior to ensure MCP tools operate safely and as expected. This feedback helps you decide whether to simplify, enhance, or eventually replace the wrapper with native MCP logic.

A good first implementation sets the foundation for future tool evolution. Keep the scope tight, validate thoroughly, and treat the wrapper config as real infrastructure, even if it started as a quick experiment.

Security and safety considerations

Security and safety are crucial when wrapping APIs with MCP. Since MCP servers expose powerful automation capabilities to AI agents and external systems, you need to implement robust authentication and authorization mechanisms. Techniques such as OAuth and API keys should be used to ensure that only authorized clients can access sensitive endpoints and perform critical actions.

Beyond access control, your MCP wrapper should be designed with comprehensive error handling in mind. This includes providing clear, informative error messages that help both developers and AI agents understand what went wrong, while also preventing the leakage of sensitive internal data. Proper error handling not only improves the reliability of your MCP tools but also enhances trust and safety for all users.

By prioritizing security and safety in your MCP server and tool configurations, you create a trustworthy bridge between your existing APIs and the world of AI integration—enabling seamless, secure interactions with external systems while safeguarding your data and operations.

Next, we’ll look at the common pitfalls teams hit during wrapping and how to solve them cleanly.

Common pitfalls when wrapping APIs with MCP, and how to avoid them

Even with solid tools and a mature API, wrapping for MCP isn't always plug-and-play. Teams often hit snags that aren’t obvious until runtime, especially when API assumptions collide with how MCP agents behave.

In addition to security and safety, it’s important to consider compliance with the EU's AI Act and other regulatory frameworks when exposing APIs to AI agents.

Below are the most common failure points, along with strategies to catch and fix them early.

Authentication mismatches between API and MCP runtime

Many APIs rely on API keys, tokens, or OAuth flows. MCP tools, on the other hand, often operate with different auth patterns, like session-based keys or user-context delegation. If the wrapper doesn’t translate this cleanly, agents get blocked before they even reach the business logic.

Fix:

Use requestTemplate.headers or requestTemplate.security in your MCP config to inject the required auth headers. With tools like openapi-to-mcpserver, you can define these globally via patch templates and customize them per tool if needed.

Also, validate whether auth tokens expire, need refresh logic, or must be scoped per user or per call.
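For tokens that expire, one option is a small provider that caches the token and refreshes it shortly before expiry. This sketch assumes a caller-supplied `fetch_token` function returning a token and its lifetime in seconds; the `TokenProvider` class and the 30-second skew are illustrative, not part of any specific tool.

```python
import time
from typing import Callable, Optional


class TokenProvider:
    """Caches a bearer token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token: Callable[[], tuple[str, float]],
                 skew_seconds: float = 30.0, clock: Callable[[], float] = time.time):
        self._fetch = fetch_token  # returns (token, lifetime_in_seconds)
        self._skew = skew_seconds
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def auth_headers(self) -> dict[str, str]:
        """Headers to attach to the upstream request for this call."""
        if self._token is None or self._clock() >= self._expires_at - self._skew:
            self._token, lifetime = self._fetch()
            self._expires_at = self._clock() + lifetime
        return {"Authorization": f"Bearer {self._token}"}
```

Per-user scoping would follow the same shape, with one provider instance per user or tenant.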

For a full walkthrough on configuring secure, agent-compatible wrappers, including OAuth, API keys, and scoped credentials, check out Scalekit’s MCP OAuth guide.

Unexpected response formats or inconsistent data shapes

Agents rely on predictable response structures to reason correctly. If your API returns overly verbose objects, nested blobs, or inconsistent fields, MCP tools might surface confusing or unusable data.

Fix:

Use responseTemplate blocks to:

- Strip irrelevant metadata

- Flatten nested fields

- Add human-readable field descriptions that make the response more agent-friendly

This is especially important for legacy APIs that weren’t built with LLMs or structured agents in mind.
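As an illustration of the stripping and flattening described above, the sketch below keeps only selected fields from an upstream payload and maps dotted paths to flat keys. The `shape_response` helper and the field names in the usage example are hypothetical; in a generated config the same intent would be expressed through the response template instead.

```python
def shape_response(payload: dict, keep: dict[str, str]) -> dict:
    """Keep only selected fields, flattening dotted paths into flat keys.

    `keep` maps a dotted path in the upstream payload to the output key.
    """
    shaped = {}
    for dotted_path, out_key in keep.items():
        value = payload
        for part in dotted_path.split("."):
            if not isinstance(value, dict) or part not in value:
                value = None
                break
            value = value[part]
        shaped[out_key] = value  # missing fields become None so the shape stays stable
    return shaped
```

For example, `shape_response(raw, {"data.service.name": "service", "status": "status"})` drops everything else in `raw`, including tracing metadata the agent has no use for.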

Rate limiting collisions between agents and upstream APIs

MCP runtimes can make requests more aggressively than typical frontend users. If your underlying API has strict rate limits, agents may trigger throttling errors unintentionally.

Fix:

Throttle calls at the wrapper level using your gateway or server configuration. For tools like Higress, you can define request limits per tool or route. Also, consider caching GET endpoints when appropriate.

Don’t rely on the API to protect itself; handle it proactively in the MCP layer.
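If your gateway does not provide throttling, a token bucket per tool is one common way to do it in the wrapper itself. The `TokenBucket` class, `make_limiters`, and the example limits below are assumptions for illustration, and the sketch is single-process only.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows short bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill based on elapsed time, never exceeding capacity.
        self._tokens = min(self._capacity, self._tokens + (now - self._last) * self._rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


def make_limiters(limits: dict[str, tuple[float, int]],
                  clock: Callable[[], float] = time.monotonic) -> dict[str, TokenBucket]:
    """One bucket per tool, so a noisy tool cannot starve the others."""
    return {tool: TokenBucket(rate, cap, clock) for tool, (rate, cap) in limits.items()}
```

When `allow()` returns `False`, the wrapper can return a structured "retry later" result instead of forwarding the call and tripping the upstream limit.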

Incomplete or misleading error handling

Your API might return a 500 for business validation issues, or bury error details deep inside a JSON blob. If those aren’t mapped properly in your wrapper, agents can’t respond correctly, or worse, think the request succeeded.

Fix:

Map common API errors to structured MCP error responses. Normalize error messages, expose only what’s relevant to the agent, and avoid leaking internal stack traces. Use consistent HTTP status codes where possible (e.g., 400 for bad input, 403 for permission issues).
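A sketch of this mapping, assuming the upstream API returns a JSON body with an optional `message` field: the wrapper converts each response into a tool result with text content and an `isError` flag, passing through safe client-error messages and hiding server-side details. The `to_tool_result` helper and the hint wording are hypothetical.

```python
import json


def to_tool_result(status: int, body: str) -> dict:
    """Convert an upstream HTTP response into a tool result the agent can act on."""
    if 200 <= status < 300:
        return {"content": [{"type": "text", "text": body}], "isError": False}
    try:
        detail = json.loads(body)
    except ValueError:
        detail = {}
    # Surface only a short, safe message; never forward stack traces or raw bodies.
    message = detail.get("message") if isinstance(detail, dict) else None
    if not isinstance(message, str):
        message = None
    if status in (400, 422):
        hint = "Check the arguments and try again."
    elif status in (401, 403):
        hint = "The caller is not permitted to perform this action."
    elif status == 404:
        hint = "The requested resource does not exist."
    elif status == 429:
        hint = "Rate limit reached; retry later."
    else:
        message = None  # server-side details are treated as internal
        hint = "The upstream service failed; retrying may help."
    text = f"Request failed ({status}). {message + '. ' if message else ''}{hint}"
    return {"content": [{"type": "text", "text": text}], "isError": True}
```

An explicit error flag is what prevents the agent from treating a failed call as a success.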

Wrapper performance overhead

In some architectures, wrapping adds latency, especially if each tool hits multiple services or adds heavy response formatting. This might be negligible at low scale, but becomes noticeable under agent-heavy workflows.

Fix:

Benchmark wrapped endpoints under realistic load. If needed, optimize the wrapper by:

- Caching common responses

- Trimming large payloads

- Preprocessing data where possible

Use metrics from your MCP gateway or runtime to spot bottlenecks, not just application-level monitoring.
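Caching common responses can start as a short-lived cache keyed by tool name and arguments. The `TTLCache` class below is a hypothetical, single-process sketch that assumes argument values are hashable and that the cached calls are read-only.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Caches results of read-only calls for a short, fixed period."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[tuple, tuple[float, Any]] = {}

    def get_or_fetch(self, tool: str, arguments: dict, fetch: Callable[[], Any]) -> Any:
        # Arguments are sorted so that key order does not create duplicate entries.
        key = (tool, tuple(sorted(arguments.items())))
        hit = self._entries.get(key)
        if hit is not None and self._clock() < hit[0]:
            return hit[1]
        value = fetch()
        self._entries[key] = (self._clock() + self._ttl, value)
        return value
```

Agents often repeat the same lookup several times within one task, so even a short TTL can remove a large share of upstream calls.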

Catching these issues early makes the difference between a functional prototype and a production-ready MCP interface. In the final section, we’ll look at what happens after launch and how to evolve your wrapper over time without losing stability.

Maintaining and evolving your MCP wrapper over time

Wrapping your API for MCP access is often the fastest way to get started. But once agents are using it in production, the wrapper becomes part of your infrastructure, and you’ll need a plan to keep it healthy, performant, and relevant as both your API and agent usage evolve. Integrating new AI tooling, such as large language models with your existing APIs, can further enhance business processes and automation, enabling the creation of autonomous workflows and empowering your organization to fully leverage AI capabilities.

Version management: Keep your wrapper in sync with API changes

Even stable APIs evolve, with new fields, renamed parameters, and deprecated endpoints. If your wrapper doesn’t stay aligned, tools can break silently or behave incorrectly.

Strategies:

- Treat the MCP config as version-controlled infrastructure (ideally in the same repo as your API or spec)

- Re-run generators like openapi-to-mcpserver after each API release and diff the output

- Use the --tool-prefix or --server-name flags to generate multiple MCP versions during migrations

Version mismatches are the most common post-launch failure mode, so bake in review and re-generation into your release process.
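Diffing regenerated output can be scripted so that breaking changes stand out in review. The sketch below compares two lists of tool definitions, assuming each tool is a dict with a `name` and an `args` list; that shape and the `diff_tools` helper are illustrative and may need adapting to your generator's actual output.

```python
def diff_tools(old: list[dict], new: list[dict]) -> list[str]:
    """List changes between two generated tool sets that could break agents."""
    old_by_name = {t["name"]: t for t in old}
    new_by_name = {t["name"]: t for t in new}
    changes = []
    for name in sorted(old_by_name.keys() - new_by_name.keys()):
        changes.append(f"removed tool: {name}")
    for name in sorted(new_by_name.keys() - old_by_name.keys()):
        changes.append(f"added tool: {name}")
    for name in sorted(old_by_name.keys() & new_by_name.keys()):
        old_args = {a["name"]: a for a in old_by_name[name].get("args", [])}
        new_args = {a["name"]: a for a in new_by_name[name].get("args", [])}
        for arg in sorted(old_args.keys() - new_args.keys()):
            changes.append(f"{name}: removed argument '{arg}'")
        for arg in sorted(new_args.keys() - old_args.keys()):
            kind = "required" if new_args[arg].get("required", False) else "optional"
            changes.append(f"{name}: added {kind} argument '{arg}'")
    return changes
```

Removed tools, removed arguments, and newly required arguments are the changes most likely to break existing agent prompts, so those are worth gating a release on.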

Performance monitoring: Watch the wrapper like any other service

Wrappers often introduce subtle performance issues: redundant API calls, bloated responses, or unmonitored retries. And because MCP tooling sits between the agent and your backend, it’s easy to miss these until they cascade.

Metrics to track:

- Response latency at the wrapper layer

- Error rates by tool/function

- Agent call volume by endpoint

- Rate limit hits or auth failures

Use these to prioritize optimization work, especially if agents start chaining tools or using them more aggressively than expected.
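If your gateway does not already emit per-tool metrics, a minimal in-process collector is enough to get started. The `ToolMetrics` class is a hypothetical sketch that tracks call volume, error rate, and average latency per tool; a production setup would export these to your existing metrics system instead.

```python
from collections import defaultdict


class ToolMetrics:
    """Accumulates per-tool call counts, error counts, and total latency."""

    def __init__(self) -> None:
        self._calls: dict[str, int] = defaultdict(int)
        self._errors: dict[str, int] = defaultdict(int)
        self._latency_total: dict[str, float] = defaultdict(float)

    def record(self, tool: str, latency_seconds: float, ok: bool) -> None:
        self._calls[tool] += 1
        self._latency_total[tool] += latency_seconds
        if not ok:
            self._errors[tool] += 1

    def summary(self, tool: str) -> dict:
        calls = self._calls.get(tool, 0)
        if calls == 0:
            return {"calls": 0, "error_rate": 0.0, "avg_latency_seconds": 0.0}
        return {
            "calls": calls,
            "error_rate": self._errors.get(tool, 0) / calls,
            "avg_latency_seconds": self._latency_total[tool] / calls,
        }
```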

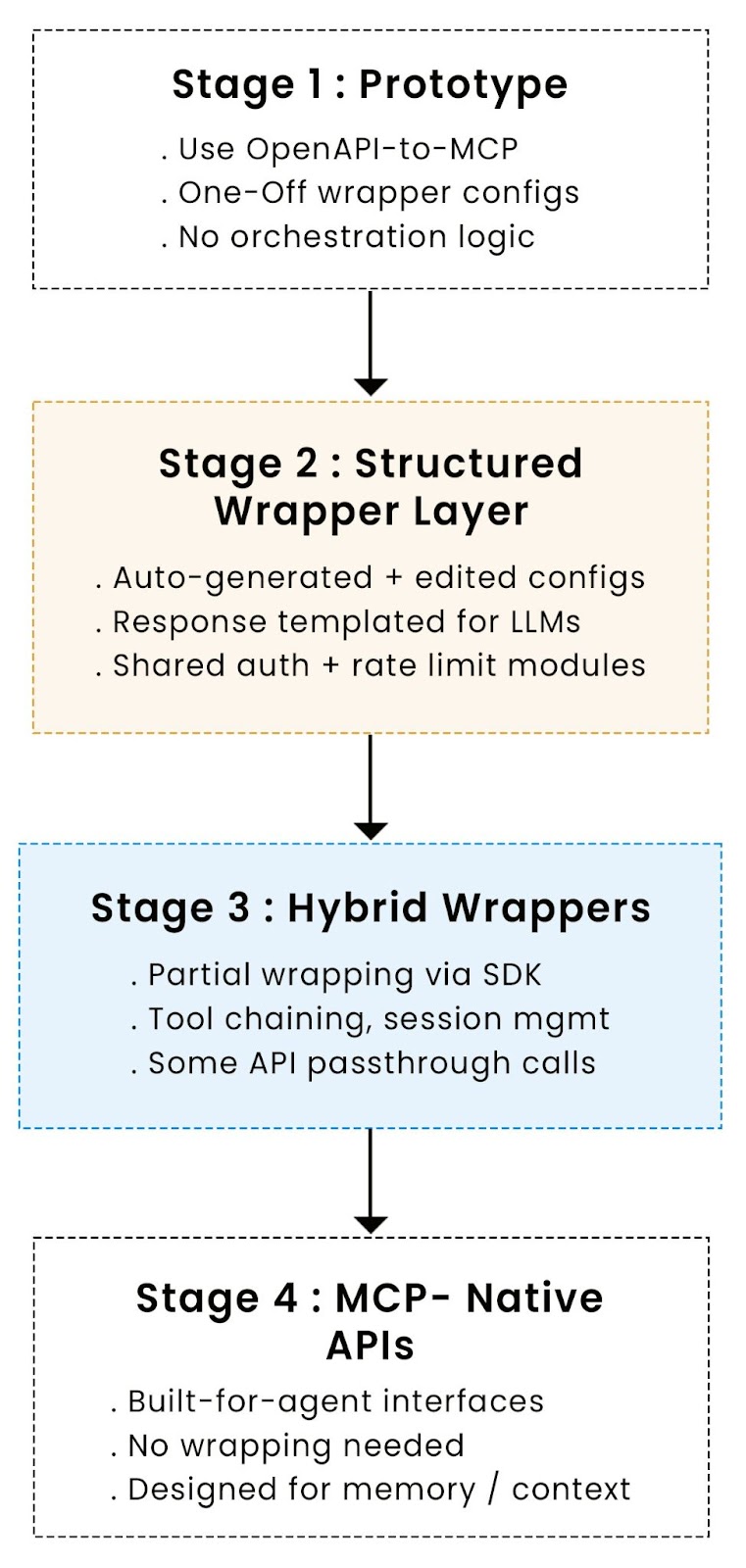

Feature evolution: Know when to enhance, when to rebuild

Early wrappers are often “just enough” to expose functionality. But over time, the needs change:

- Agents may require more semantic clarity

- Business logic may shift closer to the wrapper

- New API endpoints may be better served by dedicated tools or context-aware patterns

Guidance:

- Aggregate or refactor tools when agents need better abstraction

- Avoid putting too much orchestration logic in wrappers; that’s often a signal to rebuild natively

- Watch for tools that get overused or misused; they may need redesign

Migration strategy: From wrapper to native MCP, on your terms

The wrapper doesn’t have to last forever. In many orgs, it acts as a bridge until native MCP-aware systems are built.

If the wrapped layer is stable and predictable, you can gradually:

- Move business logic out of wrappers and into core services

- Replace wrapper-generated tools with first-class MCP services

- Retire patch templates, transforms, and workarounds

A good wrapper sets up a clean migration path, without locking you into short-term decisions.

Wrapping MCP around an API: When speed meets strategy

You started with a mature, stable API, something your team has invested years into. The challenge is making it usable by AI agents without tearing it all down. MCP wrapping offers a practical answer: it lets you expose existing functionality to structured agents quickly, safely, and without disrupting your backend.

We’ve covered how to decide if wrapping is the right move, how to choose the best architecture pattern, how tools like openapi-to-mcpserver can accelerate your setup, and how to avoid common pitfalls, from brittle specs to inconsistent auth. Once you have your MCP-wrapped API, you can point Claude to your MCP endpoint and prompt it to perform tasks like support ticket creation.

If you’re ready to bring your APIs into the agent world, here’s what to do next:

- Evaluate your existing APIs: If they’re versioned and documented, wrapping may take less time than you think.

- Prototype a wrapper: Try out a tool like openapi-to-mcpserver on a small surface area and see what structured agent access feels like.

- Read more: Dive into our blog post on mapping APIs into MCP tools for a closer look at tool design.

- Explore Scalekit: Our platform helps you manage, version, and evolve MCP wrappers across services. From schema governance to auth modules to agent observability, Scalekit gives you the infrastructure to scale AI-native APIs cleanly.

Next steps

MCP wrapping stands out as a powerful strategy for exposing your existing APIs to LLMs and AI agents, enabling them to interact with external systems in a standardized, scalable way. By following best practices for API endpoint configuration, security, and safety, you can build robust MCP wrappers that unlock AI muscle—improving customer support, automating IT workflows, and streamlining internal operations without the need for a complete backend overhaul.

As your organization explores MCP wrapping, stay engaged with the latest advancements in the field. Experiment with new use cases, refine your MCP server and tool definitions, and continuously monitor for opportunities to enhance your AI integration. Whether you’re just starting out or looking to scale your AI-powered operations, embracing MCP wrappers is a key step toward intelligent automation and future-ready infrastructure.

Don’t wait to rebuild everything; start by wrapping what works. And when you’re ready to operationalize it at scale, Scalekit can help.

FAQ

1. How do I handle dynamic query parameters or polymorphic request bodies in MCP wrappers?

MCP tools require statically defined inputs, so highly dynamic query shapes or polymorphic payloads (e.g., oneOf schemas in OpenAPI) need to be flattened or normalized in the wrapper layer. One approach is to expose distinct tool functions for each variant. Another is to use wrapper SDKs that let you pre-process the agent input and map it to the correct backend shape dynamically. Auto-generated tools often require manual editing to handle these edge cases safely.

2. Is it possible to chain multiple API calls within a single MCP tool?

Yes, but not with generator-based tools alone. For that, you’ll need to build custom wrappers using an SDK (e.g., mcp-wrapper-sdk) that lets you define multi-step logic in code. This is especially useful when APIs require a search-then-select pattern, or when business logic spans multiple endpoints. Just ensure your wrapper handles timeouts, retries, and error mapping clearly, or agents may struggle to recover from mid-chain failures.

3. What performance impact does an MCP wrapper introduce, and how do I mitigate it?

The main overhead comes from added serialization (e.g., converting agent calls to HTTP requests), response formatting, and sometimes redundant data reshaping. For critical paths, mitigate latency by:

- Using internal routing rather than external HTTP calls (if on the same infra)

- Caching common responses (especially for GET endpoints)

- Trimming large or verbose payloads in the response template

Also, monitor tool-specific metrics, not just your underlying API, to catch bottlenecks earlier.

4. How do teams manage MCP wrappers across multiple services?

Keep each wrapper version-controlled alongside its API. Assign tool ownership to individual teams, and use shared templates for headers and security to stay consistent. Validate changes with schema diffs before deploying, especially in multi-team environments.

5. What’s the right way to handle authentication in wrappers?

Inject static keys using requestTemplate.headers. For OAuth or dynamic tokens, use SDK logic or gateways that attach tokens at runtime. OpenAPI-based tools can convert defined security schemes automatically. Always separate credentials by environment and avoid hardcoding secrets.

Want to enable AI agents to use your API without rewriting it entirely? Sign up for a Free Forever account with Scalekit and wrap MCP around your existing endpoints seamlessly. Need help designing your wrapper or choosing the right patterns? Book time with our auth experts.
