How to map an existing API into MCP tool definitions

You have 50 REST endpoints. Now what?

You’re staring at a REST API with over 50 endpoints. It powers an internal productivity platform used by multiple teams, with features like projects, tasks, comments, user roles, notifications, and access control. The API was built incrementally over time, and now it’s your job to expose this entire system as a clean, composable set of MCP tools.

Not just wrap it, restructure it. You need to turn these endpoints into schema-driven, agent-compatible tools that can be composed, reasoned about, and reused across automations, no-code interfaces, or AI agents. That means mapping inputs and outputs cleanly, handling edge cases like batch updates and file uploads, and preserving auth and error behavior, all while keeping the tool layer maintainable over time.

This guide walks through that process end-to-end. You’ll learn how to analyze an existing REST API, map endpoints into MCP tools, reshape schemas, handle real-world edge cases, and implement the full tool layer with testable, maintainable code. We’ve built a realistic sample project, a minimal productivity tool API, designed to reflect common DevEx patterns like user management, task flows, and permissions. Every pattern and implementation shown here is grounded in this working example, not toy snippets.

Understand your REST API surface

Your internal REST API spans users, tasks, roles, comments, and access control. It has grown to more than 50 endpoints that are now embedded in internal tooling and workflows. The task is to expose this API as a structured layer of MCP tools: not thin wrappers, but clean, composable, schema-driven capabilities that agents and orchestrators can use safely.

This transformation isn’t mechanical. REST endpoints organize behavior around HTTP verbs and paths. MCP tools organize around named actions with structured inputs and predictable outputs. This shift turns low-level HTTP behavior into agent-compatible, schema-defined actions. If you’re layering MCP on top of an existing service, see wrap MCP around an existing API for design patterns before you start mapping.

Group endpoints by resource and action intent

Start by categorizing the API into logical domains such as users, tasks, comments, roles, and projects. Then, for each domain, identify the kinds of operations exposed: fetching individual records, listing collections, creating new entities, updating fields, or deleting records.

For example:

- GET /users/{id}, POST /users → becomes the user tools group

- GET /projects, PATCH /projects/{id} → becomes project tools

- POST /comments, DELETE /comments/{id} → becomes comment tools

This early classification sets the foundation for tool naming (e.g., get_user, list_projects) and reveals shared object types (e.g., User, ProjectSummary) that can be extracted into common schemas. It also helps flag atypical endpoints early, such as file uploads, batch operations, or nested workflows that will need special treatment in later stages.
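As a rough sketch of this classification step, the snippet below groups the example endpoints by their first path segment and derives verb-style tool names from the HTTP method and path shape. The `Endpoint` shape and the helper names (`singular`, `toolNameFor`, `groupByResource`) are assumptions made for illustration, and the naive singularization would need a lookup table for irregular plurals.

```typescript
// Hypothetical endpoint descriptor; the shape is an assumption for this sketch.
interface Endpoint {
  method: "GET" | "POST" | "PATCH" | "DELETE";
  path: string;
}

// Naive singularization: "users" -> "user".
function singular(resource: string): string {
  return resource.replace(/s$/, "");
}

// Derive a verb-style tool name from the HTTP method and path shape.
function toolNameFor(endpoint: Endpoint): string {
  const segments = endpoint.path.split("/").filter((s) => s.length > 0);
  const resource = segments[0];
  const targetsOneRecord = segments.length > 1; // e.g. /users/{id}
  switch (endpoint.method) {
    case "GET":
      return targetsOneRecord ? "get_" + singular(resource) : "list_" + resource;
    case "POST":
      return "create_" + singular(resource);
    case "PATCH":
      return "update_" + singular(resource);
    case "DELETE":
      return "delete_" + singular(resource);
  }
}

// Group tool names by resource, e.g. { users: ["get_user", "create_user"] }.
function groupByResource(endpoints: Endpoint[]): Record<string, string[]> {
  const groups: Record<string, string[]> = {};
  for (const endpoint of endpoints) {
    const resource = endpoint.path.split("/").filter((s) => s.length > 0)[0];
    if (!groups[resource]) groups[resource] = [];
    groups[resource].push(toolNameFor(endpoint));
  }
  return groups;
}

const exampleEndpoints: Endpoint[] = [
  { method: "GET", path: "/users/{id}" },
  { method: "POST", path: "/users" },
  { method: "GET", path: "/projects" },
  { method: "PATCH", path: "/projects/{id}" },
  { method: "POST", path: "/comments" },
  { method: "DELETE", path: "/comments/{id}" },
];
const toolGroups = groupByResource(exampleEndpoints);
```

A generated list like this is only a starting point. Endpoints that don't fit the CRUD shape (uploads, bulk routes, nested workflows) still need to be named by hand.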

MCP tools represent capabilities, not routes

Every MCP tool corresponds to a single, self-contained capability. It does not directly mirror an HTTP method or route. Instead, each tool expresses its purpose in code and metadata, allowing it to be discovered, composed, and executed independently.

Each tool definition includes:

- name: A consistent, verb-style identifier like get_user

- description: A plain-language explanation of the tool’s function

- inputSchema: A single flattened object combining all relevant parameters (path, query, and body)

- outputSchema: A predictable response format used across tools

- handler: The stateless function that implements the logic

- prompt (optional): A user-facing string for use by agents or no-code builders

Tools may be grouped internally by resource, for example, user-tools.ts or task-tools.ts; however, this grouping is for maintainability purposes and is not part of the MCP runtime. The interface exposed to agents is flat and action-oriented.

Translate OpenAPI specs into usable tool schemas

Most teams begin with an OpenAPI specification. This is a solid foundation, but to create MCP tools, you’ll need to transform that spec into agent-friendly schemas.

Here’s a typical example:

From OpenAPI:

Becomes MCP tool:
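As a hedged illustration of both sides, assume a `GET /users/{id}` operation with a single `id` path parameter. The OpenAPI fragment is written here as a TypeScript object so the two shapes can be compared directly. Both objects are hypothetical and not copied from a real spec.

```typescript
// Hypothetical OpenAPI fragment for GET /users/{id} (assumed for this sketch).
const openApiGetUser = {
  path: "/users/{id}",
  method: "get",
  parameters: [{ name: "id", in: "path", required: true, schema: { type: "string" } }],
  responses: {
    "200": {
      content: {
        "application/json": {
          schema: {
            type: "object",
            properties: { id: { type: "string" }, email: { type: "string" } },
          },
        },
      },
    },
  },
};

// The same capability as a tool definition: one flat input object,
// explicit required fields, and no status codes or headers.
const getUserTool = {
  name: "get_user",
  description: "Fetch a single user by ID.",
  inputSchema: {
    type: "object",
    properties: { userId: { type: "string", description: "ID of the user to fetch" } },
    required: ["userId"],
  },
  outputSchema: {
    type: "object",
    properties: { userId: { type: "string" }, email: { type: "string" } },
    required: ["userId", "email"],
  },
};
```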

During this transformation:

- Flatten all request inputs into a single inputSchema, regardless of where they come from (path, query, body)

- Mark required fields explicitly to avoid silent failures or inconsistencies

- Reshape responses into standard output objects with well-defined types

- Avoid leaking HTTP conventions. MCP tools don’t rely on status codes, headers, or REST-style naming

This step ensures that each tool’s inputs and outputs are self-documenting and ready for chaining or reuse in automated flows.

Design tools for predictability and reusability

The most powerful tool layers aren’t those that expose every endpoint; they’re the ones that developers and agents can predict without reading the docs. That means prioritizing consistency across the entire tool surface.

Follow these principles:

- Stick to naming conventions: Use get_, create_, update_, delete_, list_ consistently

- Share schemas: Use common object types (like User, Task, or CommentSummary) wherever possible

- Avoid overloading tools: If update_user and verify_user do different things, they should be separate tools

- Normalize field names: Always use userId, not a mix of id, uid, or user_id across tools

MCP tools are meant to be long-lived interfaces. They should feel predictable and interchangeable. If someone understands get_user, they should already have a good idea of what get_project or get_task will look like.

Map endpoints into single-purpose tools

Systematically map endpoints into focused, reusable MCP tools

Once your API is grouped by resource, the next step is to convert each endpoint into a single-purpose MCP tool. This isn’t a renaming exercise. Each tool should model a meaningful capability that can be reused across agents, automation flows, and developer UIs.

In this section, we’ll cover the common patterns:

- CRUD operations

- Filtering and search

- Bulk operations

- File uploads/downloads

- Authentication tools

- Webhook-style flows

We’ll focus on the cases where tool structure meaningfully differs. For similar patterns, we’ll show one and describe the rest.

CRUD endpoints become composable tool definitions

Most internal APIs expose standard Create, Read, Update, and Delete (CRUD) operations across resources, such as users, tasks, or projects. Each operation is mapped directly to a single tool, following a predictable structure.

Read-by-ID: GET /users/{id} → get_user

This is the foundational pattern. A path parameter becomes an input field. The response body becomes the output schema. Once your definitions are in place, follow tool calling to wire the request and response flow and return typed results.

The same pattern applies to other entities, such as get_task, get_project, and get_comment, among others. Only the field names and output structure change.
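A minimal sketch of the read-by-ID pattern might look like the following. The `Tool` interface, the `ToolError` class, and the in-memory `usersById` record are assumptions made for this example. The handler is synchronous to keep the sketch short, whereas a handler that calls a real backend would normally be async.

```typescript
interface User {
  userId: string;
  name: string;
  email: string;
}

// Structured error carrying a machine-readable code.
class ToolError extends Error {
  code: string;
  constructor(code: string, message: string) {
    super(message);
    this.code = code;
  }
}

// Generic tool shape for this sketch, following the parts of a tool definition listed earlier.
interface Tool<I, O> {
  name: string;
  description: string;
  inputSchema: { type: "object"; properties: Record<string, { type: string }>; required: string[] };
  handler: (input: I) => O;
}

// Stand-in for the real backend.
const usersById: Record<string, User> = {
  u1: { userId: "u1", name: "Ada", email: "ada@example.com" },
};

const getUser: Tool<{ userId: string }, User> = {
  name: "get_user",
  description: "Fetch a single user by ID.",
  inputSchema: { type: "object", properties: { userId: { type: "string" } }, required: ["userId"] },
  handler: (input) => {
    const user = usersById[input.userId];
    if (!user) throw new ToolError("NOT_FOUND", "No user with ID " + input.userId);
    return user;
  },
};
```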

Listing items: GET /users → list_users

List tools expose pagination and filters directly in the input schema. Avoid hardcoded defaults or undocumented query logic.

This structure is reusable for any resource collection, such as list_tasks, list_projects, etc.
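One way to sketch a list tool, assuming offset/limit pagination and a single `role` filter, is shown here. The field names, the `DEFAULT_LIMIT` value, and the sample data are all illustrative. The applied `offset` and `limit` are echoed back in the output so that the default is visible to callers rather than hidden in the handler.

```typescript
interface UserSummary {
  userId: string;
  role: "admin" | "member";
}

interface ListUsersInput {
  role?: "admin" | "member"; // optional filter
  offset?: number;
  limit?: number;
}

interface ListUsersOutput {
  users: UserSummary[];
  total: number; // number of users matching the filter
  offset: number; // applied offset, echoed back
  limit: number; // applied limit, echoed back
}

// Illustrative default; it should also be stated in the tool description.
const DEFAULT_LIMIT = 2;

const allUsers: UserSummary[] = [
  { userId: "u1", role: "admin" },
  { userId: "u2", role: "member" },
  { userId: "u3", role: "member" },
  { userId: "u4", role: "member" },
];

function listUsers(input: ListUsersInput): ListUsersOutput {
  const offset = input.offset === undefined ? 0 : input.offset;
  const limit = input.limit === undefined ? DEFAULT_LIMIT : input.limit;
  const matching = allUsers.filter((u) => input.role === undefined || u.role === input.role);
  return {
    users: matching.slice(offset, offset + limit),
    total: matching.length,
    offset,
    limit,
  };
}
```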

Creating records: POST /users → create_user

Create tools use request body fields as input and return either the created object or its ID.

Other tools like create_task or create_project follow the same structure, with different fields and validations.

Updating records: PATCH /users/{id} → update_user

Update tools follow the same pattern as create tools, but all fields are optional. The target ID is passed explicitly.

This pattern applies to any partial update, such as update_task or update_project_settings.
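A partial-update handler could be sketched as follows, assuming that an omitted field means "leave unchanged". The `userStore` record stands in for the real backend, and the error is a plain `Error` with a code prefix only to keep the example short.

```typescript
interface User {
  userId: string;
  name: string;
  email: string;
}

// The target ID is required; every updatable field is optional.
interface UpdateUserInput {
  userId: string;
  name?: string;
  email?: string;
}

// Stand-in for the real backend.
const userStore: Record<string, User> = {
  u1: { userId: "u1", name: "Ada", email: "ada@example.com" },
};

function updateUser(input: UpdateUserInput): User {
  const existing = userStore[input.userId];
  if (!existing) throw new Error("NOT_FOUND: " + input.userId);
  // Only fields that were actually provided overwrite the stored values.
  const updated: User = {
    userId: existing.userId,
    name: input.name !== undefined ? input.name : existing.name,
    email: input.email !== undefined ? input.email : existing.email,
  };
  userStore[input.userId] = updated;
  return updated;
}
```

Returning the full updated object, rather than only the changed fields, lets a caller chain the result into another tool without a follow-up read.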

Deleting records: DELETE /users/{id} → delete_user

Deletion tools are similar to read-by-ID tools but may include additional flags. For example, if both soft and hard deletes are supported:

This avoids surprises and gives agents full control over deletion behavior.
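A deletion tool with an explicit flag might be sketched like this. The `hardDelete` field name, the `deletedAt` marker, and the output shape are assumptions for the example. The default is the less destructive soft delete.

```typescript
interface StoredUser {
  userId: string;
  deletedAt: string | null; // null means the user is active
}

interface DeleteUserInput {
  userId: string;
  hardDelete?: boolean; // defaults to a soft delete when omitted
}

interface DeleteUserOutput {
  userId: string;
  deleted: "soft" | "hard";
}

// Stand-in for the real backend.
const storedUsers: Record<string, StoredUser> = {
  u1: { userId: "u1", deletedAt: null },
  u2: { userId: "u2", deletedAt: null },
};

function deleteUser(input: DeleteUserInput): DeleteUserOutput {
  const user = storedUsers[input.userId];
  if (!user) throw new Error("NOT_FOUND: " + input.userId);
  if (input.hardDelete === true) {
    // Hard delete: the record is removed entirely.
    delete storedUsers[input.userId];
    return { userId: input.userId, deleted: "hard" };
  }
  // Soft delete: the record stays but is marked as deleted.
  user.deletedAt = new Date().toISOString();
  return { userId: input.userId, deleted: "soft" };
}
```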

Filtering, batching, and binary operations require special handling

Internal developer tools often involve more complex workflows, such as filtering across fields, handling multiple objects simultaneously, or processing binary data. These patterns still map cleanly into tools with the right schema structure.

Filtering/search endpoints: GET /tasks?status=open&assignedTo=123 → search_tasks

If filtering logic is more complex than simple lists, model filters as first-class input fields.

Tools like search_comments or search_projects can follow this format with adjusted fields.

Batch operations: POST /tasks/bulk → create_tasks_batch

Bulk tools require array inputs and must define how partial errors are handled, either by rejecting the entire batch or returning results for each item.

This pattern also applies to bulk updates or deletes; just adapt the item shape and handler behavior.
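To make the partial-failure choice concrete, here is a sketch of the "return results for each item" option. The `BatchItemResult` union and the validation rule (non-empty title) are assumptions for illustration. An all-or-nothing variant would validate every item first and throw before writing anything.

```typescript
interface NewTask {
  title: string;
  projectId: string;
}

// One result per input item, in input order.
type BatchItemResult =
  | { index: number; ok: true; taskId: string }
  | { index: number; ok: false; error: { code: string; message: string } };

interface CreateTasksBatchOutput {
  results: BatchItemResult[];
  created: number;
  failed: number;
}

// Stand-in for ID generation in the backend.
let nextTaskNumber = 1;

function createTasksBatch(input: { tasks: NewTask[] }): CreateTasksBatchOutput {
  const results = input.tasks.map((task, index): BatchItemResult => {
    if (task.title.trim().length === 0) {
      return {
        index,
        ok: false,
        error: { code: "INVALID_INPUT", message: "title must not be empty" },
      };
    }
    const taskId = "task_" + nextTaskNumber;
    nextTaskNumber += 1;
    return { index, ok: true, taskId };
  });
  const created = results.filter((r) => r.ok).length;
  return { results, created, failed: results.length - created };
}
```

Keeping the `index` on every result lets the caller match failures back to the items it sent, even when some items succeed.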

File uploads/downloads: POST /attachments/upload, GET /attachments/{id}/download

Because tool inputs and outputs are structured JSON, large binary payloads are a poor fit. A common pattern is to return a signed upload or download URL instead.

Upload:

Download:

This decouples binary transfer from core logic and works well with cloud storage integrations.

Authentication flows become standard tools

Login and token refresh are stateless tools. They take credentials as input and return access tokens, refresh tokens, and expiry timestamps in the output schema.

Reshape specs into tool-ready schemas

OpenAPI specs need reshaping before they’re usable in tools

Most internal APIs come with OpenAPI specs, a helpful starting point. But OpenAPI is designed around HTTP routes, not schema-driven capabilities. MCP tools require tighter specifications: flattened inputs, explicit types, consistent output structures, and error behavior that’s machine-readable. If you are defining your first tool, start with the tools overview to see how names, parameters, and results are structured.

This section guides you through reshaping OpenAPI specs into fully defined MCP schemas, without leaking HTTP concepts or relying on implicit assumptions.

Each endpoint becomes a tool with flattened input/output

Let’s start with a basic OpenAPI operation:

This becomes a tool like:

Notice the key changes:

- Path/query/body inputs are merged into a flat inputSchema

- Parameter names are renamed for clarity and consistency (id → userId)

- Response content becomes a structured outputSchema

- HTTP response codes are removed; tools always return structured data or throw

This flattening step makes tools easier to compose, test, and validate, both by humans and agents.
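The flattening itself can be automated for simple cases. The sketch below uses a deliberately simplified operation shape (real OpenAPI nests the body schema under `requestBody.content`), and `flattenOperation` and its `renames` argument are hypothetical names. It merges path, query, and body fields into one input schema, applies renames such as id → projectId, and fails loudly on name collisions instead of silently overwriting a field.

```typescript
// Simplified view of an OpenAPI operation (an assumption for this sketch).
interface OpenApiParam {
  name: string;
  in: "path" | "query";
  required?: boolean;
  schema: { type: string };
}

interface OpenApiOperation {
  parameters: OpenApiParam[];
  requestBody?: { properties: Record<string, { type: string }>; required?: string[] };
}

interface FlatInputSchema {
  type: "object";
  properties: Record<string, { type: string }>;
  required: string[];
}

function flattenOperation(op: OpenApiOperation, renames: Record<string, string>): FlatInputSchema {
  const flat: FlatInputSchema = { type: "object", properties: {}, required: [] };
  const add = (name: string, type: string, required: boolean): void => {
    const finalName = renames[name] !== undefined ? renames[name] : name;
    if (flat.properties[finalName]) throw new Error("Name collision on " + finalName);
    flat.properties[finalName] = { type };
    if (required) flat.required.push(finalName);
  };
  // Path parameters are always required; query parameters only when marked.
  for (const p of op.parameters) {
    add(p.name, p.schema.type, p.in === "path" || p.required === true);
  }
  if (op.requestBody) {
    const body = op.requestBody;
    const bodyRequired = body.required !== undefined ? body.required : [];
    for (const key of Object.keys(body.properties)) {
      add(key, body.properties[key].type, bodyRequired.indexOf(key) !== -1);
    }
  }
  return flat;
}

// Hypothetical operation: POST /projects/{id}/tasks?notify=true
const flatInput = flattenOperation(
  {
    parameters: [
      { name: "id", in: "path", schema: { type: "string" } },
      { name: "notify", in: "query", schema: { type: "boolean" } },
    ],
    requestBody: {
      properties: { title: { type: "string" }, dueDate: { type: "string" } },
      required: ["title"],
    },
  },
  { id: "projectId" }
);
```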

Use JSON schema conventions, but fill in the missing pieces

OpenAPI describes request and response bodies with a dialect of JSON Schema, but specs often omit critical details. For MCP tools, those gaps must be closed explicitly.

Type mapping

Optional fields: Make field optionality explicit. In JSON Schema, list mandatory fields in the required array; in Zod, mark everything else with .optional().

Enums: Preserve enums directly. They help agents and UIs expose valid values predictably.

Handle nested objects and arrays with full structure and validation

For nested structures, such as a list of tasks in a project, define full schemas that include required fields, array constraints, and nested validations. Avoid loosely typed object blobs.

Define success responses directly, no more 200s

Tool outputs should be direct objects, not nested under response codes. Just define the outputSchema as a flat object with typed fields like projectId or createdAt.

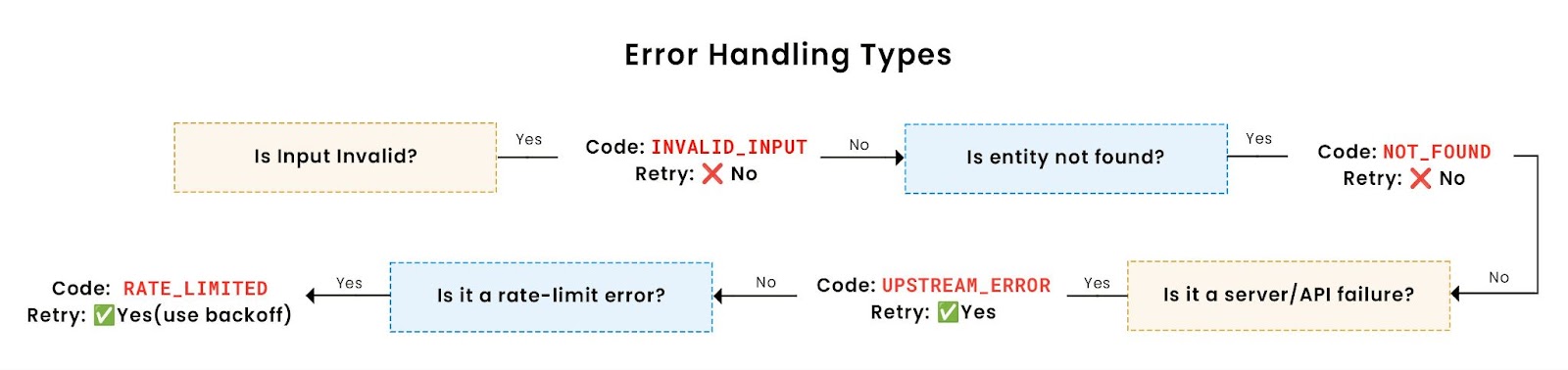

Normalize errors into predictable formats

Errors should be structured: include a code, message, and optionally a retryable flag. Avoid leaking raw exceptions or HTTP codes. Common error codes include: NOT_FOUND, INVALID_INPUT, UNAUTHORIZED, RATE_LIMITED. Here’s a standardized breakdown of common error types and how to handle them:
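As one possible mapping, the sketch below converts upstream HTTP statuses into the structured error shape described above. The status-to-code table is an assumption, as are the `INTERNAL` fallback code and the `normalizeHttpError` name. `FORBIDDEN` is included because it is used for access-control failures later in this guide.

```typescript
type ToolErrorCode =
  | "INVALID_INPUT"
  | "UNAUTHORIZED"
  | "FORBIDDEN"
  | "NOT_FOUND"
  | "RATE_LIMITED"
  | "INTERNAL";

interface ToolErrorShape {
  code: ToolErrorCode;
  message: string;
  retryable: boolean;
  retryAfterMs?: number;
}

// Translate an upstream HTTP status into a structured tool error.
function normalizeHttpError(status: number, retryAfterSeconds?: number): ToolErrorShape {
  switch (status) {
    case 400:
    case 422:
      return { code: "INVALID_INPUT", message: "The request failed validation.", retryable: false };
    case 401:
      return { code: "UNAUTHORIZED", message: "The token is missing or invalid.", retryable: false };
    case 403:
      return { code: "FORBIDDEN", message: "The caller may not perform this action.", retryable: false };
    case 404:
      return { code: "NOT_FOUND", message: "The requested record does not exist.", retryable: false };
    case 429: {
      const limited: ToolErrorShape = {
        code: "RATE_LIMITED",
        message: "Too many requests.",
        retryable: true,
      };
      if (retryAfterSeconds !== undefined) limited.retryAfterMs = retryAfterSeconds * 1000;
      return limited;
    }
    default:
      // Treat 5xx as transient; anything else unexpected is not retried.
      return { code: "INTERNAL", message: "Unexpected upstream failure.", retryable: status >= 500 };
  }
}
```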

Model pagination explicitly in input/output schemas

Declare pagination fields directly in tool schemas. Use offset/limit or nextCursor depending on the model your API supports. Always expose the pagination structure in both input and output.
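For the cursor-based variant, a minimal sketch could look like this. It assumes the cursor is simply the `taskId` of the last item returned. Real APIs usually hand out an opaque token instead, and callers should treat it as opaque either way. `nextCursor` is `null` on the last page so that the end of the collection is explicit.

```typescript
interface Task {
  taskId: string;
  title: string;
}

interface ListTasksInput {
  limit: number;
  cursor?: string; // omit to start from the beginning
}

interface ListTasksOutput {
  tasks: Task[];
  nextCursor: string | null; // null when there are no more pages
}

// Stand-in for the real backend.
const allTasks: Task[] = [
  { taskId: "t1", title: "Plan" },
  { taskId: "t2", title: "Design" },
  { taskId: "t3", title: "Build" },
  { taskId: "t4", title: "Test" },
  { taskId: "t5", title: "Ship" },
];

function listTasks(input: ListTasksInput): ListTasksOutput {
  if (input.limit < 1) throw new Error("INVALID_INPUT: limit must be at least 1");
  let start = 0;
  if (input.cursor !== undefined) {
    const position = allTasks.map((t) => t.taskId).indexOf(input.cursor);
    if (position === -1) throw new Error("INVALID_INPUT: unknown cursor");
    start = position + 1;
  }
  const page = allTasks.slice(start, start + input.limit);
  const hasMore = start + page.length < allTasks.length;
  return { tasks: page, nextCursor: hasMore ? page[page.length - 1].taskId : null };
}
```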

Metadata should be structured, not leaked from HTTP headers

Only include metadata fields like version or modifiedAt when they affect how downstream systems process the response. Don’t expose low-level HTTP headers unless they’re meaningful to tool consumers.

At the end of this stage, your schemas should be fully tool-ready

Each tool should now have the following.

- A flattened, self-contained inputSchema

- A clear, typed outputSchema

- No references to HTTP semantics

- Explicit error handling structure

- Validated nested fields and arrays

- Optional pagination or metadata if required

This structure makes tools consistent, testable, and composable, and avoids surprises when used in workflows, no-code apps, or AI agents.

Model flows, retries, and auth cleanly

Real-world APIs go beyond single calls

In an internal developer platform, many operations span multiple steps. Creating a task might involve validating user permissions, checking project membership, and notifying assignees. Assigning a role could trigger multiple policy updates. These aren’t edge cases; they’re the norm in real systems.

This section covers how to represent multi-step workflows, stateful sessions, authentication, and external constraints, such as rate limits, using MCP tools. You’ll see how to model these patterns cleanly while keeping tools stateless, predictable, and easy to test.


Model multi-step flows using composable or compound tools

There are two ways to handle operations that require multiple API calls.

- Expose each step as an independent tool: Expose multi-step flows as small tools, such as validate_project_access, create_task, and notify_assignees. This keeps logic transparent and composable.

- Wrap the entire flow in a single higher-level tool: Use transactions or idempotency to avoid partial state. If your backend supports transactions, use them inside the handler. Otherwise, support deduplication via idempotency keys or staged writes.
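For the second option, here is a sketch of a compound tool that deduplicates on an idempotency key. The tool name `createTaskAndNotify`, the `idempotencyKey` field, and the module-level records are assumptions. The records stand in for backend tables, so the handler itself still keeps no state of its own.

```typescript
interface CreateTaskFlowInput {
  idempotencyKey: string; // chosen by the caller, reused on retries
  projectId: string;
  title: string;
  assigneeId: string;
}

interface CreateTaskFlowOutput {
  taskId: string;
  notified: boolean;
}

// Stand-ins for backend storage.
const completedFlows: Record<string, CreateTaskFlowOutput> = {};
const notificationsSent: string[] = [];
let taskCounter = 0;

function createTaskAndNotify(input: CreateTaskFlowInput): CreateTaskFlowOutput {
  // Replay: the same key returns the stored result without writing again.
  const previous = completedFlows[input.idempotencyKey];
  if (previous) return previous;

  // Step 1: create the task.
  taskCounter += 1;
  const taskId = "task_" + taskCounter;

  // Step 2: notify the assignee.
  notificationsSent.push(input.assigneeId);

  // Record the outcome so a retry with the same key is a no-op.
  const result: CreateTaskFlowOutput = { taskId, notified: true };
  completedFlows[input.idempotencyKey] = result;
  return result;
}
```

With this in place, an agent that times out and retries the call does not create a second task or send a second notification.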

Design tools to be stateless, even when sessions exist

For session-based APIs, accept tokens in the inputSchema and validate them explicitly. Stateless design means no assumptions about prior calls.

Expose authentication flows as standalone tools

Treat authentication as just another pair of tools: authenticate_user and refresh_session_token. They return tokens and expiry values, and every other tool that requires auth should accept the token explicitly as input. Never rely on global state or headers.

Respect rate limits and expose them in tool behavior

Raise a structured RATE_LIMITED error when the upstream API responds with HTTP 429, optionally including retryAfterMs so callers can back off gracefully.

Add backoff and retry inside the handler for safe cases

For safe, idempotent tools, you can add backoff-and-retry logic in the handler. Avoid retries for tools that mutate state unless your backend supports deduplication.
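A retry wrapper along these lines could be sketched as follows. It assumes errors carry a boolean `retryable` field, as in the structured error format described earlier. The names `withRetry` and `RetryOptions` and the doubling backoff schedule are illustrative. The `sleep` function is injected so that tests can run without real delays.

```typescript
interface RetryOptions {
  maxAttempts: number;
  baseDelayMs: number;
  sleep: (ms: number) => Promise<void>;
}

// Default sleep for production use.
const realSleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Only errors explicitly marked retryable are retried.
function isRetryable(error: unknown): boolean {
  return (
    typeof error === "object" &&
    error !== null &&
    (error as { retryable?: boolean }).retryable === true
  );
}

async function withRetry<T>(operation: () => Promise<T>, options: RetryOptions): Promise<T> {
  let attempt = 0;
  while (true) {
    attempt += 1;
    try {
      return await operation();
    } catch (error) {
      if (!isRetryable(error) || attempt >= options.maxAttempts) throw error;
      // Exponential backoff: base, 2x base, 4x base, ...
      await options.sleep(options.baseDelayMs * Math.pow(2, attempt - 1));
    }
  }
}
```

A read-only handler might call `withRetry(() => fetchUserFromUpstream(userId), { maxAttempts: 3, baseDelayMs: 200, sleep: realSleep })`, where `fetchUserFromUpstream` is a hypothetical upstream call.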

Queue background jobs with tool-acknowledged handoffs

Queue-backed tools should return an exportId and status like queued. Pair them with a status-checking tool like get_export_status.

Handle access control and scoped capabilities at the tool level

Apply access control at the tool level. Validate roles explicitly using the provided token, and raise FORBIDDEN errors for unauthorized actions. Do not assume upstream access control will always catch this. Tool boundaries are the last guardrail, and the most visible to the consumer.
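A tool-level role check might be sketched like this. The `sessions` lookup is a stand-in for real token verification, and the role names and the `requireRole` helper are assumptions. The point is that a missing token and an insufficient role produce different, structured errors.

```typescript
type Role = "admin" | "member" | "viewer";

class ToolError extends Error {
  code: string;
  constructor(code: string, message: string) {
    super(message);
    this.code = code;
  }
}

// Hypothetical token lookup; a real handler would verify a signed token instead.
const sessions: Record<string, { userId: string; role: Role }> = {
  "token-admin": { userId: "u1", role: "admin" },
  "token-viewer": { userId: "u2", role: "viewer" },
};

// Resolve the token and check the role before any work happens.
function requireRole(sessionToken: string, allowed: Role[]): { userId: string; role: Role } {
  const session = sessions[sessionToken];
  if (!session) throw new ToolError("UNAUTHORIZED", "Unknown or expired token.");
  if (allowed.indexOf(session.role) === -1) {
    throw new ToolError("FORBIDDEN", "Role " + session.role + " may not perform this action.");
  }
  return session;
}

function deleteProject(input: { sessionToken: string; projectId: string }): {
  projectId: string;
  deletedBy: string;
} {
  const session = requireRole(input.sessionToken, ["admin"]);
  // The actual deletion would happen here, after the check has passed.
  return { projectId: input.projectId, deletedBy: session.userId };
}
```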

By handling sessions, retries, flows, and auth explicitly, your tools become robust under real-world usage, not just in ideal test cases. These patterns also make tools easier to monitor and extend later, which we’ll cover next in the implementation and deployment walkthrough.

Implement tools using clean, testable code

You’ve now mapped your internal API into a clean set of MCP tools. But schema design is only half the picture. You still need a reliable, testable codebase that actually implements those tools. This section walks through a real project built around an internal developer platform, using real tools like get_user, create_project, and list_tasks, to show how to structure, register, and execute MCP tools cleanly. You can try out the full project at mcp-api-demo.

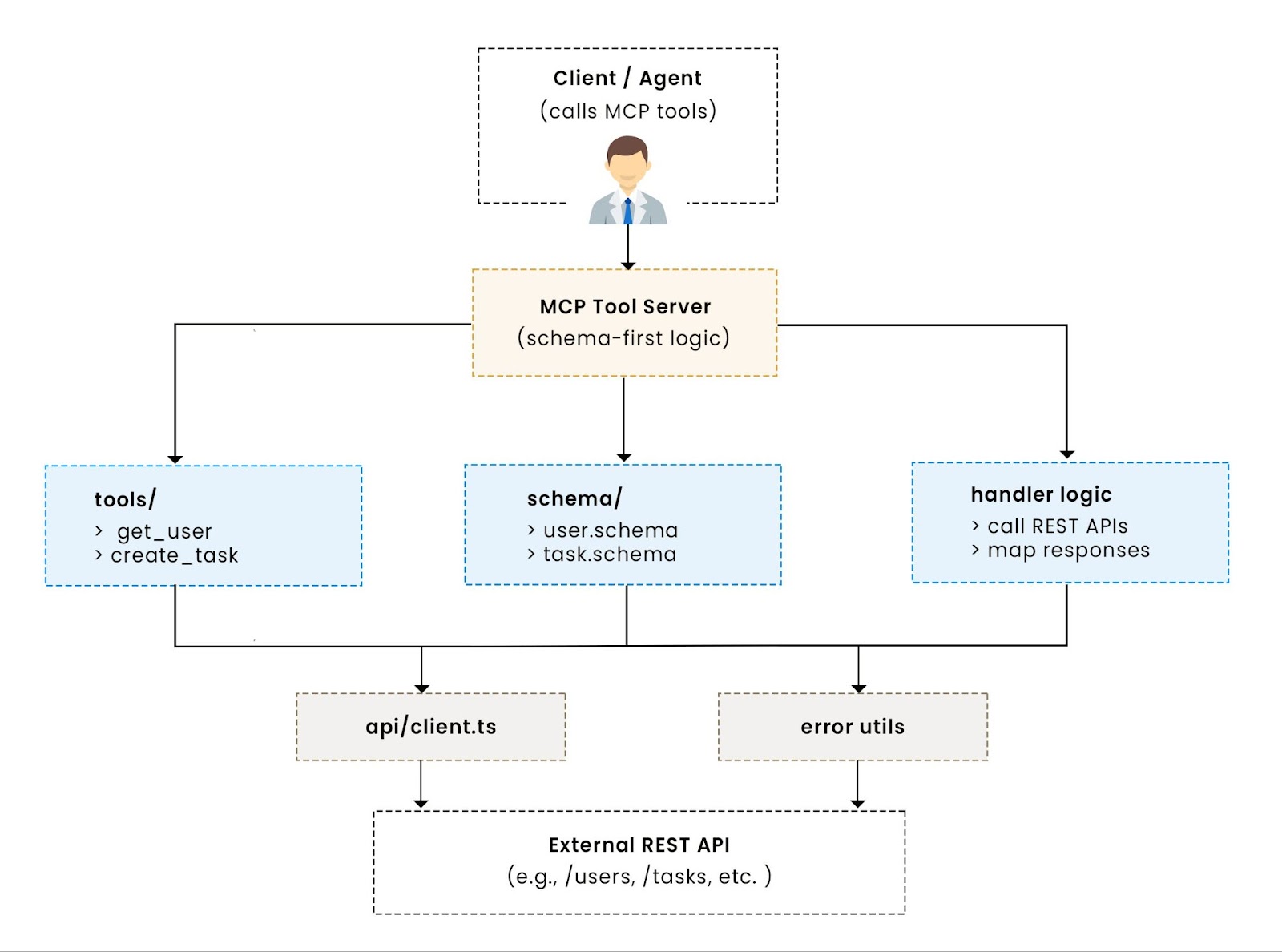

Project uses modular structure with shared schemas and mock backend

The codebase follows a simple modular layout to keep schemas, logic, and mocks separated:

This structure mirrors your API domain (users, tasks, projects) while keeping tool handlers decoupled and composable.

Tool definitions are pure functions with schema-first design

Each tool defines a single action, using inputSchema, outputSchema, and a stateless handler. Here’s a real example from your project:

The get_user tool looks like this:

The create_user tool is defined similarly:

Validation uses shared Zod schemas from schemas/shared.ts.

Tools are registered via a central entry point

The server.ts file simply imports all defined tools and logs them for confirmation:

This keeps tools modular; add new tools without changing infra.

Tools run against a mock backend that behaves like real data

Your project uses mockDB, an in-memory object store, to simulate users, tasks, and projects:

Because tool handlers hold no state of their own and only touch data through mockDB, this backend makes it easy to test behavior without depending on external systems.

Schema reuse keeps tools consistent and predictable

Rather than redefining every field inline, shared schemas handle validation and normalization:

For example, both create_project and create_user derive inputs from these schemas using .pick() or .omit().

Pattern: Tool logic is self-contained and testable

Tool handlers keep no state between calls, and any writes go through the backend they are given, making them easy to test and run anywhere.

Even tools like create_project, which perform validation and aggregation, follow this pattern:

Errors are consistent, data is deterministic, and the output can be trusted. This project powers every example in this guide, from auth to error handling. In the next section, we’ll test these tools for correctness and reliability.

Testing and validation of MCP tool implementations

A well-defined MCP tool is only reliable if its implementation is thoroughly tested. Since tools expose structured interfaces, with defined schemas, error codes, and deterministic behavior, they lend themselves naturally to unit and integration testing.

Tests are written using Vitest, with full coverage for logic, schemas, and error behavior.

Unit testing each tool handler ensures predictable logic

Each tool has an associated unit test that verifies:

- Correct behavior with valid inputs

- Proper handling of invalid or missing fields

- Accurate error codes and messages

- Side effects (like record creation or mutation)

For example, here’s your test for get_user:

These tests confirm not only that the handler works, but that the error behavior is structured and machine-readable.

Schema validation is enforced through shared Zod objects

Input shape is validated with Zod before the handler runs, and handlers add the checks a schema can’t express. For example, create_user throws on duplicate emails:

This ensures invalid data doesn’t leak into the system, even if the caller uses the tool incorrectly.

Mock backend makes test setup fast and isolated

Tests reset the in-memory mock database before each run:

This ensures a clean slate for every test case, eliminating the need to mock network calls or spin up external services. It also enables fast and deterministic test execution.

Example: Testing failure cases in create_project

Each case ensures the tool is safe under invalid input and edge scenarios, a requirement if agents or non-developers will consume the tool.

Integration tests can simulate end-to-end behavior

Your handler-level tests can be extended to simulate full workflows. For example:

This kind of flow testing ensures schema compatibility and flow correctness across tools.

Regression tests protect schema contracts from drift

Regression tests guard against schema drift: field renames, changed error codes, or structural mismatches. Snapshotting outputs or linting schemas can catch these changes early in CI pipelines.

MCP tools are contracts. Testing their behavior under all conditions keeps your system reliable and safe to evolve. In the next section, we’ll cover how to move these tools into production with confidence, including deployment models, scaling, and observability.

Deployment and monitoring for MCP-based tool servers

Your MCP tool layer is schema-driven, stateless, and testable, which means deployment doesn’t need to be complex. But it does need to be reliable.

This section demonstrates how to deploy your tools using lightweight patterns (such as containers or serverless functions), configure environments cleanly, and monitor tool health in production. You’ll also see how to catch errors, spot performance issues, and keep tools behaving consistently over time.

Deploy tools as a stateless server, agent runtime, or function

You can deploy tools using three main patterns:

- Lightweight Node server: Wrap tools in a minimal HTTP server using Express or Fastify. Useful for CLI clients, agents, or manual use.

- Serverless functions: Package each tool as a standalone function (e.g., AWS Lambda or Vercel) for automatic scaling and pay-per-use efficiency.

- Agent-side runtime: Embed tools directly in an LLM or workflow engine without exposing a network layer. Pass tools directly to the toolExecutor.

Use environment-based config for deployments

Separate environments may require:

- Different database backends

- API endpoints

- Auth tokens or credentials

Use .env files to inject API base URLs, secrets, or feature flags into runtime. Tools should never hardcode environment-specific logic.

Add logging around handler execution

Wrap each tool execution with logs for start, success, and error. Log arguments, results, and duration, redacting tokens and other secrets that arrive through the input schema.

This provides:

- Traceability for debugging

- Auditability for tool calls

- Context for user- or session-level analysis

You can also enrich logs with:

- Execution duration

- Tool version or deployment ID

- Environment (e.g., staging, prod)

Use a structured logger like Pino, Winston, or Bunyan for log aggregation.
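A logging wrapper can be sketched independently of any particular logger. Here the logger is an injected function, so Pino, Winston, or a test double can all be plugged in. The `withLogging` name and the `LogEntry` shape are assumptions. This sketch handles synchronous handlers only and deliberately leaves arguments out of the entry, so that tokens passed as inputs are not written to logs by default.

```typescript
interface LogEntry {
  tool: string;
  event: "start" | "success" | "error";
  durationMs?: number;
  errorCode?: string;
}

type Logger = (entry: LogEntry) => void;

// Wrap a synchronous handler with start/success/error logging.
function withLogging<I, O>(
  toolName: string,
  handler: (input: I) => O,
  log: Logger,
  now: () => number = Date.now
): (input: I) => O {
  return (input: I): O => {
    const startedAt = now();
    log({ tool: toolName, event: "start" });
    try {
      const output = handler(input);
      log({ tool: toolName, event: "success", durationMs: now() - startedAt });
      return output;
    } catch (error) {
      // Read a structured code if the error has one; otherwise report UNKNOWN.
      const code =
        typeof error === "object" && error !== null
          ? (error as { code?: string }).code
          : undefined;
      log({
        tool: toolName,
        event: "error",
        durationMs: now() - startedAt,
        errorCode: code !== undefined ? code : "UNKNOWN",
      });
      throw error; // logging must not swallow the failure
    }
  };
}
```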

Monitor core metrics for production observability

Whether tools are used by humans, agents, or other systems, they need to be observable. Key metrics to collect include per-tool call volume, error rate, and execution latency.

Alert on error spikes or degraded performance

Set up basic alerts to catch production issues quickly:

- Tool error rate > 5% for more than 1 minute

- Median execution time > 500ms for any tool

- Unhandled errors or unknown codes

You can also log unrecognized error shapes or retryable failures to catch regressions early.
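The first two alert rules above can be expressed as a small check over recent call samples. This is only a sketch: the `CallSample` shape and the alert names are assumptions, and the "for more than 1 minute" window is left to whatever system feeds in the samples.

```typescript
interface CallSample {
  ok: boolean;
  durationMs: number;
}

// Fraction of failed calls in the sample window (0 when there are no calls).
function errorRate(samples: CallSample[]): number {
  if (samples.length === 0) return 0;
  return samples.filter((s) => !s.ok).length / samples.length;
}

// Median execution time in milliseconds (0 when there are no calls).
function medianDurationMs(samples: CallSample[]): number {
  if (samples.length === 0) return 0;
  const sorted = samples.map((s) => s.durationMs).sort((a, b) => a - b);
  const middle = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[middle]
    : (sorted[middle - 1] + sorted[middle]) / 2;
}

// Thresholds mirror the rules listed above: error rate > 5%, median > 500 ms.
function alertsFor(samples: CallSample[]): string[] {
  const alerts: string[] = [];
  if (errorRate(samples) > 0.05) alerts.push("ERROR_RATE");
  if (medianDurationMs(samples) > 500) alerts.push("SLOW_MEDIAN");
  return alerts;
}
```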

Add distributed tracing if tools call upstream APIs

For tools that involve multiple steps or upstream API calls, add distributed tracing with OpenTelemetry or Datadog. Wrap handler internals with spans like validate_user, lookup_tasks, or persist_project to diagnose latency or failures.

Use CI to test tools before every deploy

Use CI to test handlers, validate schemas, lint tool names, and ensure no tool is missing a schema or description before deployment. With these patterns in place, your tools are safe to deploy, monitor, and evolve whether powering workflows, UIs, or autonomous agents.
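A CI lint step for the tool registry could be as small as the following sketch. The `ToolMeta` shape and the snake_case rule are assumptions based on the naming used in this guide. A real check would run against the registry that server.ts exports.

```typescript
interface ToolMeta {
  name: string;
  description?: string;
  inputSchema?: object;
  outputSchema?: object;
}

// Return a list of human-readable problems; an empty list means the registry passes.
function lintTools(tools: ToolMeta[]): string[] {
  const problems: string[] = [];
  const seen: Record<string, boolean> = {};
  for (const tool of tools) {
    if (seen[tool.name]) problems.push(tool.name + ": duplicate name");
    seen[tool.name] = true;
    // Names are expected to look like get_user or create_user_v2.
    if (!/^[a-z]+(_[a-z0-9]+)+$/.test(tool.name)) {
      problems.push(tool.name + ": name is not snake_case");
    }
    if (!tool.description) problems.push(tool.name + ": missing description");
    if (!tool.inputSchema) problems.push(tool.name + ": missing inputSchema");
    if (!tool.outputSchema) problems.push(tool.name + ": missing outputSchema");
  }
  return problems;
}
```

In CI, the job would fail whenever `lintTools` returns a non-empty list, printing each problem so the offending tool is easy to find.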

In the final section, we’ll examine how to evolve tools safely over time, including versioning schemas, migrating changes, and maintaining stable long-term interfaces.

Maintaining and evolving tools without breaking contracts

Once deployed, MCP tools become part of larger systems: internal orchestrators, automation flows, or agent toolchains. This means you can't safely rename inputs, change outputs, or remove fields without careful planning. Even a small schema tweak can silently break downstream consumers.

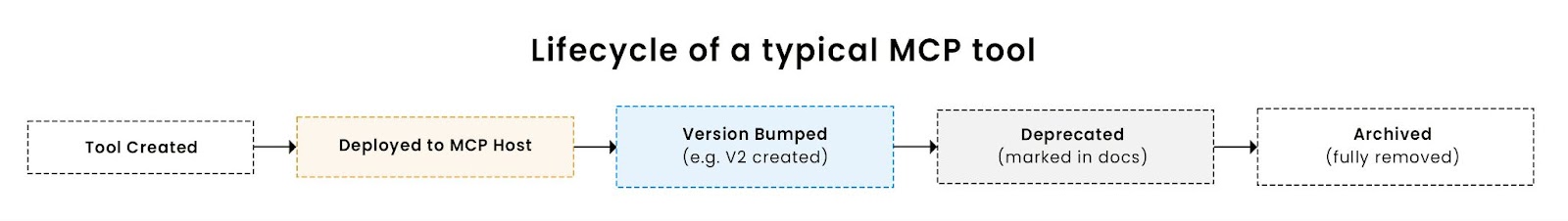

The diagram below summarizes the full lifecycle of a typical MCP tool from initial release to eventual deprecation or removal:

This section explains how to evolve your tool layer without introducing regressions. It covers versioning, schema strategy, safe deprecation, and performance optimizations, all drawn from how internal developer APIs typically evolve in production.

Use semantic versioning to manage schema changes

Tool schemas are contracts. Even adding an optional field can impact behavior for agents, tests, and downstream tooling. Adopt semantic versioning to communicate the risk and scope of changes.

- Patch version (1.2.3 → 1.2.4): Safe refactors that don’t change the input/output contract (e.g., performance fixes, logging improvements).

- Minor version (1.2.3 → 1.3.0): Additive changes that don’t break existing usage (e.g., optional fields, new metadata).

- Major version (1.2.3 → 2.0.0): Breaking changes (e.g., renamed fields, deleted outputs, changed validation).

For breaking changes, prefer defining a new tool (create_user_v2) instead of modifying the original. This protects consumers who depend on stable behavior.

Design schemas with change in mind

A schema that evolves well does not assume too much up front.

- Favor optional fields: It’s safe to add new optional fields later. Making something required later will break clients.

- Use consistent field names across tools: Stick to names like userId, taskIds, projectId across schemas to simplify mental models and tool chaining.

- Avoid fragile array structures: Don't rely on array index positions for meaning unless necessary.

- Reserve metadata fields: Leave room in output schemas for expandable objects like meta, which can be extended later.

These habits make it easier to grow your tool layer without major rewrites.

Deprecate tools carefully, not aggressively

When a tool becomes obsolete, for example, if create_user is replaced by a more robust version, don’t remove it immediately. Instead:

- Mark the tool as deprecated in its description

- Link to the replacement tool (e.g., “Use create_user_v2 instead”)

- Retain the existing schema and handler for now

- Monitor usage before scheduling removal

Many systems may still depend on older tools. Removing them too quickly can break orchestrations and lead to runtime failures. If you do plan to remove a deprecated tool, communicate clearly and version your tool server accordingly.

Optimize performance without changing observable behavior

Even when inputs and outputs don’t change, the tool’s internals can evolve to improve performance. Do so cautiously, ensuring behavior remains consistent.

Common optimizations:

- Response caching: Tools like get_user or list_tasks can return cached responses when input args match.

- Connection pooling: If calling external services, reuse HTTP clients or database pools to reduce latency.

- Batching variants: If you call get_user in a loop, consider offering get_users_batch to reduce roundtrips.

- Lazy hydration: Delay fetching nested data unless requested explicitly via a flag like expandDetails: true.

Always measure tool performance before and after the change to ensure the desired result. Keep schema outputs and error behavior identical to avoid unintentional regressions.

Keep tools stateless and side-effect free

MCP tools are designed to be reusable and context-independent. Avoid introducing hidden state or memory leaks.

- Don’t keep request-specific data in globals or long-lived objects; any response cache should be deliberate, bounded, and keyed on input args

- Always clear timers or streams used during execution

- Use inputs to pass all context (e.g., sessionToken, environmentId)

This ensures tools behave predictably when reused by different agents, workflows, or automation layers.

Turn legacy endpoints into reliable, composable tools

In this guide, we walked through how to turn an existing REST API into a maintainable MCP tool layer. You saw how to group endpoints by intent, reshape OpenAPI schemas into clean inputs and outputs, and model common patterns like batching, filtering, auth, and error handling, all grounded in working code.

This structure isn’t just about naming or abstraction. MCP tools act as a long-term contract between your backend and anything built on top: agents, orchestrators, no-code platforms, or custom UIs. A clean tool layer makes those systems safer, faster, and easier to extend.

If you already have a Node project with tool definitions, the next step is to expose them to MCP Hosts. That means either deploying your tool registry as an HTTP server or bundling it into a runtime that the MCP ecosystem can call. You can use the example project in this guide as a starting point, fork it, extend it, and adapt it to your needs.

FAQ

1. How should I handle shared validation logic across multiple MCP tools?

Shared validation logic (like email format, date ranges, or user ID checks) should be encapsulated in reusable schema definitions or helper functions. Use a schema library like Zod or Joi to define common objects (User, Project, TaskInput), and import these into each tool’s inputSchema or outputSchema. This ensures consistency across tools and reduces the risk of divergence as your API evolves.

2. What’s the best strategy for integrating authentication and authorization into MCP tool handlers?

Avoid global auth middleware. Instead, make authentication explicit in each tool by accepting tokens or user context in the inputSchema. Inside the handler, verify the token and enforce permission checks locally. This ensures tools remain stateless, testable, and secure even when reused in different contexts (e.g., agent runtime, no-code flow, backend orchestration).

3. How do I avoid performance regressions when evolving tool internals?

Before optimizing any tool handler, write regression tests that validate outputs under known inputs. Use benchmarking to measure execution time before and after changes. If you introduce caching, lazy loading, or batching, make sure that outputs and error behavior remain identical. Also, consider instrumenting each tool with timing logs or Prometheus metrics to detect regressions early in CI or staging.

4. What’s the best way to structure tests for a large set of MCP tools?

Use a layered testing strategy:

- Unit tests for each tool’s handler, verifying inputs, outputs, and error behavior.

- Schema tests that validate each inputSchema and outputSchema against expected contracts.

- Integration tests that compose tools together to simulate full workflows.

Mock any external dependencies (DB, APIs) to keep tests fast and deterministic. Reset state between tests to ensure independence.

5. How should I handle downstream failures in tools that call external APIs?

Wrap external calls with retry logic and circuit breakers where safe (e.g., for idempotent GETs). Structure errors using a predictable format: include a code, a message, and optionally retryable or retryAfterMs. Avoid leaking raw HTTP responses or exception traces. If an upstream service is down, tools should fail gracefully and return actionable error messages so orchestrators or agents can respond intelligently.
