Tool calling authentication for AI agents

TL;DR

- Tool calling: AI agents securely call external tools using scoped tokens and delegated authentication and authorization, bypassing login redirects and user sessions. Each tool call invokes a tool function and returns its result as a structured function response.

- Agent-specific tokens: Each agent receives a unique, scoped token via one-time user consent, ensuring granular permission control and clear audit trails for agent actions. Each token is tied to the agent’s identity and mapped to the tool schemas it is allowed to call.

- Token rotation: Tokens are issued dynamically with short TTLs and rotated automatically, so agents never hold long-lived credentials that could be compromised. Tool call arguments are handled securely throughout, which matters for AI agents operating at scale.

- Credential management: Agents fetch credentials on demand for each tool, which avoids hardcoded secrets and keeps agents stateless and auditable across workflows. Each tool call ID is logged with its result for later reference.

- Audit logging: Structured logs with correlation IDs, immutable audit trails, and real-time monitoring support compliance and forensic analysis of agent actions. These logs capture tool call IDs so each call can be traced to its tool schema and result, and they can be surfaced through an MCP server when tools are exposed via the Model Context Protocol (MCP).

Why tool calling breaks authentication

AI agents can’t use standard login flows when calling external APIs

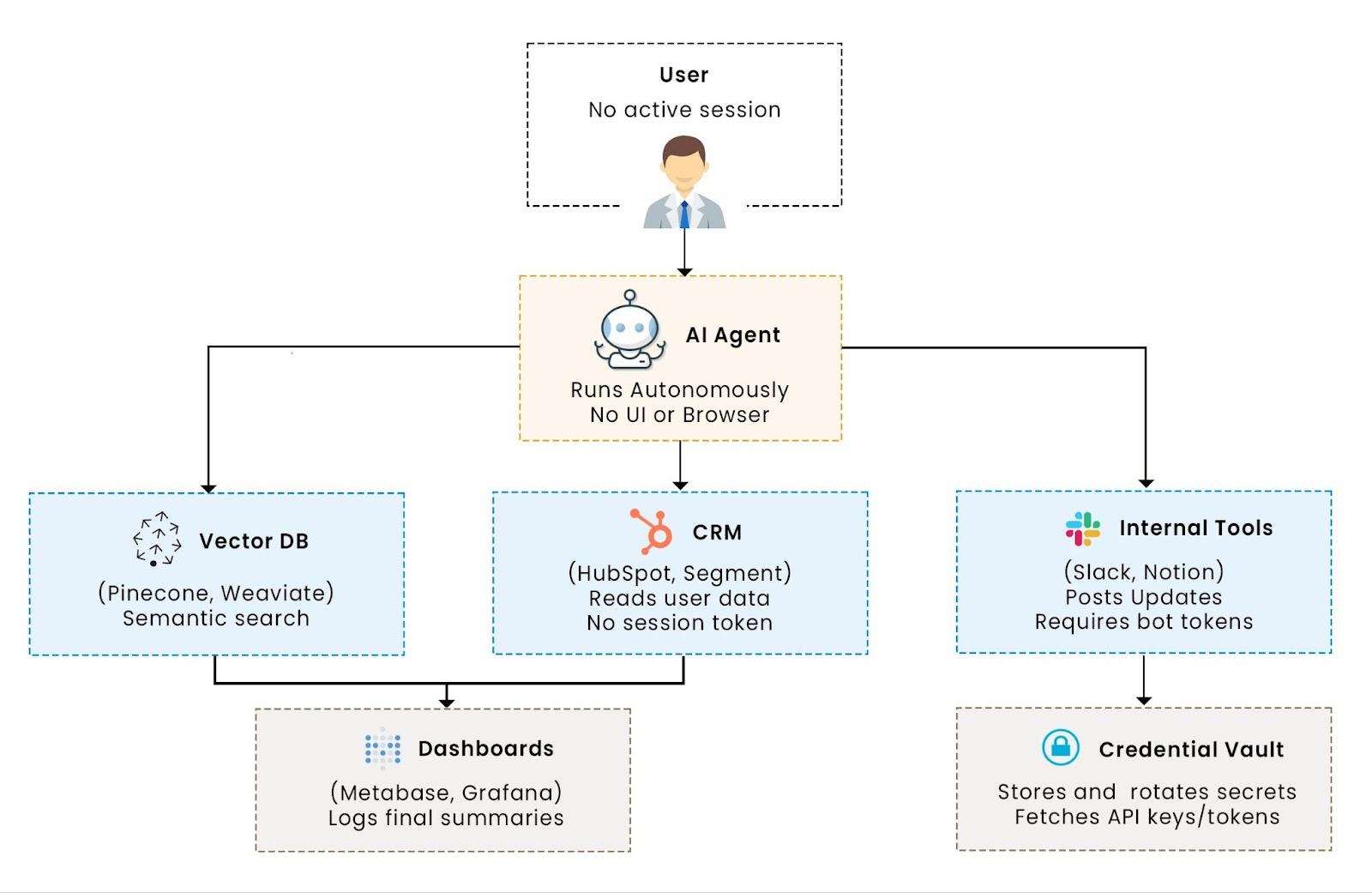

Modern AI agents don’t just generate text; they take actions. Tool calling is a method by which agents invoke real-world functionality, such as calling APIs, querying databases, posting updates to SaaS applications, and chaining those steps together into workflows. Unlike traditional user-triggered flows, tool-calling agents operate independently, retrieving customer data, enriching profiles, sending alerts, or updating dashboards, all without requiring human input or UI clicks. These actions can be automated with prebuilt tools and model providers that integrate through tool call arguments and structured authentication flows.

Autonomous AI agents are moving beyond chat interfaces. To ground the concepts in this guide, let’s walk through a hypothetical agent called SupportBot. It’s designed for customer operations and automates tasks like pulling CRM data from Salesforce, searching embeddings of historical tickets in Pinecone, posting follow-ups in Slack, and updating dashboards in Metabase. No human is present at each step to initiate access, which means its authentication flows cannot depend on browser redirects.

That’s a sharp break from traditional app flows. When a user interacts with a ChatGPT plugin, the plugin makes a one-time call to your API, such as fetching a document or triggering a webhook, using the user’s session and permissions. But when an agent runs in the background, it doesn’t just make one call. It orchestrates a chain: querying a vector database like Weaviate, pushing updates into tools like Notion or Slack, fetching customer profiles from Segment, and logging results into a business intelligence tool like Metabase. And it does all of this without any human present to approve or initiate access. These interactions are facilitated through tool schemas and structured response formats, ensuring consistency.

This is what makes tool calling different and why it breaks traditional authentication.

OAuth was designed around human-in-the-loop consent. The user logs in. The browser redirects. The user approves scopes. This works for web apps. For example, when you connect your Google account to a fitness app, a redirect flow grants access to your calendar. But agents don’t have browsers. They don’t wait for buttons to be clicked. A data enrichment agent won’t stop and ask a human before retrieving contact info from Clearbit or updating CRM entries; it needs credentials ahead of time.

They need delegated, secure, and autonomous ways to authenticate across tools.

This guide breaks down the architectural and security challenges of tool-calling authentication and shows how agents authenticate safely using delegated credentials, scoped tokens, and secure vaults across APIs, services, and databases, even when no user is present.

Understanding tool-calling flows

Tool-calling agents interact with multiple APIs, often without a user present. In a tool-calling workflow, an AI agent doesn’t just make one API call; it runs multi-step processes across several tools. For example:

- It might query a vector database like Pinecone or Weaviate to retrieve semantically similar documents.

- Then fetch customer records from a CRM like Salesforce or HubSpot.

- Post updates into Slack or Notion to alert internal teams.

- And log summaries into dashboards using Metabase or Grafana.

Each of these tools has its own authentication model, and most weren’t built with autonomous agents in mind. Each tool returns its results in the shape defined by its tool schema, and those schemas can also be exposed via an MCP server for consistent discovery and invocation.

That’s where tool calling diverges from traditional API usage. In a typical web app, a user clicks a button, and the app makes a single API call using a session token tied to that login. Tool-calling agents don’t have that context. They must authenticate and act on their own across multiple services, without user sessions or UIs. A tool call ID tracks each of these interactions, which helps organize and manage tool call arguments.

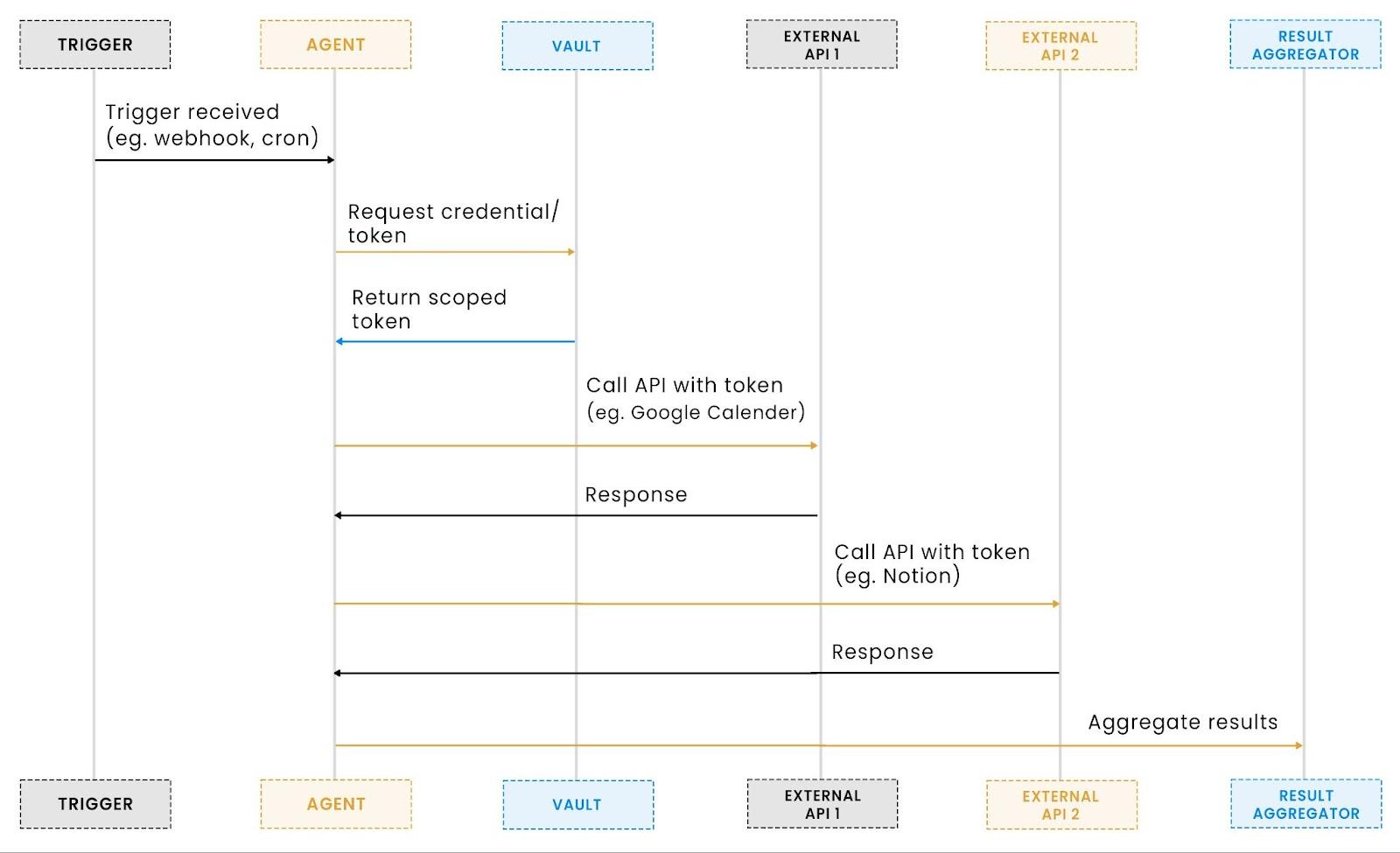

Agent tool calling workflow

Each API in that chain may require a different type of authentication: OAuth tokens, API keys, or signed credentials. The agent identity must be known to the credential system so that it can issue the right scoped token at the right time.

Code example: Agent making authenticated API call to external service

This snippet shows SupportBot posting a message to a Slack channel using an API token retrieved securely from a credential vault. Rather than hardcoding secrets or relying on a user session, the agent dynamically fetches the right token based on its identity (agentId) and target service (slack-bot-token).
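A minimal TypeScript sketch of that call follows. It assumes a hypothetical CredentialVault interface with a getSecret(agentId, secretName) method and injects the HTTP client (SendFn) so the example runs without a network. The endpoint and JSON body follow Slack's chat.postMessage Web API method; the demo vault, token value, and channel are placeholders.

```typescript
// Minimal HTTP types so the sketch runs offline; swap in fetch in practice.
interface HttpRequest {
  url: string;
  method: "GET" | "POST";
  headers: Record<string, string>;
  body?: string;
}
interface HttpResponse {
  status: number;
  body: string;
}
type SendFn = (req: HttpRequest) => Promise<HttpResponse>;

// Hypothetical vault interface: resolves a secret by agent identity and secret name.
interface CredentialVault {
  getSecret(agentId: string, secretName: string): Promise<string>;
}

// Posts to Slack's chat.postMessage. The token is fetched per call and never cached here.
async function postSupportUpdate(
  vault: CredentialVault,
  send: SendFn,
  agentId: string,
  channel: string,
  text: string,
): Promise<boolean> {
  const token = await vault.getSecret(agentId, "slack-bot-token");
  const res = await send({
    url: "https://slack.com/api/chat.postMessage",
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json; charset=utf-8",
    },
    body: JSON.stringify({ channel, text }),
  });
  // Slack reports most API errors as HTTP 200 with {"ok": false}.
  return res.status === 200 && JSON.parse(res.body).ok === true;
}

// Usage with in-memory stand-ins for the vault and the HTTP client.
const demoVault: CredentialVault = {
  getSecret: async (agentId, name) => {
    if (agentId !== "support-agent-1" || name !== "slack-bot-token") {
      throw new Error("access denied");
    }
    return "xoxb-demo-token"; // placeholder, not a real token
  },
};
const fakeSlack: SendFn = async (req) => ({
  status: 200,
  body: JSON.stringify({ ok: req.headers.Authorization === "Bearer xoxb-demo-token" }),
});
```

Because the vault lookup sits inside postSupportUpdate, swapping or rotating the Slack token never touches the calling code.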

This reflects a common pattern in tool-calling architectures:

- The agent is stateless and doesn’t hold long-lived secrets.

- Tokens are scoped to a specific service and purpose (in this case, posting to a support channel).

- Authentication is decoupled from the business logic, handled through a vault or credential broker.

By isolating token access from the actual API call, agents remain flexible, auditable, and secure across multi-tool workflows. This is the core pattern in nearly every agent-tool interaction. The hard part isn’t making the call; it’s managing the token. Next, we’ll look at the authentication patterns that make secure tool calling possible.

Authentication patterns for tool calling

Tool-calling agents can’t rely on browser-based login flows. Instead, they use machine-to-machine authentication methods that support autonomous access to external APIs. Depending on the service and the agent’s responsibilities, three common patterns emerge.

Service-to-service authentication: Some APIs allow agents to authenticate directly using pre-issued credentials such as API keys, OAuth 2.0 client credentials, or signed JWTs. These flows don’t require user presence and are ideal when the agent is acting on its own behalf, not on behalf of a specific user.

Example: An analytics agent pulling usage metrics from an internal metrics API using a pre-issued service token.
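For the client credentials variant, the agent exchanges its own client ID and secret for a short-lived access token. The sketch below only builds the token request defined in RFC 6749 (section 4.4) and turns the JSON response into a token with an absolute expiry. The token URL, client ID, and metrics:read scope are hypothetical, and the 300-second fallback TTL is an assumption for providers that omit expires_in.

```typescript
interface TokenRequest {
  url: string;
  headers: Record<string, string>;
  body: string;
}

interface TokenResponse {
  access_token: string;
  token_type: string;
  expires_in?: number; // RECOMMENDED by RFC 6749, not required
}

// Builds a client credentials token request (RFC 6749, section 4.4).
function buildClientCredentialsRequest(
  tokenUrl: string,
  clientId: string,
  clientSecret: string,
  scopes: string[],
): TokenRequest {
  const form = new URLSearchParams({ grant_type: "client_credentials", scope: scopes.join(" ") });
  // HTTP Basic client authentication (RFC 6749, section 2.3.1): URL-encode, then Base64.
  const basic = btoa(`${encodeURIComponent(clientId)}:${encodeURIComponent(clientSecret)}`);
  return {
    url: tokenUrl,
    headers: {
      Authorization: `Basic ${basic}`,
      "Content-Type": "application/x-www-form-urlencoded",
    },
    body: form.toString(),
  };
}

// Turns the token endpoint's JSON response into a token plus an absolute expiry (epoch ms).
function toCachedToken(json: string, now: number = Date.now()): { token: string; expiresAt: number } {
  const parsed = JSON.parse(json) as TokenResponse;
  const ttlSec = parsed.expires_in ?? 300; // assumption: conservative default when omitted
  return { token: parsed.access_token, expiresAt: now + ttlSec * 1000 };
}

// Usage with hypothetical values for an internal metrics API.
const tokenRequest = buildClientCredentialsRequest(
  "https://auth.example.com/oauth/token",
  "analytics-agent",
  "placeholder-secret",
  ["metrics:read"],
);
```

Storing expiresAt as an absolute timestamp lets the caller check freshness with a single comparison before each tool call.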

Delegated authentication: When an agent needs to act on behalf of a user, it requires delegated access. This often uses OAuth access tokens that were initially authorized by a user and later used by the agent. The challenge is issuing these tokens without requiring repeated interaction, while enforcing authentication and authorization boundaries per task.

Example: A user grants access to their CRM account once, and the agent stores a refreshable token to pull customer updates or deal statuses later.
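Behind that example, the vault holds the user's refresh token and later exchanges it for new access tokens using the refresh_token grant from RFC 6749 (section 6). The sketch assumes a hypothetical DelegatedGrant record shape. Its scope check reflects the RFC's rule that a refresh request must not ask for scopes the user never granted; narrowing is allowed, widening is rejected.

```typescript
// What the vault keeps after the user's one-time consent (hypothetical shape).
interface DelegatedGrant {
  userId: string;
  agentId: string;
  provider: string;
  grantedScopes: string[];
  refreshToken: string; // stays inside the vault; agents never receive it
}

// Builds the form body for an OAuth 2.0 refresh (RFC 6749, section 6).
// Requested scopes may narrow the original grant but must not exceed it.
function buildRefreshForm(
  grant: DelegatedGrant,
  clientId: string,
  scopes: string[] = grant.grantedScopes,
): URLSearchParams {
  const excess = scopes.filter((s) => !grant.grantedScopes.includes(s));
  if (excess.length > 0) {
    throw new Error(`requested scopes exceed the user's grant: ${excess.join(", ")}`);
  }
  // A confidential client would also authenticate itself when sending this request.
  return new URLSearchParams({
    grant_type: "refresh_token",
    refresh_token: grant.refreshToken,
    client_id: clientId,
    scope: scopes.join(" "),
  });
}

// Usage: a CRM grant the user approved once; the agent later needs only deal reads.
const crmGrant: DelegatedGrant = {
  userId: "user-42",
  agentId: "support-agent-1",
  provider: "hubspot",
  grantedScopes: ["crm.objects.contacts.read", "crm.objects.deals.read"],
  refreshToken: "placeholder-refresh-token",
};
const refreshForm = buildRefreshForm(crmGrant, "support-bot-client", ["crm.objects.deals.read"]);
```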

API key management

Even when APIs use static keys, agents still need a secure way to store and retrieve them. Hardcoding secrets or injecting them via environment variables creates security risks and audit gaps. A secure key vault or token service should handle storage, access control, and rotation, and should log how authentication and authorization decisions were applied to each request.

Here’s an example of how an agent retrieves a scoped API key at runtime using a credential broker, without storing any secrets of its own:
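The sketch below is one way such a broker could look, not Scalekit's API. CredentialBroker, its nested policy map (agent, then service, then task), and the mintKey callback are hypothetical. A real broker would mint keys through the provider or a secret manager and persist its audit trail instead of keeping it in memory.

```typescript
interface IssuedKey {
  key: string;
  scopes: string[];
  expiresAt: number; // epoch ms
}
interface AccessRecord {
  agentId: string;
  service: string;
  task: string;
  at: number;
  granted: boolean;
}
// Hypothetical policy: agentId -> service -> task -> scopes that task may use.
type Policy = Record<string, Record<string, Record<string, string[]>>>;
type MintKey = (service: string, scopes: string[], ttlMs: number) => string;

class CredentialBroker {
  private readonly log: AccessRecord[] = [];
  private readonly policy: Policy;
  private readonly mintKey: MintKey;
  private readonly ttlMs: number;

  constructor(policy: Policy, mintKey: MintKey, ttlMs = 5 * 60_000) {
    this.policy = policy;
    this.mintKey = mintKey;
    this.ttlMs = ttlMs;
  }

  // One call per tool invocation: identity and task context in, scoped short-lived key out.
  issue(agentId: string, service: string, task: string, now: number = Date.now()): IssuedKey {
    const scopes: string[] | undefined = this.policy[agentId]?.[service]?.[task];
    // Every request is recorded, including denied ones.
    this.log.push({ agentId, service, task, at: now, granted: scopes !== undefined });
    if (scopes === undefined) {
      throw new Error(`${agentId} may not use ${service} for "${task}"`);
    }
    return { key: this.mintKey(service, scopes, this.ttlMs), scopes, expiresAt: now + this.ttlMs };
  }

  auditTrail(): readonly AccessRecord[] {
    return this.log;
  }
}

// Usage: SupportBot may post support updates to Slack and nothing else.
const broker = new CredentialBroker(
  { "support-agent-1": { slack: { "post-support-update": ["chat:write"] } } },
  (service, scopes) => `demo-${service}-${scopes.join("+")}`, // stand-in for real key minting
);
const { key } = broker.issue("support-agent-1", "slack", "post-support-update");
// Use `key` for the Slack call, then let it go out of scope instead of caching it.
```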

What this does:

- SupportBot makes a single call to a credential vault, passing its own identity and task context.

- The vault returns a scoped, time-bound API key just for the service (in this case, Slack).

- The agent uses this key to make the actual tool call, then discards it. No secrets are hardcoded, and all access is tracked.

This is a pattern we’ve implemented in production with agents that call services like Slack, Segment, and internal APIs. It makes token handling auditable, minimizes blast radius, and keeps agents stateless.

In the next section, we’ll go deeper into token-based flows, especially for third-party services that require OAuth. You’ll see how agents manage refresh cycles, scope control, and failure recovery.

Token-based tool authentication

Many third-party APIs like Google, Salesforce, and HubSpot require OAuth tokens. In a typical app, users complete the OAuth flow interactively via a browser. But tool-calling agents can’t do that. They operate in the background. No UI. No redirect. No human-in-the-loop. Instead, agents rely on delegated access: a token granted once (via user consent), then reused securely behind the scenes. This delegated model preserves the separation of duties between business logic and credentials for secure AI agents.

For this to work safely, agents must enforce scoping, handle token lifecycles, and design for failure recovery.

Token scoping

Tokens must be scoped narrowly. For example, if an agent only needs to read CRM contacts, the token should not include write permissions, file access, or email scopes. To do this:

- When initiating the OAuth grant (e.g., via your IdP), define the exact scopes needed.

- On the server, map each agent task to a minimum required permission set.

- During delegation, retrieve only tokens pre-scoped for that task.

Here’s what that might look like:
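As a sketch, assuming a hypothetical per-task scope table and a per-agent task allow-list, the vault-side check could look like this. The HubSpot-style scope strings are illustrative; use whatever scope names your provider defines.

```typescript
type Task = "read-crm-contacts" | "update-deal-status";

// Illustrative task-to-scope table: each task lists the minimum scopes it needs.
const TASK_SCOPES: Record<Task, readonly string[]> = {
  "read-crm-contacts": ["crm.objects.contacts.read"],
  "update-deal-status": ["crm.objects.deals.read", "crm.objects.deals.write"],
};

// Hypothetical allow-list of tasks per agent identity.
const AGENT_TASKS: Record<string, readonly Task[]> = {
  "support-agent-1": ["read-crm-contacts"],
};

// Returns the scopes a token may carry for this agent and task, or throws.
function scopesFor(agentId: string, task: Task, requested?: string[]): string[] {
  const tasks: readonly Task[] = AGENT_TASKS[agentId] ?? [];
  if (!tasks.includes(task)) {
    throw new Error(`${agentId} is not allowed to perform ${task}`);
  }
  const allowed = TASK_SCOPES[task];
  const wanted = requested ?? [...allowed];
  // Anything beyond the task's minimum set is rejected rather than silently dropped.
  const excess = wanted.filter((scope) => !allowed.includes(scope));
  if (excess.length > 0) {
    throw new Error(`scopes not permitted for ${task}: ${excess.join(", ")}`);
  }
  return wanted;
}

// Usage: the vault calls this before requesting or releasing a token.
const readScopes = scopesFor("support-agent-1", "read-crm-contacts");
```

Rejecting excess scopes with an error, rather than trimming them, makes an over-broad request visible in logs instead of quietly succeeding.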

Every token is issued with only the permissions needed for a single tool-call flow. The vault enforces which scopes can be requested based on the task and SupportBot’s identity.

Token lifecycle

Tokens expire. Agents can’t be allowed to fail just because a user isn’t online. So the refresh logic needs to be completely autonomous. Best practice: store refresh tokens in a secure vault or broker. When the agent needs access:

- It asks the vault for a valid access token.

- If it has expired, the vault silently refreshes it.

- The agent never sees the refresh token or manages the expiry logic.
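A minimal sketch of the vault side of this lifecycle follows. It assumes a hypothetical TokenVault class and a Refresher callback that wraps the provider's refresh_token request. The agent only ever calls getAccessToken; the 60-second early-refresh margin is an assumption meant to absorb clock skew.

```typescript
// What the vault stores after the user's one-time consent (hypothetical shape).
interface StoredGrant {
  accessToken: string;
  refreshToken: string; // never returned to the agent
  expiresAt: number; // epoch ms
}

// Result of calling the provider's token endpoint with grant_type=refresh_token.
interface RefreshResult {
  accessToken: string;
  refreshToken?: string; // present when the provider rotates refresh tokens
  expiresInSec: number;
}
type Refresher = (refreshToken: string) => Promise<RefreshResult>;

class TokenVault {
  private readonly grants = new Map<string, StoredGrant>();
  private readonly refresh: Refresher;
  private readonly skewMs: number;

  constructor(refresh: Refresher, skewMs = 60_000) {
    this.refresh = refresh;
    this.skewMs = skewMs; // refresh this early to absorb clock skew
  }

  store(grantKey: string, grant: StoredGrant): void {
    this.grants.set(grantKey, grant);
  }

  // The only method agents call: it returns an access token and nothing else.
  async getAccessToken(grantKey: string, now: number = Date.now()): Promise<string> {
    const grant = this.grants.get(grantKey);
    if (!grant) throw new Error(`no delegated grant stored for ${grantKey}`);
    if (now < grant.expiresAt - this.skewMs) return grant.accessToken;

    const fresh = await this.refresh(grant.refreshToken);
    const updated: StoredGrant = {
      accessToken: fresh.accessToken,
      // Keep the old refresh token if the provider did not rotate it.
      refreshToken: fresh.refreshToken ?? grant.refreshToken,
      expiresAt: now + fresh.expiresInSec * 1000,
    };
    this.grants.set(grantKey, updated);
    return updated.accessToken;
  }
}
```

One gap worth knowing: two concurrent calls for the same expired grant would both trigger a refresh here. A production vault would typically share a single in-flight refresh per grant, especially with providers that rotate refresh tokens on every use.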

End-to-end implementation example

This is a full flow where the agent fetches a valid token for HubSpot, makes a request, and handles expiry.
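The sketch below follows that flow in TypeScript. The ScopedTokenVault interface is hypothetical, modeled on the vault.getScopedToken call named under Key behaviors. The forceRefresh flag is an assumed way to bypass a cached token, and the URL is HubSpot's CRM v3 contacts endpoint. A 401 triggers one retry with a fresh token, and a second 401 is treated as revoked access.

```typescript
interface HttpRequest {
  url: string;
  method: "GET" | "POST";
  headers: Record<string, string>;
}
interface HttpResponse {
  status: number;
  body: string;
}
type SendFn = (req: HttpRequest) => Promise<HttpResponse>;

// Hypothetical vault interface modeled on vault.getScopedToken.
// forceRefresh is an assumed flag that skips any cached access token.
interface ScopedTokenVault {
  getScopedToken(req: {
    agentId: string;
    tool: string;
    scopes: string[];
    forceRefresh?: boolean;
  }): Promise<string>;
}

class AccessRevokedError extends Error {}

const CONTACTS_URL = "https://api.hubapi.com/crm/v3/objects/contacts?limit=10";

// Stateless: the token lives only for the duration of one request.
async function listContacts(vault: ScopedTokenVault, send: SendFn, agentId: string): Promise<unknown[]> {
  const attempt = async (forceRefresh: boolean): Promise<HttpResponse> => {
    const token = await vault.getScopedToken({
      agentId,
      tool: "hubspot",
      scopes: ["crm.objects.contacts.read"],
      forceRefresh,
    });
    return send({ url: CONTACTS_URL, method: "GET", headers: { Authorization: `Bearer ${token}` } });
  };

  let res = await attempt(false);
  if (res.status === 401) {
    // The token expired between issue and use: get a fresh one and retry once.
    res = await attempt(true);
    if (res.status === 401) {
      // Still rejected with a fresh token: treat it as revoked and surface it to the workflow.
      throw new AccessRevokedError(`HubSpot access for ${agentId} appears to be revoked`);
    }
  }
  if (res.status !== 200) throw new Error(`HubSpot returned HTTP ${res.status}`);
  return (JSON.parse(res.body) as { results: unknown[] }).results;
}
```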

Key behaviors:

- vault.getScopedToken handles scoping and expiry checks, and logs which agent asked for which tool.

- The agent is stateless; it doesn't store tokens.

- SupportBot handles token expiry or revocation in-flight (e.g., if the user revokes access).

- The API call logic remains clean and reusable.

By designing tokens this way (scoped, refreshable, and decoupled), you create a secure, resilient foundation for tool calling across any external service.

In the next section, we’ll cover what happens when your agent needs to call multiple tools, each with its own token model, and how to manage that complexity with credential coordination.

Managing credentials across multiple tools

Agents often need to juggle multiple auth methods across services.

Real-world agent workflows rarely interact with just one API. A single task might involve calling Google Calendar, Salesforce, and Notion, each requiring different authentication methods, token formats, or credential types. When interacting with multiple services, agents fetch credentials on demand, so each tool call uses the credential and tool schema that belong to that tool.

For SupportBot, those credentials come from a centralized vault, and each tool call ID is tied to the appropriate credential type and scope, which keeps access secure and credential rotation manageable.

This complexity compounds fast. Some services expect OAuth tokens scoped per user. Others require static API keys. A few rely on service accounts, signed requests, or mTLS. The agent must coordinate them all securely and in real time.

Credential management

Agent workflows often span multiple tools, each with its own authentication method. One agent might query Pinecone with an API key, pull customer data from HubSpot using OAuth, and log results to Metabase using a service token. These methods are not interchangeable, and agents must be able to handle them all.

To do this securely, agents need a way to store and retrieve credentials at runtime, without hardcoding secrets or maintaining persistent sessions.

How agents manage credentials across services

Credentials are stored in a centralized vault. Each record links an agent to the tool, the credential type, and its scope. This allows the agent to request only what it needs, when it needs it.

Example structure in a credential vault:
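Expressed as TypeScript data, such records might look like the following. The field names, scope strings, and secretRef paths are hypothetical. The point is that each record ties one agent to one tool, one credential type, and a scope list, and points at the secret instead of containing it.

```typescript
type CredentialType = "api_key" | "oauth2" | "service_token";

interface CredentialRecord {
  agentId: string;
  tool: string;
  type: CredentialType;
  scopes: string[];
  secretRef: string; // pointer into the secret manager, never the secret itself
}

// Illustrative records for SupportBot; scope names and paths are hypothetical.
const CREDENTIAL_RECORDS: CredentialRecord[] = [
  {
    agentId: "support-agent-1",
    tool: "pinecone",
    type: "api_key",
    scopes: ["index:query"],
    secretRef: "vault://support-agent-1/pinecone",
  },
  {
    agentId: "support-agent-1",
    tool: "hubspot",
    type: "oauth2",
    scopes: ["crm.objects.contacts.read"],
    secretRef: "vault://support-agent-1/hubspot",
  },
  {
    agentId: "support-agent-1",
    tool: "metabase",
    type: "service_token",
    scopes: ["dashboards:write"],
    secretRef: "vault://support-agent-1/metabase",
  },
];

// Finds the record for an agent/tool pair; the vault then resolves secretRef internally.
function findCredentialRecord(agentId: string, tool: string): CredentialRecord {
  const record = CREDENTIAL_RECORDS.find((r) => r.agentId === agentId && r.tool === tool);
  if (!record) throw new Error(`no credential registered for ${agentId} -> ${tool}`);
  return record;
}
```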

In this example, support-agent-1 refers to SupportBot acting in the customer support domain. Each tool-specific credential is fetched using a simple interface, with secrets resolved internally by the vault or secret manager.

Agent workflow calling multiple services

Below is a sample implementation where an agent retrieves and uses credentials for three different tools. The agent does not store any secrets locally.
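A minimal sketch of that workflow follows, assuming a hypothetical getCredential(agentId, serviceName) broker call that returns a tagged credential. The Api-Key and X-Metabase-Session header names come from Pinecone's and Metabase's APIs, and the Pinecone body uses its query fields (vector, topK). The index host and the Metabase endpoint are placeholders, and mapping a "service token" onto a Metabase session token is an assumption.

```typescript
// Credential shapes a broker might return; the tag tells each call how to authenticate.
type Credential =
  | { type: "api_key"; key: string }
  | { type: "oauth2"; accessToken: string }
  | { type: "service_token"; token: string };

interface HttpRequest {
  url: string;
  method: "GET" | "POST";
  headers: Record<string, string>;
  body?: string;
}
interface HttpResponse {
  status: number;
  body: string;
}
type SendFn = (req: HttpRequest) => Promise<HttpResponse>;
type GetCredential = (agentId: string, serviceName: string) => Promise<Credential>; // hypothetical

// Placeholder endpoints; substitute your real index host and Metabase URL.
const PINECONE_INDEX_HOST = "https://tickets-index.example.com";
const METABASE_URL = "https://metabase.example.com";

async function querySimilarTickets(get: GetCredential, send: SendFn, agentId: string, vector: number[]) {
  const cred = await get(agentId, "pinecone");
  if (cred.type !== "api_key") throw new Error(`pinecone expects api_key, got ${cred.type}`);
  return send({
    url: `${PINECONE_INDEX_HOST}/query`,
    method: "POST",
    headers: { "Api-Key": cred.key, "Content-Type": "application/json" },
    body: JSON.stringify({ vector, topK: 5 }),
  });
}

async function fetchContacts(get: GetCredential, send: SendFn, agentId: string) {
  const cred = await get(agentId, "hubspot");
  if (cred.type !== "oauth2") throw new Error(`hubspot expects oauth2, got ${cred.type}`);
  return send({
    url: "https://api.hubapi.com/crm/v3/objects/contacts?limit=10",
    method: "GET",
    headers: { Authorization: `Bearer ${cred.accessToken}` },
  });
}

async function logSummary(get: GetCredential, send: SendFn, agentId: string, summary: string) {
  const cred = await get(agentId, "metabase");
  if (cred.type !== "service_token") throw new Error(`metabase expects service_token, got ${cred.type}`);
  return send({
    url: `${METABASE_URL}/api/support-summaries`, // hypothetical endpoint
    method: "POST",
    headers: { "X-Metabase-Session": cred.token, "Content-Type": "application/json" },
    body: JSON.stringify({ summary }),
  });
}

// Runs the three steps in order; each step fetches its own credential just before use.
async function runSupportWorkflow(get: GetCredential, send: SendFn, agentId: string): Promise<number[]> {
  const similar = await querySimilarTickets(get, send, agentId, [0.12, 0.87, 0.33]);
  const contacts = await fetchContacts(get, send, agentId);
  const logged = await logSummary(get, send, agentId, "3 similar tickets, contacts refreshed");
  return [similar.status, contacts.status, logged.status];
}
```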

SupportBot fetches credentials on demand. Each call function handles the specifics of using the token or key for its respective API.

Handling authentication failures in multi-tool workflows

If a token expires or a key is revoked, the agent should catch that failure without stopping the entire workflow.

Example of how an individual tool call handles an expired token:
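Here is a hedged sketch of that pattern. ToolCall and ToolResult are hypothetical types, and getToken(true) is assumed to bypass any cached token. Failures come back as values instead of exceptions, so a loop over several tools keeps going when one of them fails.

```typescript
interface HttpResponse {
  status: number;
  body: string;
}

// Hypothetical shape of one tool invocation.
interface ToolCall {
  service: string;
  getToken(forceRefresh: boolean): Promise<string>; // forceRefresh is assumed to skip any cache
  invoke(token: string): Promise<HttpResponse>;
}

type ToolResult =
  | { ok: true; service: string; body: string }
  | { ok: false; service: string; reason: "auth" | "http" | "error"; detail: string };

// Runs one tool call. A 401 gets exactly one retry with a fresh token;
// every failure is returned as a value instead of being thrown.
async function runToolCall(call: ToolCall): Promise<ToolResult> {
  const { service } = call;
  try {
    let res = await call.invoke(await call.getToken(false));
    if (res.status === 401) {
      res = await call.invoke(await call.getToken(true));
    }
    if (res.status === 401) {
      return { ok: false, service, reason: "auth", detail: "token rejected after refresh; access may be revoked" };
    }
    if (res.status >= 400) {
      return { ok: false, service, reason: "http", detail: `HTTP ${res.status}` };
    }
    return { ok: true, service, body: res.body };
  } catch (err) {
    return { ok: false, service, reason: "error", detail: err instanceof Error ? err.message : String(err) };
  }
}

// Runs calls in order; a failed service is recorded and the loop moves on.
async function runWorkflow(calls: ToolCall[]): Promise<ToolResult[]> {
  const results: ToolResult[] = [];
  for (const call of calls) {
    results.push(await runToolCall(call));
  }
  return results;
}
```

A caller can then decide per service whether to fall back, queue a re-consent request, or skip the step, based on the reason field.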

This structure allows for service-level retries, fallbacks, or re-auth flows. Errors are caught per service, not globally, so one failure does not cancel the full process. By centralizing credential management, tagging credentials by type and scope, and isolating error handling per tool, agents can coordinate complex multi-service workflows securely and reliably.

In the next section, we’ll look at how to apply these patterns securely across different environments.


Security considerations

Secure authentication isn’t just about getting tokens; it’s about controlling and observing how they are used. When agents handle credentials to access external tools, the risks go beyond token theft. Improper scoping, leaked logs, or invisible usage patterns can lead to silent failures or serious breaches. To prevent this, log each tool call result with a correlation ID so every call can be traced across the tool-calling process. Designing observability and audit from day one is essential for secure AI agents.

By establishing immutable audit trails and using structured logs, agents can safely manage credentials across multiple tools while maintaining real-time monitoring for anomalies.

Principle of least privilege: Each credential an agent uses should be scoped to only what’s needed for that task. A token that can write to a user's entire workspace should never be used to simply read analytics dashboards. Overbroad permissions widen the blast radius if the agent is compromised.

Preventing credential leakage: Secrets must never be logged, echoed, or embedded in error messages. In tool-calling agents, this becomes especially important since failures often involve tokens or keys passed through headers or request bodies.

Agents should sanitize logs by stripping out sensitive information before logging. This prevents accidental exposure in shared environments or observability tools.

Here’s an implementation that makes an authenticated request and logs errors with token redaction:
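The sketch below hardcodes a placeholder token, 0123456789abcdef, only to stay self-contained, and injects the HTTP call and the logger so it runs offline. The redact helper, which keeps the last four characters, reflects an assumption about how much of a token is safe to show.

```typescript
interface HttpResponse {
  status: number;
  body: string;
}
type SendFn = (headers: Record<string, string>) => Promise<HttpResponse>;

// Keeps only the last `visible` characters so log readers can tell tokens apart.
function redact(secret: string, visible = 4): string {
  if (secret.length <= visible) return "*".repeat(secret.length);
  return "*".repeat(secret.length - visible) + secret.slice(-visible);
}

// Placeholder for illustration only; a real agent fetches the token from a vault per call.
const AUTH_TOKEN = "0123456789abcdef";

async function callTool(
  send: SendFn,
  token: string,
  log: (line: string) => void = console.log,
): Promise<HttpResponse | null> {
  try {
    const res = await send({ Authorization: `Bearer ${token}` });
    if (res.status >= 400) throw new Error(`HTTP ${res.status}`);
    return res;
  } catch {
    // Log the redacted token only: no raw token, headers, or request body.
    log(`Tool call failed. Auth token used: ${redact(token)}`);
    return null;
  }
}

// Usage: a stand-in API that rejects the token.
// Logs: Tool call failed. Auth token used: ************cdef
void callTool(async () => ({ status: 401, body: "" }), AUTH_TOKEN);
```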

Expected log output:

Tool call failed. Auth token used: ************cdef

In a real agent, you'd store and retrieve the token from a vault rather than hardcoding it. But this example illustrates the core idea: redact sensitive strings before logging, and avoid printing raw tokens, headers, or request bodies.

This approach allows agents to remain auditable without risking credential leaks.

Monitoring agent behavior

Unusual tool-calling patterns should raise alerts. Spikes in API calls, repeated auth failures, or access outside expected hours may indicate a compromised agent or a misconfigured credential.

Security monitoring should log:

- Which agent accessed what service

- When and how often it was called

- Whether the call was successful

- What credential was used (by ID, not raw value)

This supports incident response and post-incident review across your authentication and authorization controls. With scoped access, redacted logs, and real-time monitoring, agents can authenticate safely, even across dozens of tools.
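As an illustration, the sketch below emits one JSON line per tool call with the fields listed above and flags an agent/service pair after repeated 401 or 403 responses inside a sliding window. AuditEvent, AuthFailureMonitor, and the default of five failures in five minutes are hypothetical choices, not recommended values.

```typescript
// One structured audit event per tool call; credentials appear by ID only.
interface AuditEvent {
  correlationId: string;
  agentId: string;
  service: string;
  credentialId: string;
  timestamp: string; // ISO 8601
  success: boolean;
  status?: number;
}

// Flags an agent/service pair after repeated auth failures inside a sliding window.
class AuthFailureMonitor {
  private readonly failures = new Map<string, number[]>();
  private readonly threshold: number;
  private readonly windowMs: number;
  private readonly sink: (line: string) => void;

  constructor(threshold = 5, windowMs = 5 * 60_000, sink: (line: string) => void = console.log) {
    this.threshold = threshold;
    this.windowMs = windowMs;
    this.sink = sink;
  }

  // Emits the event as a JSON line and returns true when an alert should fire.
  record(event: AuditEvent): boolean {
    this.sink(JSON.stringify(event));
    const authFailure = !event.success && (event.status === 401 || event.status === 403);
    if (!authFailure) return false;
    const key = `${event.agentId}:${event.service}`;
    const at = Date.parse(event.timestamp);
    const previous: number[] = this.failures.get(key) ?? [];
    // Keep only failures that are still inside the window, then add this one.
    const recent = previous.filter((t) => at - t < this.windowMs);
    recent.push(at);
    this.failures.set(key, recent);
    return recent.length >= this.threshold;
  }
}

// Usage: record a successful call; repeated 401s for the same pair would trigger an alert.
const monitor = new AuthFailureMonitor();
monitor.record({
  correlationId: "c-7f3a",
  agentId: "support-agent-1",
  service: "hubspot",
  credentialId: "cred-hubspot-01",
  timestamp: new Date().toISOString(),
  success: true,
  status: 200,
});
```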

Conclusion: Building authentication for agent-based systems

Tool-calling agents like SupportBot represent a shift in how applications interact with external systems. They operate independently, span multiple services, and require secure, delegated access to APIs without user involvement. Traditional authentication flows, especially browser-based OAuth, weren’t designed for this model. The result is a growing gap between what agents need to do and what existing auth flows allow.

In this guide, we walked through the architecture of agent-initiated tool calls, the differences from user-driven API access, and the key patterns that support safe authentication: service-to-service auth, delegated token handling, secure key management, and scoped token workflows. We also covered how to coordinate authentication across APIs, databases, and SaaS tools, handle errors gracefully, and apply least-privilege principles with proper audit and monitoring.

If you're building AI agents that call APIs, this isn't an edge case; it's the foundation. Secure, scalable agent authentication is quickly becoming critical infrastructure. Rather than relying on one-off workarounds, the next wave of agent platforms will need modular, standards-aligned, and auditable auth layers that can grow with the complexity of agent workflows.

Start today by abstracting credential handling using Scalekit, eliminating static secrets, and following emerging standards. The sooner you decouple agents from user-centric login models, the faster you can build secure systems that scale.

FAQ

How can agents authenticate with external APIs without exposing secrets or relying on user interaction?

Agents should never store long-lived tokens or secrets locally. Instead, use a credential vault that supports runtime token retrieval across tools like databases, CRM platforms, and internal APIs. For user-delegated access, the vault securely stores and refreshes the token. The agent fetches scoped, short-lived credentials on demand, making calls stateless and secure even when users aren't present.

What’s the right way to handle multiple auth types (OAuth, API keys, service tokens) in one agent workflow?

Introduce an abstraction layer between the agent and the credentials. This could be a centralized vault or credential broker that exposes a consistent interface (e.g., getCredential(agentId, serviceName)) while internally resolving the right auth method. This lets secure AI agents remain agnostic to whether a tool requires an OAuth token, an API key, or a service token. It also preserves clear authentication and authorization boundaries.

How does Scalekit Agent Connect handle OAuth token refresh?

Scalekit automatically refreshes OAuth tokens in the background using stored refresh tokens. Once a user completes the initial grant, Agent Connect stores the access and refresh tokens securely. When an agent requests access, Scalekit ensures the returned token is fresh; agents never see refresh tokens or need to manage expiry windows themselves. This refresh handling can also be combined with tool exposure through an MCP server for uniform discovery and policy enforcement.

Can Scalekit help prevent token leakage or over-permissioning?

Yes. Scalekit enforces fine-grained access policies per agent and per credential. Agents only receive scoped, short-lived tokens based on the task context. All credentials are redacted in logs, never returned in plaintext outside of request scope, and every request is logged with audit metadata (agent ID, credential type, scope, timestamp).

How do I handle token expiration or failure mid-agent workflow?

Design agents to treat authentication errors, like a 401 Unauthorized, as recoverable signals. Use retry logic that can fetch a fresh token from your vault. In multi-step workflows, ensure each API call requests a new token when needed, rather than assuming one token can span the full chain. Scalekit simplifies this by guaranteeing that every request to Agent Connect yields a fresh, scoped credential valid for immediate use.

Want to enable secure, autonomous tool calls by your AI agents? Sign up for a free account with Scalekit and get scoped tokens, credential vaulting, and audit logging built in. Need help mapping tool schemas or integrating agent workflows? Book time with our auth experts.